Robots already stand in for humans in some of the dullest and most dangerous jobs there are, handling everything from painting cars to drilling rocks on Mars. And if you listen to the hype about the potential of drones and autonomous vehicles, it's just a matter of time before robots do more. These future autonomous handymen and handywomen will deliver packages, take us to the airport, or handle less romantic tasks like shuffling freight containers and helping bedridden patients.

There's just one problem: robots are dumb.

Despite all of the science fiction over the past half-century that has foretold the coming of intelligent, autonomous mechanical beings that attain consciousness (Neill Blomkamp's Chappie being the latest), robots generally remain limited to the most basic of programmed tasks. Even the most advanced and deadliest of unmanned aerial vehicles depend heavily on their network tethers back to human beings. Otherwise, they're nothing more than glorified model aircraft on autopilot.

For robots to do tasks that are relatively simple for humans (like driving a car, fetching a tool from a toolbox, passing something to a human co-worker, or repairing a broken object), they need to be a lot more intelligent. But putting the computing power required for that intelligence onboard a robot, absent Isaac Asimov's positronic brain, would make robots prohibitively expensive, bulky, and power-hungry. (Exhibit A: Northrop Grumman's X-47B and the UCLASS robot fighter/bomber that will follow it.)

But smart, mass-market robotics isn't an impossible goal. In fact, the building blocks for such a change may exist today: they're the same technologies that have driven the "app" economy, software as a service, and the Internet startup economy. Specifically, researchers have increasingly looked to the power of cloud computing and high-speed networking in recent years to bring more cognitive capabilities to robots. Cloud technologies that were pioneered to help human beings process information appear to be the key to making robots act more intelligently, too.

The basics of the new botnet

"Up to this point, the performance of a mobile robot is largely limited by the amount of memory or computation that it has onboard," Dr Chris Jones, Director for Research Advancement at iRobot, told Ars. "And if you're trying to hit price points that make sense, that computation and memory can be fairly small. So by connecting to the cloud, you end up with a lot more resources at your disposal."

Jones cites an array of possible benefits, from more memory and computation available in the cloud to the broader advantages of constant connectivity. With a robot that's always networked, developers could offload computationally demanding work, such as advanced perception or navigation, to remote servers.
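To make the trade-off concrete, here is a minimal Python sketch of the kind of decision a networked robot might make: run a task on its own processor, or ship the sensor data to the cloud. Everything in it is an assumption for illustration, including the `Task` fields, the function names, and the throughput and latency figures; none of it comes from iRobot or any real product.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """A unit of work for the robot (all fields are illustrative assumptions)."""
    name: str
    operations: float      # total compute operations the task needs
    payload_bytes: float   # sensor data that must be uploaded if offloaded


def local_time(task: Task, robot_ops_per_s: float) -> float:
    """Seconds to finish the task on the robot's own processor."""
    return task.operations / robot_ops_per_s


def cloud_time(task: Task, cloud_ops_per_s: float,
               uplink_bytes_per_s: float, round_trip_s: float) -> float:
    """Seconds to upload the data, compute remotely, and get the answer back."""
    upload = task.payload_bytes / uplink_bytes_per_s
    compute = task.operations / cloud_ops_per_s
    return round_trip_s + upload + compute


def should_offload(task: Task, robot_ops_per_s: float, cloud_ops_per_s: float,
                   uplink_bytes_per_s: float, round_trip_s: float) -> bool:
    """Offload only when the cloud path is faster end to end."""
    remote = cloud_time(task, cloud_ops_per_s, uplink_bytes_per_s, round_trip_s)
    return remote < local_time(task, robot_ops_per_s)


# Hypothetical numbers: a heavy perception job and a tiny reflex action.
perception = Task("scene_recognition", operations=5e10, payload_bytes=2e6)
reflex = Task("bumper_stop", operations=1e6, payload_bytes=1e3)

for task in (perception, reflex):
    decision = should_offload(task, robot_ops_per_s=1e9, cloud_ops_per_s=1e12,
                              uplink_bytes_per_s=1e6, round_trip_s=0.1)
    print(task.name, "-> cloud" if decision else "-> onboard")
```

With these made-up figures the heavy perception job goes to the cloud while the reflex stays onboard, because the network round trip alone costs more than the tiny local computation. A real system would also have to weigh dropped connections, energy use, and safety.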

We're already seeing some companies offloading an autonomous "brain" to the cloud. And it may not be too long before the same sorts of services used to build mobile digital assistants like Siri, Google's Voice Search, and Cortana are helping physical robots understand the world around them. The result could be a sort of "hive mind," where relatively inexpensive machines with some autonomous systems share a common set of cloud services that act as a group consciousness. Such a setup would allow a group of machines to constantly improve, adjusting operations as more experience is added to the collective memory. Theoretically, bots like this could not only interact with more complex environments, but they could engage people around them in a way that resembles a co-worker more than a calculator.
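The "collective memory" idea can be sketched in a few lines of Python. In this toy version an in-process object stands in for the shared cloud service, and each robot both contributes observations and draws on what the others have reported. The class names, the key, and the numbers are all hypothetical; a real deployment would sit behind a network API and would need to handle conflicting or stale data.

```python
from collections import defaultdict
from statistics import mean


class CollectiveMemory:
    """In-process stand-in for a shared cloud service (hypothetical)."""

    def __init__(self):
        self._observations = defaultdict(list)

    def report(self, key: str, value: float) -> None:
        """Record one robot's measurement under a shared key."""
        self._observations[key].append(value)

    def estimate(self, key: str):
        """Return the fleet-wide average for a key, or None if nobody has data."""
        values = self._observations.get(key)
        return mean(values) if values else None


class Robot:
    """A robot that keeps no knowledge of its own and defers to the shared store."""

    def __init__(self, name: str, memory: CollectiveMemory):
        self.name = name
        self.memory = memory

    def learn(self, key: str, value: float) -> None:
        self.memory.report(key, value)

    def recall(self, key: str):
        return self.memory.estimate(key)


memory = CollectiveMemory()
alpha, beta = Robot("alpha", memory), Robot("beta", memory)

# Two robots measure how hard a particular door must be pushed (made-up values).
alpha.learn("door_3_push_force_newtons", 18.0)
beta.learn("door_3_push_force_newtons", 22.0)

# A third robot that has never seen the door benefits from their experience.
gamma = Robot("gamma", memory)
print(gamma.recall("door_3_push_force_newtons"))
```

The newcomer gets the average of what the other two learned without ever touching the door itself, which is the essence of the shared-experience model described above.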

That's still a ways off, however. So far, industrial robots have in many ways followed a similar course to that of the PC. They've gone from standalone machines to networked devices, first over a proprietary protocol and eventually using open standards. In the 1980s, General Motors developed the first standard protocol for networked robots, called the Manufacturing Automation Protocol (MAP), based on a token bus architecture. MAP's networking would become the IEEE's 802.4 standard. MAP, and later other protocols that used Ethernet networking, allowed robots from different manufacturers to communicate and synchronize operations in real time.
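For readers unfamiliar with token bus networking, the core idea is that stations share one cable but take turns: only the station currently holding the "token" may transmit, and it then hands the token to the next station in a logical ring. The Python sketch below is a deliberately simplified simulation of that turn-taking, not an implementation of MAP or IEEE 802.4. The descending-address ring order, the one-frame-per-turn rule, and the station addresses and messages are all assumptions made for illustration.

```python
from collections import deque


def run_token_bus(stations, queued_frames, rotations=1):
    """Simulate token passing among stations on a shared bus.

    stations:       list of integer station addresses
    queued_frames:  dict mapping address -> list of frames waiting to be sent
    rotations:      how many full trips the token makes around the ring

    Returns the ordered list of (address, frame) transmissions.
    """
    # Assumed ring order for this sketch: highest address first, then wrap.
    ring = sorted(stations, reverse=True)
    queues = {addr: deque(queued_frames.get(addr, [])) for addr in ring}
    log = []
    for _ in range(rotations):
        for holder in ring:
            # Only the token holder may transmit; here it sends at most one frame.
            if queues[holder]:
                log.append((holder, queues[holder].popleft()))
            # The token then passes to the next station in the ring.
    return log


# Hypothetical factory cell: three controllers, two of which have messages queued.
transmissions = run_token_bus(
    stations=[10, 30, 20],
    queued_frames={10: ["weld-done"], 30: ["arm-ready", "arm-moving"]},
    rotations=2,
)
for address, frame in transmissions:
    print(address, frame)
```

Because every station is guaranteed a turn on each rotation, the worst-case wait for the bus is bounded, which is why this style of network appealed to designers of machines that must stay synchronized in real time.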

"Networked robots can use a network infrastructure such as a wireless network or the Internet to talk to each other," said Markus Waibel, co-founder of the autonomous drone manufacturer Verity Studios AG and the non-profit ROBOTS Association. "In Cloud Robotics, robots can not only talk to each other but also to the cloud." And depending on how it's implemented, robots could use both shared cloud resources and a "personal" cloud, Waibel explained—the equivalent of a robot's Google Drive or iCloud.

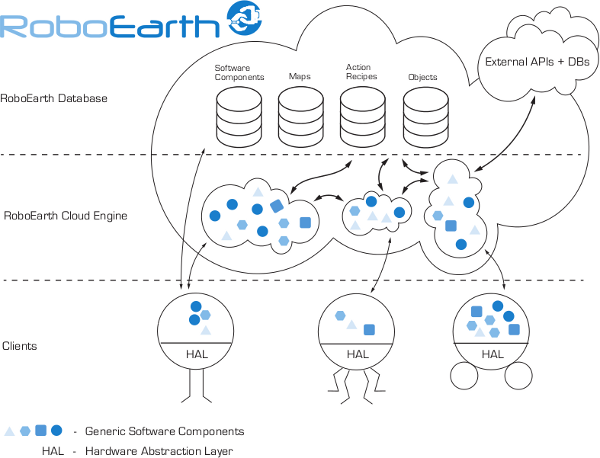

Waibel earned a PhD in robotics from the Swiss Federal Institute of Technology, and he was also part of the four-year RoboEarth project, a European Community-funded program that created an open source platform for cloud robotics. That effort sought to address two ways to use the cloud to improve robotics: first by showing how conditions and learning experienced by one robot can be shared with other robots, then by demonstrating how the cloud "can allow robots to autonomously carry out useful tasks that were not explicitly planned for at design time," according to Waibel. By using a community of robots sharing a common, cloud-based software system, RoboEarth demonstrated that cloud software could dynamically direct robots to carry out tasks that weren't hard-coded into the robots themselves. Some were even tasks that no single bot could have done without human coordination.

The first hurdle—voice

That first RoboEarth goal is particularly important. Just as "Internet of Things" applications allow cloud applications to add functionality to electronic devices and to gather sensor and other data from them, cloud robotics applications can enhance capabilities of robots by processing streams of sensor data.

One of the most desired future capabilities is robots that can perceive the world around them. While something like Google's driverless car has the luxury of carrying large quantities of sensor processing hardware aboard, robots traveling around a refinery, a hospital, or a factory to fetch a specific tool or instrument for a task don't. And in some ways, the perception tasks they have are more complex.

"Forty percent of the human brain is used for perception, and perception is one of the most difficult tasks for an autonomous robot," Gill Pratt, Program Manager of DARPA’s Defense Sciences Office, recently told Robohub. "It’s very difficult to fit a computer with the size, weight, and power that you need to achieve really good perception onto a robot. The computer just gets too large, it consumes too much power, and it weighs too much. However, if you have access to cloud resources with lots of data and lots of computing cycles, they can help move that burden off of the physical robot."

One of the first and most obvious cloud-based tools to inch robots closer to perception is voice recognition. This particular challenge has a seemingly easier solution. The same cloud application programming interfaces used by smartphone applications, gaming platforms, and even "smart" televisions to understand voice commands can be incorporated into robotic systems connected to the Internet. "It doesn't make sense to develop our own voice recognition when there are folks like Nuance that have very robust speech recognition engines that have a cloud API," said Jones. "That's an example of the type of existing cloud service that we could reach out and grab."

From there, things get trickier. It's not only important to recognize what was said; it's just as vital to understand what someone means. Cognitive systems, such as IBM's Watson, could help here. With such technology behind them, robots could understand what's being asked of them based on the context of the request. Alexa Swainson-Barreveld, IBM's Vice President for Watson products and solutions, told Ars that IBM has been partnering with SoftBank on precisely that sort of cognitive capability for robots that act as human assistants (particularly in geriatric care).

"We're exploring opportunities where robots have cognitive capabilities to support people who are aging," Swainson-Barreveld explained. "The piece we've been working on in our labs is tightening the interaction with people—understanding human speech and interacting directly back with you, being able to respond back in a way that's appropriate. We're also starting to think about cognitive abilities in robots more broadly—how robots sense their location, where a sound is coming from, and things like that." These pieces, she said, are "the difference between working with an object that is frankly inanimate and working with a machine that is a partner."
