Self-service data preparation: Who's your best provider?

It seems 2015 is shaping up as the breakout year for self-service data-preparation capabilities. Thus far we've seen announcements from Informatica (Informatica Rev), Qlik (Smart Data Load) and independents including Paxata, Trifacta and Tamr. The latest to join the bandwagon are SnapLogic and Logi Analytics.

As options proliferate, the question for buyers is: Which type of vendor is your best choice for self-service data preparation?

The three vendor types bringing self-service data prep to market are BI and analytics vendors (like Qlik and Logi), integration vendors (like Informatica and SnapLogic) and stand-alone vendors (like Paxata and Trifacta) that are most often associated with big data work.

Logi Analytics fits the BI-and-analytics-vendor pattern. It responded to the self-service data exploration and data visualization craze by introducing Logi Vision in early 2014. Last week it added Logi DataHub, designed to give data professionals as well as analyst types a logical data view for self-service data prep, data access and data enrichment. The short list of integration-ready sources includes cloud-app sources like Salesforce and Marketo, cloud-platform sources from the likes of Amazon and Google, and on-premises sources including HP Vertica, PostgreSQL and ODBC-compliant databases.

Dedicated data-integration vendors have always prided themselves on broader, deeper and generally more sophisticated capabilities than you'd typically get in an optional module from a BI or analytics vendor. These specialists span service-bus-style application integration and ETL-style data integration, and most have developed robust capabilities for integrating cloud-based apps and data sources.

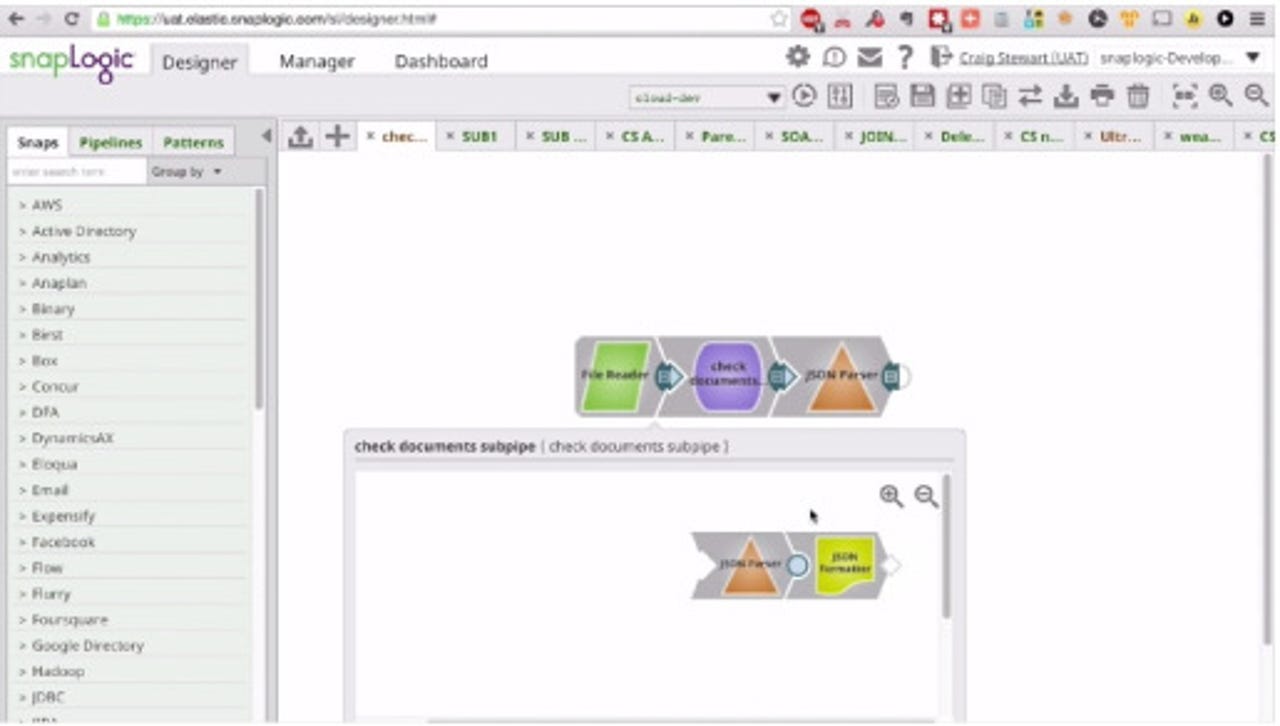

SnapLogic joined the self-service trend last week with its Summer 2015 release of the cloud-based SnapLogic Elastic Integration Platform. SnapLogic takes a componentized approach in which data experts handle the messy details of data access, transformation and processing by preconfiguring reusable Snaps and sub-pipelines. Non-experts can then assemble new data pipelines by snapping these components together. New features supporting self-service include a sub-pipeline preview, which lets admins and non-integration experts drill down and see the Snaps and processing steps within a reusable sub-pipeline.

Safeguards built into SnapLogic's self-service approach include a Lifecycle Management feature that lets the experts create, compare and test Snaps and sub-pipelines before sharing them with business users. They can also test pipelines developed by the non-experts before moving them into production. A schedule task view is designed to help admins coordinate integration workloads, spot potential performance bottlenecks and schedule lower-priority tasks for off-peak periods.

MyPOV On Self-Service Data Prep

When it comes to choosing among these vendor types, I expect the prevailing selection patterns will live on. Companies focused on BI and analytics will first turn to their BI and analytics vendors to meet their data-integration needs. If needs span application integration and data integration, customers very likely already work with a dedicated integration vendor (or a suite from the likes of IBM or Oracle). There's good reason to leverage these products and the expertise of your data-integration experts.

Beyond this general rule of thumb, I'd investigate the relative user-friendliness of the candidates you're considering; some are "easy to use" for business users while others are really geared to data-savvy analyst types.

I favor self-service offerings that give data-integration experts an oversight role within the self-service workflow. The danger in ungoverned self-service is that users who aren't data-savvy will mash up and interpret data in inconsistent ways. Look for features that let IT enforce consistent data definitions and data models, providing guardrails around the use of data so that non-experts don't go off track.

As for the third set of vendors, the stand-alone self-service players focused on big data, they've been getting a lot of attention this year, with Trifacta and Paxata generating the most buzz. For practitioners who are in the thick of big data analysis, these specialists are filling a void the platform players have yet to address.

As the big data world matures, I suspect these niche players will be good candidates for acquisition. Alteryx and Datameer are two vendors often tapped for self-service data prep, but in both cases these features are part of broader analytics offerings. It strikes me that self-service data prep is a feature we're going to see inside many products and platforms.