We have been pioneering a new solution that is the ultimate virtualization and container setup for development servers and labs. We have used Proxmox VE for many years as a stable, Debian Linux-based KVM virtualization platform. Aside from virtualization, Proxmox VE has features such as high-availability clustering, Ceph storage, and ZFS storage built in. While enterprises may love VMware ESXi, Proxmox VE is a great open alternative that saves an enormous amount on license costs.

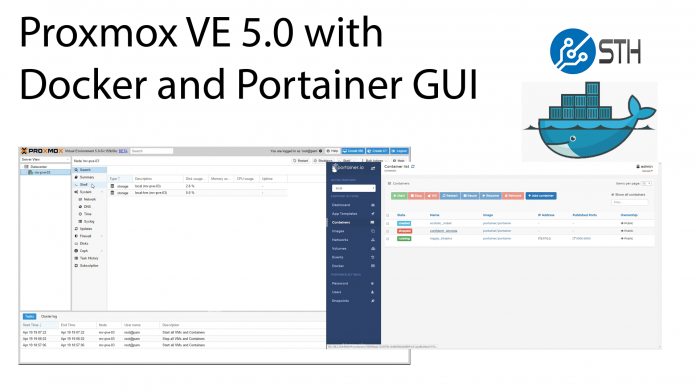

Given the market Proxmox VE is targeted at, it adopted LXC as its container solution. We have many readers who love Proxmox VE for its power and simplicity but want to add Docker containers given their popularity. With the next-generation Debian Stretch-based Proxmox VE 5.0 coming, we wanted to do a how-to guide on getting everything set up so that you can have Proxmox plus Docker with a Portainer web GUI to manage everything.

If you want to discuss this here is the original thread.

Proxmox VE + Docker + Portainer GUI How-to Video

Here is a video guide showing the setup from installation through starting a Monero Mining container via the Portainer web GUI.

We do want to caution that you may want to change the directories and users involved, and we do not recommend this for production. As a developer system, it works great. As described here, it is a security nightmare.

Proxmox VE + Docker + Portainer.io GUI Steps and Commands

Video coming soon but I wanted to document the steps:

1. Install Proxmox VE 5.0

2. Make the following sources adjustments so you can update:

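Out of the box, updates fail without a subscription because only the enterprise repository is configured. To fix this, first add the no-subscription source:

# nano /etc/apt/sources.list

Add this line:

deb http://download.proxmox.com/debian stretch pve-no-subscription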

Then remove the enterprise source:

# nano /etc/apt/sources.list.d/pve-enterprise.list

Comment out this line by adding a # symbol in front of it:

# deb https://enterprise.proxmox.com/debian stretch pve-enterprise

apt-get update && apt-get dist-upgrade -y
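The two file edits in step 2 can also be scripted instead of made by hand in nano. The following is a minimal sketch, not part of the original steps: the function name `switch_pve_repos` and its optional directory argument are illustrative, and it assumes GNU sed as shipped with Debian.

```shell
#!/bin/sh
# Sketch: apply the step 2 repository changes without opening an editor.
# switch_pve_repos is a hypothetical helper; the optional argument lets you
# point it at a copy of /etc/apt to try it out first.
switch_pve_repos() {
    apt_dir="${1:-/etc/apt}"
    line='deb http://download.proxmox.com/debian stretch pve-no-subscription'

    # Append the no-subscription source only if it is not already listed.
    grep -qxF "$line" "$apt_dir/sources.list" 2>/dev/null ||
        echo "$line" >> "$apt_dir/sources.list"

    # Comment out the enterprise source if it is still active.
    sed -i 's|^deb https://enterprise\.proxmox\.com|# &|' \
        "$apt_dir/sources.list.d/pve-enterprise.list"
}
```

Calling `switch_pve_repos` as root with no argument edits the real files under /etc/apt; run it before the apt-get update and dist-upgrade command above. It is safe to call more than once.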

3. Reboot

4. Install docker-ce:

apt-get install -y apt-transport-https ca-certificates curl gnupg2 software-properties-common

curl -fsSL https://download.docker.com/linux/debian/gpg | apt-key add -

apt-key fingerprint 0EBFCD88

add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/debian $(lsb_release -cs) stable"

apt-get update && apt-get install docker-ce -y

You should now be able to do docker ps and see no containers are running.

5. Install Portainer with a persistent container

Just for ease of getting started, we are going to make a directory on the boot drive. You should move this to other storage, but this makes it simple for a guide:

mkdir -p /root/portainer/data

Install Portainer for a Docker WebGUI:

docker run -d -p 9000:9000 -v /root/portainer/data:/data -v /var/run/docker.sock:/var/run/docker.sock portainer/portainer

Again, make the directory on ZFS storage or similar, not in the root directory.

Wait about 15-30 seconds after you see Portainer start (you can check “docker ps” to see status.)
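Instead of waiting a fixed 15-30 seconds, you can poll until Portainer answers. This is a minimal sketch: `wait_for` is a hypothetical helper, and the usage line assumes curl is installed (it was in step 4) and that Portainer listens on port 9000 as in the docker run command above.

```shell
#!/bin/sh
# wait_for CMD [TRIES]: run CMD once per second until it succeeds.
# Returns 0 as soon as CMD succeeds, 1 if it never does within TRIES attempts.
wait_for() {
    cmd="$1"
    tries="${2:-30}"
    i=0
    while [ "$i" -lt "$tries" ]; do
        if sh -c "$cmd" >/dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}
```

For example, `wait_for 'curl -fsS http://localhost:9000/' 30 && echo "Portainer is up"` gives up after roughly 30 seconds if the web GUI never responds.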

6. Your login URLs will be on the following ports:

Proxmox GUI: https://<serverip>:8006

Portainer GUI: http://<serverip>:9000

At this point, you now have a GUI for everything you might want.

7. You may also configure Docker to start on boot:

systemctl enable docker

Update for those using a ZFS rpool for Proxmox VE Installation

If you are following this guide and you installed Proxmox VE on a ZFS rpool, some things have changed (as of July 2018). Docker will default to using ZFS as the storage driver, and the system will not boot properly after you make a container. There is an easy fix: change the Docker ZFS path. We have a quick guide and video here: Setup Docker on Proxmox VE Using ZFS Storage. The steps should take under 1 minute. Those steps are equally useful if you want to change the ZFS storage pool for Docker storage.

I would love a more detailed description of a more secure setup. Are you planning to make a more elaborate one in the near future?

Thanks for the guide. I installed Docker and Portainer on top of Proxmox 4.4. It required one small change in sources.list. Rather than pointing to the “Stretch” repo, I pointed to the “Jessie” repo with the following: deb http://download.proxmox.com/debian jessie pve-no-subscription

2nd vote for hardening this, if possible.

In the meantime looking at Joyent Triton. Need something secure.

Update: Triton is too big. Is there something inherently insecure about this, or can we just use best practices?

If I understand correctly, the security issue is that Docker runs as root, so an attack on a container could potentially escalate its way to the host, giving the attacker root access to your Proxmox OS.

I completed this guide and was content with having Docker working inside Proxmox 4. My issue is that I lost the network on the Proxmox KVMs I created, since Docker took over the 172.x.x.x range. I am new to Linux; what is the easy fix for this? I was leaning toward creating another virtual network, but I am not sure how to hook that up to my existing KVMs.

Has anyone run into issues where the bridge network doesn’t work for VMs with this setup? I did a clean install and none of my VMs (all created after Docker setup) have internet access unless I switch them to NAT. I’m also seeing a lot of errors in the syslog about veth devices.

Aug 25 08:51:04 pve kernel: br-a3505c2f1aea: port 1(veth0c726fc) entered disabled state

Aug 25 08:51:04 pve kernel: device veth0c726fc left promiscuous mode

Aug 25 08:51:04 pve kernel: br-a3505c2f1aea: port 1(veth0c726fc) entered disabled state

Aug 25 08:51:04 pve systemd-udevd[22640]: Could not generate persistent MAC address for veth2fcf5b9: No such file or directory

Aug 25 08:51:04 pve kernel: br-a3505c2f1aea: port 1(veth2fcf5b9) entered blocking state

Aug 25 08:51:04 pve kernel: br-a3505c2f1aea: port 1(veth2fcf5b9) entered disabled state

Aug 25 08:51:04 pve kernel: device veth2fcf5b9 entered promiscuous mode

Aug 25 08:51:04 pve kernel: IPv6: ADDRCONF(NETDEV_UP): veth2fcf5b9: link is not ready

Aug 25 08:51:04 pve kernel: br-a3505c2f1aea: port 1(veth2fcf5b9) entered blocking state

Aug 25 08:51:04 pve kernel: br-a3505c2f1aea: port 1(veth2fcf5b9) entered forwarding state

Aug 25 08:51:04 pve systemd-udevd[22639]: Could not generate persistent MAC address for veth59ae241: No such file or directory

Aug 25 08:51:04 pve kernel: eth0: renamed from veth59ae241

Aug 25 08:51:04 pve kernel: IPv6: ADDRCONF(NETDEV_CHANGE): veth2fcf5b9: link becomes ready

Aug 25 08:51:13 pve kernel: br-a3505c2f1aea: port 1(veth2fcf5b9) entered disabled state

Aug 25 08:51:13 pve kernel: veth59ae241: renamed from eth0

Aug 25 08:51:13 pve kernel: br-a3505c2f1aea: port 1(veth2fcf5b9) entered disabled state

Aug 25 08:51:13 pve kernel: device veth2fcf5b9 left promiscuous mode

….

Aug 25 08:54:54 pve kernel: br-a3505c2f1aea: port 1(vethe8aa3a1) entered blocking state

Aug 25 08:54:54 pve kernel: br-a3505c2f1aea: port 1(vethe8aa3a1) entered disabled state

Hi, great write up, however I have an issue which is caused with ZFS. Maybe you can assist.

So I have ProxMox 5.1 installed and installed Docker using your instructions above.

I’m also running everything on ZFS, which is where my issue comes in.

I create a Docker instance. Busybox, Debian, Ubuntu, doesn’t matter what it is.

I create a Container in ProxMox.

I try to rm the Docker instance after doing a stop and get the below.

root@nexus:/root# docker rm d109ad6b30f2

Error response from daemon: driver "zfs" failed to remove root filesystem for d109ad6b30f266d756be8c78f279bc9423be87e17952abc22f2d9569c9ca607f: exit status 1: "/sbin/zfs zfs destroy -r repo1/Virtualization/docker/5cfd29753e8071ee499877a21440d6b80aa81d8d3ad888049a8b5c54951ee3ce" => cannot destroy 'repo1/Virtualization/docker/5cfd29753e8071ee499877a21440d6b80aa81d8d3ad888049a8b5c54951ee3ce': dataset is busy

After a bunch of looking, I see that the [lxc monitor] process, thanks to the container I just started, somehow has this file system locked.

See below.

root@nexus:/root# grep 5cfd29753e8071ee499877a21440d6b80aa81d8d3ad888049a8b5c54951ee3ce /proc/*/mounts

/proc/27287/mounts:repo1/Virtualization/docker/5cfd29753e8071ee499877a21440d6b80aa81d8d3ad888049a8b5c54951ee3ce /repo1/Virtualization/docker/docker/zfs/graph/5cfd29753e8071ee499877a21440d6b80aa81d8d3ad888049a8b5c54951ee3ce zfs rw,relatime,xattr,noacl 0 0

And process belongs to.

root@nexus:~# ps ax | grep 27287 | grep -v grep

27287 ? Ss 0:00 [lxc monitor] /var/lib/lxc 201

So any clue how to fix this? Essentially, I have to shut down any containers I started after creating the Docker instance so that the lxc monitor process goes away. Then I can safely docker rm the container and start the LXC containers back up in ProxMox.

Just a pain in the butt. I can’t find anything about how to make lxc monitor not pull Docker mounts into its /proc/*/mounts file …

Any thoughts would be greatly appreciated.

How can you start portainer using SSL? Can you use the proxmox SSL cert? It’s on the same domain. I installed a Let’s Encrypt SSL cert in proxmox that works and I have for the portainer start command:

docker run -d -p 443:9000 --name portainer -v ~/local-certs:/certs portainer/portainer --ssl --sslcert /etc/pve/pve-root-ca.pem --sslkey /etc/pve/pve-www.key /rpool/ROOT/portainer/data:/data -v /var/run/docker.sock:/var/run/docker.sock portainer/portainer

It doesn’t work. Does anyone have any thoughts? Maybe ServeTheHome could do an SSL addition to this awesome tutorial?
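One likely problem with the command above is ordering and paths: everything after the image name is passed to Portainer itself, so the -v options that follow it never reach Docker, and --sslcert and --sslkey must name paths inside the container rather than on the host. A minimal sketch follows, assuming a Portainer 1.x image that accepts the --ssl, --sslcert and --sslkey flags used above, and assuming a certificate and key valid for the host name have been copied into a host directory as portainer.crt and portainer.key (hypothetical file names). The `start_portainer_tls` helper and the `DOCKER` override are illustrative only.

```shell
#!/bin/sh
# start_portainer_tls CERT_DIR: start Portainer with TLS on host port 443.
# CERT_DIR is a host directory holding portainer.crt and portainer.key
# (assumed names). DOCKER can be overridden, e.g. DOCKER=echo for a dry run.
start_portainer_tls() {
    cert_dir="$1"
    # Docker options go before the image name, Portainer flags after it.
    ${DOCKER:-docker} run -d -p 443:9000 --name portainer \
        -v "$cert_dir":/certs:ro \
        -v /root/portainer/data:/data \
        -v /var/run/docker.sock:/var/run/docker.sock \
        portainer/portainer \
        --ssl --sslcert /certs/portainer.crt --sslkey /certs/portainer.key
}
```

Setting `DOCKER=echo` before calling `start_portainer_tls ~/local-certs` prints the arguments instead of starting a container, which is a quick way to check the ordering.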

Hello, very nice article. It worked perfectly, except that the network segment it is running on (172.17) is not my network segment. How do I expose the containers to my network and give them IPs? I do not have a DHCP server on this network.

Thanks,

Michael

Hi Michael – perhaps that is a good question for our forums https://forums.servethehome.com

This works great. FYI because of the multiple bridge networks I had to enable STP on my PVE bridge in order to get external connectivity to my LXC boxes.

I have just installed Proxmox 5 on my laptop, because I was excited to have KVM, LXC and Docker all on the same box. It has been a long time since I have made use of LVM, and I don’t want to screw up a process that works nicely.

I see the directive to use ZFS or, in my case, just LVM. I see that I have three LVs: root, data, and swap. How do I point to the data LV? I don’t see it mounted… For that matter, how do I point my KVM or LXC containers to use the data LV?

K. Callis – great idea for a follow-up article. In the meantime, go to the web UI. Click on Datacenter then Storage. Use the Add dropdown.

I see in the instructions to create /root/portainer/data. Again, I am confused about how to make use of the data partition. Is there a way to use the data partition to save containers in that location? Currently, my logical drives look like this (the standard layout):

root@pve:~# lvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Conve

data pve twi-a-tz-- 812.26g 0.00 0.42

root pve -wi-ao---- 96.00g

swap pve -wi-ao---- 7.00g

Looking at your last comment, I went to the Datacenter/Storage, and I see LVM-Thin, but there is no path listed and I am sure that is where my KVM images should reside. Is there a way to create a directory for the portainer directory on the data partition as well as opposed to /root/portainer/data? Also, can I use the data volume for lxc and docker containers as well?

What is the issue with running as root if you have a strong password and Google 2SV?

Finally I got my head wrapped around LXC and LVM-thin storage. What is the trick to have Docker use LVM-thin as opposed to root disk space?

In the above example, I am supposed to use /root/portainer/data to store my image. I would rather have the image live on the LVM-thin data partition. So what is the method to put an image on LVM-thin as opposed to the root partition?

Installing docker-ce killed my lxc containers’ network connectivity (my pihole is one, so my network screeched to a halt). Someone above mentioned turning on stp for the bridged network but that didn’t seem to have an effect. I reversed it by uninstalling docker-ce and rebooting. Maybe I will have to just use a VM docker host.

Same here. No network connectivity for my lxc containers and virtual machines in proxmox. turning on stp for the proxmox bridge didn’t help. any advice?

I am running a slightly different setup right now. My Proxmox cluster consists of 3 Machines using CEPH as distributed storage. There are 2 VLANS on the cluster, a public one to the internet and a private one for backend services. I am using Foreman to provision/manage VMs via puppet.

On every physical node, there is a VM running CentOS+Docker in swarm mode. These containers, as well as some backend database VMs, are running on the internal VLAN only. There are two other VMs (hardened, stripped-down nginx+haproxy) serving as frontends. These are dual-homed in the internal and public networks, exposing the web services to the world.

Using rexray/ceph integration, Docker can make use of the Ceph cluster as persistent storage, no matter where the container is currently running. As Docker is running in VMs which are not publicly accessible, I believe I have minimized the risk if a container or a Docker node gets compromised.

Two years later and this is still a great writeup.

Was wondering if you would do another version for 2019 with lessons learned over the past couple of years?

Over the last couple of months this has turned my NUC from a clever idea to an essential part of my homelab.

david – on the docket! We are a bit backed up at the moment but the hardware for the next iteration is ready to go.

What are the trade-offs between this and running docker in a VM?

Has anyone solved the issue with docker breaking network access for all LXC and QEMU vms on proxmox?

I’ve disabled the docker service for now and confirmed that all is well until the docker service is started.

I get that it’s likely related to docker messing with iptables, but I’m not willing to manually manage all of docker’s iptables madness.

Tons of discussion above and elsewhere on the net, but I can’t find any solutions. Any ideas?!?

Did you ever find a fix for this, I think I am running into this same issue.

FIX FOR VM’S WITH DOCKER!!!!

simple solution… edit /etc/sysctl.conf and enable forwarding… reboot and the world is a good place to live {WARNING: you need to understand any security implications from this setting}

Details backing this solution.

lol this is where my LOVE of Debian comes from… i always love reading issues and how they get resolved…

https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=865975
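The sysctl change described above can be sketched as follows. This assumes that "forwarding" means IPv4 forwarding (the net.ipv4.ip_forward key); `enable_ip_forward` is a hypothetical helper, and its optional argument exists only so it can be tried on a copy of the file first.

```shell
#!/bin/sh
# enable_ip_forward [FILE]: make sure FILE (default /etc/sysctl.conf)
# contains an active "net.ipv4.ip_forward=1" line.
enable_ip_forward() {
    conf="${1:-/etc/sysctl.conf}"
    key='net\.ipv4\.ip_forward'

    if grep -q "^#\{0,1\}[[:space:]]*$key[[:space:]]*=" "$conf"; then
        # Uncomment or overwrite the existing setting.
        sed -i "s|^#\{0,1\}[[:space:]]*$key[[:space:]]*=.*|net.ipv4.ip_forward=1|" "$conf"
    else
        # No such line yet, so append one.
        echo 'net.ipv4.ip_forward=1' >> "$conf"
    fi
}
```

Run `enable_ip_forward` as root and then reboot as suggested above. Afterwards, `sysctl net.ipv4.ip_forward` should report a value of 1.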

A direct firewall solution is less clean but might be better from a security standpoint.

https://bbs.archlinux.org/viewtopic.php?id=233727
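A firewall-level alternative could look like the sketch below. It is not taken from the linked thread. It assumes the problem is that bridged VM and container traffic is being dropped in the iptables FORWARD chain, and that the Proxmox bridge is named vmbr0 (the installer default). `allow_bridge_forward` and the `IPTABLES` override are hypothetical.

```shell
#!/bin/sh
# allow_bridge_forward [BRIDGE]: accept traffic that enters and leaves
# through the same bridge (default vmbr0) at the top of the FORWARD chain.
# IPTABLES can be overridden, e.g. IPTABLES=echo for a dry run.
allow_bridge_forward() {
    bridge="${1:-vmbr0}"
    ${IPTABLES:-iptables} -I FORWARD -i "$bridge" -o "$bridge" -j ACCEPT
}
```

A rule added this way is not persistent and is lost on reboot, so it is mainly useful for confirming the diagnosis before making a permanent firewall change.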

I would like to roll back and clean up my system, but I’m not sure how to do that.

Can anyone please help me roll back the Docker and Portainer parts specifically?

So I’m talking about the rollback commands required for steps 4 and 5 above.

I would stop short of installing docker-ce and Portainer on the host. I would instead create a VM on each machine with RancherOS on it. This is a very small version of Linux with everything running in Docker, including the CLI. Then in Proxmox create a backup or snapshot. Then run rancher/rancher on one of the nodes to create the Docker cluster. This will give you a docker command to add nodes to your Docker cluster. Then in Proxmox create another backup or snapshot. At this point, you have a system that can be cleaned up fairly simply and is easy to manage.

Hi, any plans to update this guide?