AI

A Linux Foundation offshoot called the LF AI & Data Foundation is teaming up with a host of technology industry giants to launch a new initiative that aims to drive open-source innovation in data and artificial intelligence infrastructure.

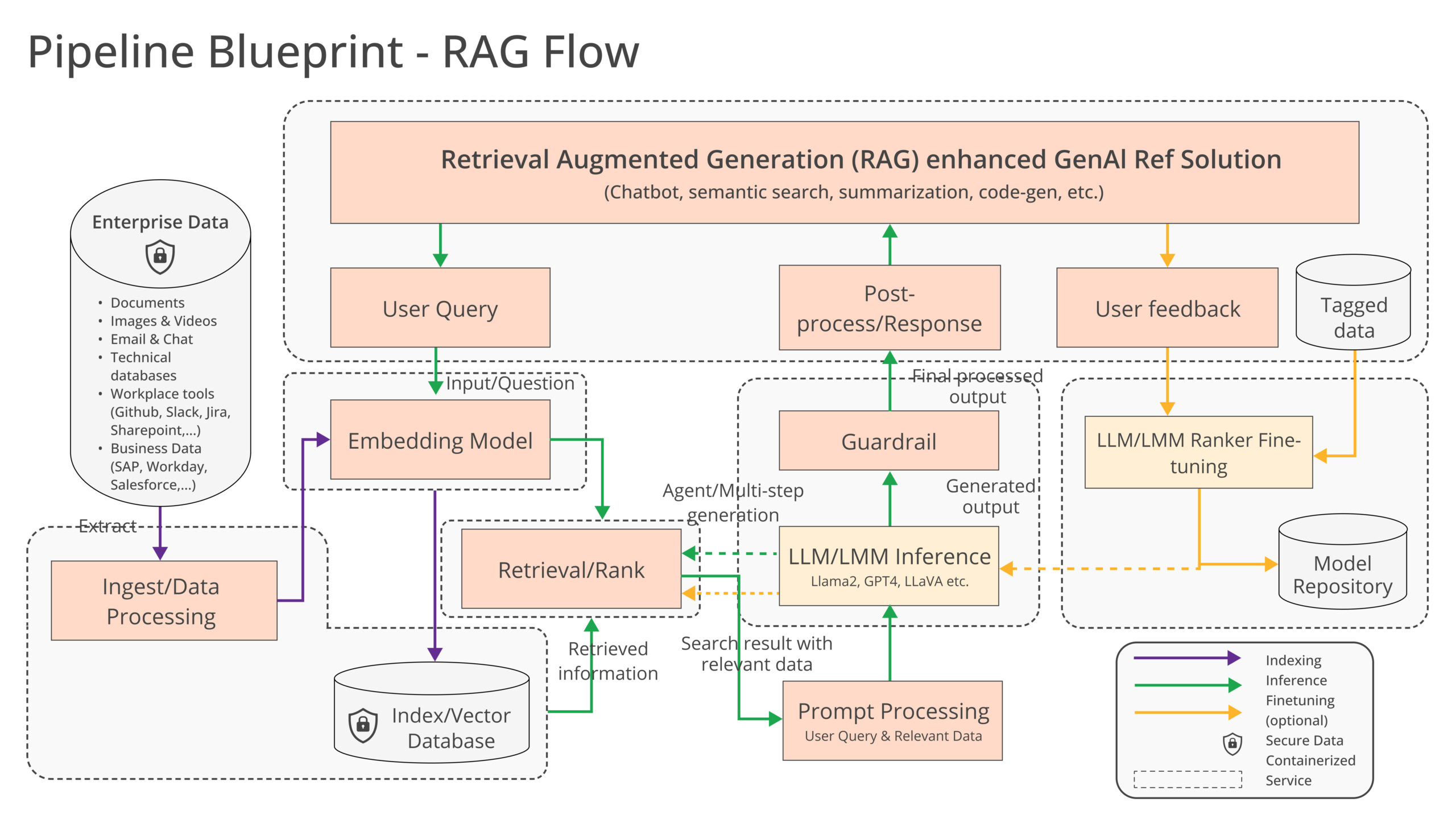

Announced today, the new initiative is called the Open Platform for Enterprise AI, or OPEA, and it’s focused on building open standards for the data infrastructure needed to run enterprise AI applications. One of its main interests is a technique known as “retrieval augmented generation,” or RAG, which OPEA says has the capacity to “unlock significant value from existing data repositories.”

The LF AI & Data Foundation was created by the Linux Foundation in 2018 with a mission to facilitate the development of vendor-neutral, open-source AI and data technologies. The OPEA project fits into that narrative, as it intends to pave the way for the creation of hardened and scalable generative AI systems that will “harness the best open-source innovation” from across the big data tech ecosystem.

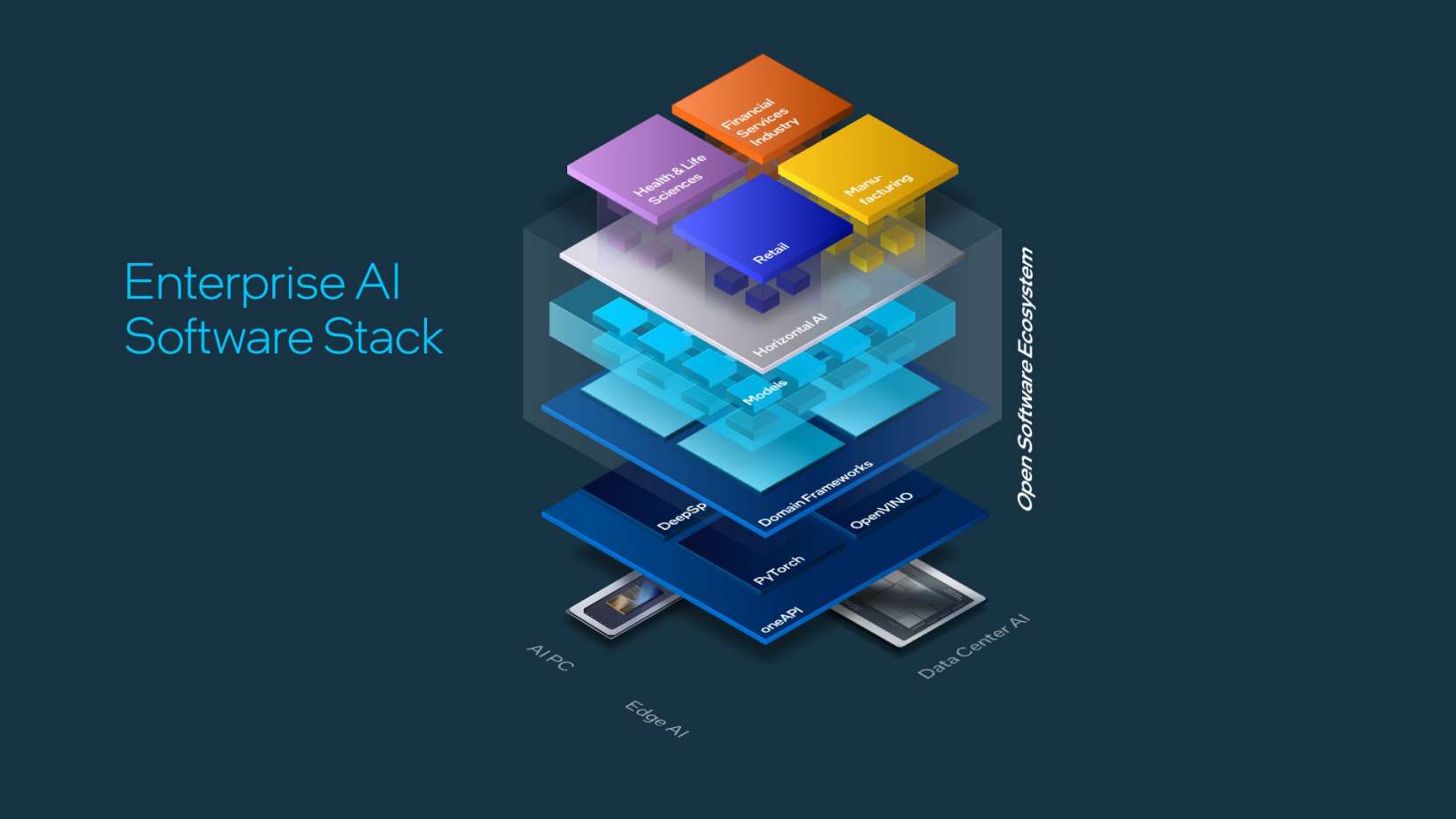

LF AI & Data Executive Director Ibrahim Haddad said OPEA’s main focus will be on creating a detailed and composable framework for AI technology stacks. “This initiative is a testament to our mission to drive open-source innovation and collaboration within the AI and data communities, under a neutral and open governance model,” he added.

One of OPEA’s major collaborators is Intel Corp., which has a keen interest in building the framework to support RAG workloads. Others include Cloudera Inc., IBM Corp.-owned Red Hat Inc., Hugging Face Inc., Domino Data Lab Inc., MariaDB Corp., SAS Institute Inc. and VMware Inc.

Image: OPEA

OPEA’s interest in RAG is likely to get some traction, as the technique is quickly becoming vital for enterprises to take advantage of proprietary large language models. For enterprises, AI adoption is not as simple as just plugging in a generative AI chatbot such as ChatGPT and letting loose.

That’s because the knowledge of generative AI models is limited to the data they were initially trained on. Such models lack any knowledge of the intricacies of the businesses that want to put them to use, and this is where RAG can help.

RAG lets companies expand the knowledge of generative AI models by allowing them to tap into external datasets, such as a company’s own proprietary data. Essentially, it provides a way for companies to teach AI about their specific business operations, enhancing its knowledge base so models can carry out more specific tasks or provide more on-point responses to employees’ questions and prompts.
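To make the idea concrete, here is a minimal sketch of the retrieval half of RAG, written for illustration only. It is not OPEA's framework or any vendor's implementation. It assumes a toy word-overlap similarity in place of the learned embeddings and vector databases that real pipelines typically use, and the function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for a company's proprietary documents.
DOCS = [
    "Expense reports must be filed within 30 days of purchase.",
    "The cafeteria is open from 8 a.m. to 3 p.m. on weekdays.",
    "New laptops are issued by the IT help desk on the second floor.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question.

    Assumption: word overlap is a toy substitute for embedding search.
    """
    q = Counter(tokenize(question))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, documents, k=1):
    """Place the retrieved text ahead of the question for the model to use."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("When must I file an expense report?", DOCS))
```

The resulting prompt would then be sent to a generative AI model of the company's choosing. The model itself is unchanged. It simply sees the relevant company data alongside the question.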

Intel explained the need for a better RAG stack in a separate announcement, saying that there is no industry standard around how these techniques can be applied. “Enterprises are challenged with a do-it-yourself approach [to RAG] because there are no de facto standards across components that allow enterprises to choose and deploy RAG solutions that are open and interoperable and that help them quickly get to market,” the chipmaker said. “OPEA intends to address these issues by collaborating with the industry to standardize components, including frameworks, architecture blueprints and reference solutions.”

Constellation Research Inc. Vice President and Principal Analyst Andy Thurai elaborated on the fragmented nature of generative AI data pipelines, saying RAG frameworks are an exceptional case. “Given how new RAG is, it’s an area that numerous players are trying to cater to, with startups such as Verba, Unstructured and Nuem being some of the better known,” Thurai said. “The generative AI pipeline tooling is all over the place too, with systems and frameworks such as LangChain, various vector databases and vector stores.”

The analyst said this fragmentation makes the task of building an open-source RAG pipeline very complex and time-consuming, which entirely defeats the purpose of producing new AI models quickly.

OPEA’s long list of backers should ensure it makes a very strong push toward building a more unified RAG framework to ease deployment headaches, but Thurai said he needs to see the full details of what it’s offering before he can declare it a market winner. “Hopefully it will build a true open-source platform and not something that’s dependent on the proprietary, closed-source components of some of the companies involved in this endeavor,” Thurai added. “For example, Intel has already released an architecture reference diagram for an AI code generation tool, but it’s built entirely on Intel’s processors and hardware, which seems to defeat the purpose of this initiative.”

OPEA has already created a GitHub repository that reveals the bare bones of its nascent, open and composable RAG framework. In it, the project proposes a rubric that will grade generative AI systems according to their performance, features, trustworthiness and “enterprise-grade readiness.” OPEA relies on industry benchmarks to assess each AI system’s performance, while the “features” grade is really more of an appraisal of the system’s interoperability, deployment options and ease of use. In terms of trustworthiness, OPEA looks at each model’s robustness and the quality of its outputs, while enterprise readiness specifies what’s needed to get the system up and running.
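The four dimensions of the rubric can be pictured as a simple record, one field per grade. The sketch below is purely illustrative: OPEA has not been quoted here on how grades are scored or combined, so the 0-to-1 scale, the `RubricGrade` class and the `weakest_dimension` helper are all assumptions made for the example, not part of OPEA's repository.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class RubricGrade:
    """Hypothetical container for the four rubric dimensions.

    Assumption: each grade is a score from 0.0 (worst) to 1.0 (best).
    """

    performance: float           # measured against industry benchmarks
    features: float              # interoperability, deployment options, ease of use
    trustworthiness: float       # robustness and quality of outputs
    enterprise_readiness: float  # what's needed to get the system running

    def __post_init__(self):
        # Reject scores outside the assumed 0-to-1 scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{f.name} must be between 0 and 1, got {value}")

    def weakest_dimension(self):
        """Name the lowest-scoring dimension, where attention is needed first."""
        return min(fields(self), key=lambda f: getattr(self, f.name)).name


if __name__ == "__main__":
    grade = RubricGrade(
        performance=0.8,
        features=0.6,
        trustworthiness=0.9,
        enterprise_readiness=0.4,
    )
    print(grade.weakest_dimension())
```

Keeping the dimensions separate, rather than folding them into a single number, reflects the way the rubric is described: a system can do well on benchmarks and still fall short on enterprise readiness.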

Image: OPEA

According to Intel’s director of open-source strategy Rachel Roumeliotis, OPEA intends to work with the open-source community to perform tests based on the above rubric, and also to assess and grade generative AI deployments on an ad hoc basis when requested.

OPEA is less clear on its other plans, but the initiative seems to have quite a far-reaching scope. For instance, Haddad hinted at “optimized support” for AI toolchains and compilers in order to give companies the option to run AI workloads on different hardware platforms.

He also floated the idea of “open model development,” so we might see the release of collaboratively developed generative AI models similar to Meta Platforms Inc.’s Llama 2. Intel has already contributed a number of reference implementations for a generative AI chatbot, document summarizer and code generator.

The founding members of OPEA have all shown a strong desire to become players in the emerging AI industry, but to date they have nowhere near the same kind of influence as the major generative AI model developers such as OpenAI, Microsoft Corp., Google LLC, Anthropic PBC and Cohere Inc. As such, the initiative seems to be an effort to increase the relevance of its members by providing the much-needed foundations on which enterprise AI will be built.

Cloudera, for instance, recently announced various partnerships as part of an effort to build an “AI ecosystem” in the cloud, while Domino provides a suite of tools for building and auditing AI models. Moreover, VMware has shown an interest in the area of “Private AI” for enterprises. By joining forces, these companies may hope to become essential parts of the enterprise AI stack.

This kind of collaborative approach has obvious benefits, as OPEA’s members can combine their resources and design their platforms and technologies to be interoperable with each other. Then they can create reference frameworks that simplify the deployment of more composable AI frameworks, enhancing the appeal of their AI offerings.
