It’s been a tough year or so for many SEO companies, particularly those that break the rules by using black hat techniques, especially where links are concerned. I’ve always been of the opinion that quality wins out: while you can tweak for SEO, chasing links through poor content is always going to raise some Google eyebrows or, worse, earn you a manual penalty.

Google’s major algorithm changes of recent years, particularly those concerning content, have seen many companies’ sites all but disappear from the index. Why? Because they have not followed the rules, either deliberately or accidentally.

Panda, Penguin and Hummingbird, Google’s best-known recent algorithm updates, have changed the game. They demand high quality in both site architecture and content. What’s more, at the first sniff of purchased links, a site is highly likely to be de-indexed. Sometimes this is the fault of an agency that takes a less-than-ethical approach to SEO. In other cases, it’s down to a site owner’s lack of knowledge or simple mistakes.

White-hat SEO is the only approach to take unless you want to risk a penalty. This applies not just to link building but to other SEO techniques too, so steer clear of black hat tactics such as:

- Cloaking/Gateway pages

- Spun content

- Purchasing links

- Duplicate content

- Keyword stuffing

Many webmasters still use cloaking and gateway pages as a means to climb the SERPs, but it’s really not advisable. Don’t just take my word for it: Google’s Matt Cutts has repeatedly warned against cloaking.

Link building

In January 2014, Matt Cutts also called time on guest posting as a means of building backlinks for SEO:

Okay, I’m calling it: if you’re using guest blogging as a way to gain links in 2014, you should probably stop. Why? Because over time it’s become a more and more spammy practice, and if you’re doing a lot of guest blogging then you’re hanging out with really bad company.

So while guest posting can still be used for content promotion and public relations, it can no longer be used purely to build links. The spammers ruined it for everybody.

If you get a penalty

According to Google, you could be at risk of a penalty if your site:

[D]oes not meet the quality standards necessary to assign accurate PageRank. [Google] cannot comment on the individual reasons your page was removed. However, certain actions such as cloaking, writing text in such a way that it can be seen by search engines but not by users, or setting up pages/links with the sole purpose of fooling search engines may result in permanent removal from [the] index.

A manual penalty will show up in your Webmaster Tools account. Depending on its cause, you can clean up the site and resubmit it to Google. This isn’t necessarily an easy job, though, and it’s important that you get it right. Given that shady links are a very common cause of penalties, let’s look at how you can approach them.

First of all, Google recommends that you use Lynx, a text-based browser that allows you to view a site in the same way that a search bot does. However, do bear in mind that you won’t be able to view scripts, session IDs, Flash and so on (just as a search bot can’t).
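
Lynx itself is the tool Google points to. If you’d also like a rough, scriptable approximation, the sketch below uses Python’s standard `html.parser` to pull out a page’s visible text and link targets while skipping anything inside `<script>` and `<style>`. It is only an approximation of a text-only view, not a model of how Googlebot actually processes pages, and the `TextView` class and `text_view` function are hypothetical names.

```python
from html.parser import HTMLParser


class TextView(HTMLParser):
    """Collects visible text and link targets, ignoring script/style content."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.text = []
        self.links = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-blank text that is not inside a skipped element.
        if not self._skip_depth and data.strip():
            self.text.append(data.strip())


def text_view(html):
    """Return (visible text, list of hrefs) for an HTML string."""
    parser = TextView()
    parser.feed(html)
    parser.close()
    return " ".join(parser.text), parser.links


page = """<html><head><script>var x = 1;</script></head>
<body><h1>Widgets</h1><p>Hand-made <a href="/shop">widgets</a>.</p></body></html>"""
text, links = text_view(page)
print(text)   # Widgets Hand-made widgets .
print(links)  # ['/shop']
```

If important copy or navigation only shows up once scripts run, it won’t appear in this output, which is the same warning sign Lynx gives you.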

Another option is to use [Open Site Explorer](http://www.opensiteexplorer.org/) to examine links. This free tool (part of the [Moz](http://moz.com/) suite) lets you export your inbound links as a .CSV file, which gives you a working list for examining your links and beginning to address the problem.
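
Once you have the export, a few lines of Python can give you a quick overview, such as how many links each domain sends you and which anchor texts dominate; a single domain supplying lots of identical keyword anchors is worth a closer look. This is a minimal sketch: the column names `URL` and `Anchor Text` are assumptions, so check the header row of your own export and adjust them.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlparse

# Hypothetical column names: match these to the header row of your export.
URL_COLUMN = "URL"
ANCHOR_COLUMN = "Anchor Text"


def summarise_links(csv_file):
    """Count inbound links per linking domain and per anchor text."""
    domains = Counter()
    anchors = Counter()
    for row in csv.DictReader(csv_file):
        url = row[URL_COLUMN].strip()
        if "//" not in url:
            url = "//" + url  # let urlparse find the host when the scheme is missing
        domains[urlparse(url).netloc.lower()] += 1
        anchors[row[ANCHOR_COLUMN].strip().lower()] += 1
    return domains, anchors


# A tiny inline sample standing in for a real export file.
sample = io.StringIO(
    "URL,Anchor Text\n"
    "http://spam-directory.example/page1,cheap widgets\n"
    "http://spam-directory.example/page2,cheap widgets\n"
    "https://news.example/review,Acme Widgets\n"
)
domains, anchors = summarise_links(sample)
print(domains.most_common(1))  # [('spam-directory.example', 2)]
print(anchors.most_common(1))  # [('cheap widgets', 2)]
```

For a real file, open it with `open("inbound_links.csv", newline="", encoding="utf-8")` (the filename is just an example) and pass the file object to `summarise_links`.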

Getting organised

Before you begin, it pays to get yourself organised by setting up a spreadsheet containing all your link information. This gives you a record of the changes you’ve made, which is helpful when submitting a reconsideration request.

Your spreadsheet should include:

- The URL each link comes from and the URL it points to

- Anchor text details

- Contact details for the linking sites

- Removal/nofollow requests sent to each site, including dates and the number of requests made

- Link status (removed/nofollow/live)
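
If you prefer to keep the sheet in a script rather than by hand, one row per link maps neatly onto a small record type. The sketch below is one possible layout, assuming hypothetical field names that mirror the list above; it writes a CSV that any spreadsheet program (including Google Docs) can open.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date
from typing import List


@dataclass
class LinkRecord:
    """One row of the link-tracking spreadsheet (field names are illustrative)."""

    STATUSES = ("removed", "nofollow", "live")  # class constant, not a column

    from_url: str            # page the link appears on
    to_url: str              # page on your site it points to
    anchor_text: str
    contact: str             # webmaster email or contact-form URL
    request_dates: str = ""  # semicolon-separated dates requests were sent
    requests_sent: int = 0
    status: str = "live"

    def __post_init__(self):
        if self.status not in self.STATUSES:
            raise ValueError(f"status must be one of {self.STATUSES}")

    def log_request(self, when: date) -> None:
        """Record that another removal/nofollow request was sent."""
        self.request_dates = ";".join(filter(None, [self.request_dates, when.isoformat()]))
        self.requests_sent += 1


def write_sheet(records: List[LinkRecord], out) -> None:
    """Write all records, with a header row, as CSV to a file-like object."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(LinkRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


rec = LinkRecord("http://spam-directory.example/page1", "https://yoursite.example/",
                 "cheap widgets", "webmaster@spam-directory.example")
rec.log_request(date(2014, 3, 1))
rec.log_request(date(2014, 3, 15))
buf = io.StringIO()
write_sheet([rec], buf)
print(buf.getvalue())
```

Keeping dates and request counts in the same row as the link means the record of your efforts builds up automatically as you work through the list.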

Removing Bad Links

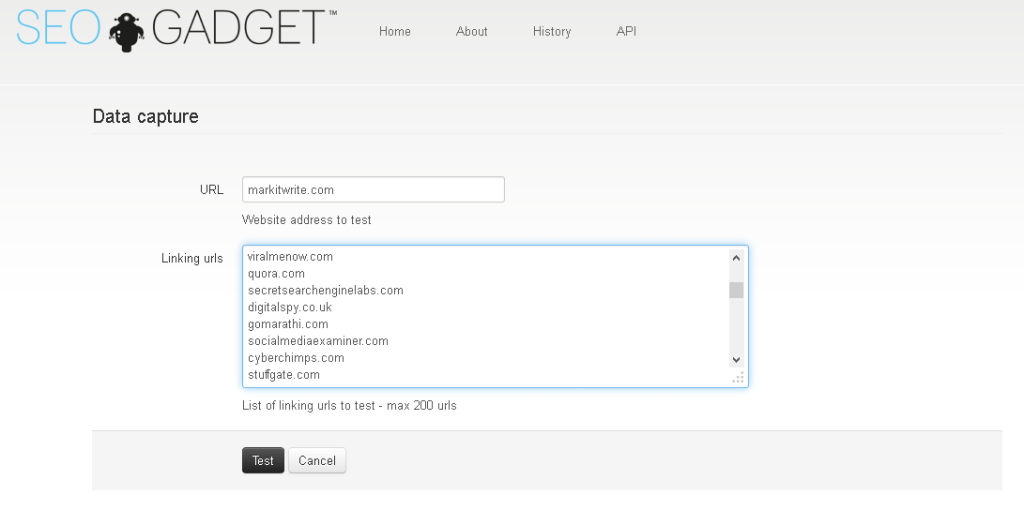

Before you can remove ‘bad links’, you will have to determine which ones are doing the damage. Of the many bad link tools out there, the SEOgadgets tool has the best reputation. It lets you upload links 200 at a time from an Excel/CSV file, which you can create with Open Site Explorer as mentioned above or, if you prefer, by downloading your links directly from Webmaster Tools. On the next screen, you can wait whilst the tool analyses each link, or you can enter your email address and ask to be notified when the report has been generated.

The tool uses its own scoring algorithm to decide which links are safe and which aren’t, based on link information from SEOMoz, a trusted source. It also attempts to find contact information for each link and gathers other details such as anchor text, social media details, Authorship and link metrics such as Google PageRank and SEOMoz Domain Authority.

Best of all, as well as coming from a trusted company, SEOgadgets is free, unlike many of the other tools out there, some of which charge $10-20 per link. That’s a lot of cash if you have a lot of links to work with.
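
If your export holds more than 200 links, you’ll need to upload it in several pieces. A minimal sketch of splitting one CSV export into files of at most 200 rows, each keeping the original header row, might look like this; the `batches` and `split_export` names are hypothetical, and whether the tool wants a header row in every file is an assumption worth checking before you upload.

```python
import csv
import io
from typing import Iterator, List

BATCH_SIZE = 200  # the per-upload limit mentioned above


def batches(rows: List[dict], size: int = BATCH_SIZE) -> Iterator[List[dict]]:
    """Yield consecutive slices of at most `size` rows."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def split_export(csv_file, size: int = BATCH_SIZE) -> List[str]:
    """Split a CSV export into CSV strings of at most `size` data rows, each with the header."""
    reader = csv.DictReader(csv_file)
    rows = list(reader)
    chunks = []
    for batch in batches(rows, size):
        out = io.StringIO()
        writer = csv.DictWriter(out, fieldnames=reader.fieldnames)
        writer.writeheader()
        writer.writerows(batch)
        chunks.append(out.getvalue())
    return chunks


# 450 made-up links should become files of 200, 200 and 50 rows.
sample = io.StringIO("URL\n" + "".join(f"http://site{i}.example/\n" for i in range(450)))
chunks = split_export(sample)
print([chunk.count("\n") - 1 for chunk in chunks])  # [200, 200, 50]
```

Write each string to its own file, opened with `newline=""` so the CSV line endings are left untouched.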

You can then export all of the information from the tool and act on the bad links by contacting the site owner or webmaster and asking for each link to be nofollowed or removed completely. Do take care, though: when I tested the tool, it flagged as bad some links that I know are fine, so check sites manually too.

Finally, you can also use the Google Disavow tool if you really have to, but unless you’re very conversant with SEO, it’s not recommended. The tool is meant for links that you have absolutely no way of cleaning up and that you’re sure are having a detrimental effect on the site’s ranking. These links must be submitted to Google in a .txt file containing one link per line.

The file must also be UTF-8 or 7-bit ASCII encoded. You can add notes explaining why a link is being disavowed by starting a line with the # symbol, which marks it as a descriptive line rather than a link. Using the tool instructs Google to ignore the bad links you can’t get removed, but don’t expect instant results; it can take a while to have an effect.
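
Because the format is so simple, it’s easy to generate the file from the links you’ve failed to get removed. The sketch below writes one `#` comment line per group explaining what you tried, then one URL per line, and encodes the result as UTF-8. Google’s disavow format also accepts `domain:` lines that cover every link from a domain, which the sketch supports as an option. The function name and the example URLs are hypothetical.

```python
from typing import Dict, Iterable, List


def build_disavow(groups: Dict[str, List[str]], domains: Iterable[str] = ()) -> str:
    """Return disavow-file text: a '#' comment line per group, then one URL per line."""
    lines = []
    for note, urls in groups.items():
        lines.append(f"# {note}")  # descriptive line, not a link
        lines.extend(url.strip() for url in urls)
    for domain in domains:
        lines.append(f"domain:{domain}")  # covers every link from this domain
    return "\n".join(lines) + "\n"


text = build_disavow(
    {"Webmaster contacted 2014-03-01 and 2014-03-15, no response": [
        "http://spam-directory.example/page1",
        "http://spam-directory.example/page2",
    ]},
    domains=["link-farm.example"],
)
data = text.encode("utf-8")  # save these bytes as your .txt file for upload
print(text)
```

To save it directly, open the file with `open("disavow.txt", "w", encoding="utf-8")` and write `text`.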

Before Resubmission

Before you ask Google to reconsider the site, you should also review the following:

- Site architecture

- The use of keywords throughout the content

- What’s included in meta descriptions and titles

- That no keywords are placed in the meta keywords tag

Site architecture should follow a logical pattern and include internal linking. Keyword density throughout the site should be measured and, if necessary, lowered so that the copy reads naturally. Keywords remain a well-loved SEO tactic, but you should focus on one or two key phrases rather than using one word over and over again. Similar, related phrases also work well.
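
There are several ways to define keyword density; a common one is the share of a page’s words taken up by occurrences of the phrase. A minimal sketch of that definition follows. The tokenising rule is a simplifying assumption, and there’s no official ‘safe’ figure, so treat the number as a prompt to reread the copy rather than a target.

```python
import re


def words(text):
    """Lower-case word tokens (letters, digits and apostrophes)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def keyword_density(text, phrase):
    """Percentage of words taken up by `phrase`: occurrences x phrase length / total words x 100."""
    tokens = words(text)
    target = words(phrase)
    n = len(target)
    if not tokens or not n:
        return 0.0
    hits = sum(1 for i in range(len(tokens) - n + 1) if tokens[i:i + n] == target)
    return 100.0 * hits * n / len(tokens)


copy = "Cheap widgets! Buy cheap widgets today. Our cheap widgets are the best cheap widgets."
density = keyword_density(copy, "cheap widgets")
print(round(density, 1))  # 57.1 (4 occurrences x 2 words / 14 words)
```

A result like that is a clear sign the copy was written for search engines rather than readers.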

Meta descriptions and page titles should all reflect the content of the page and be different for each page on the site.
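
On a large site, duplicates are easy to miss by eye. Given each page’s title and meta description (gathered however you like, for example from a crawl), a short sketch can group the pages that share them. The input shape and the `find_duplicates` name are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def find_duplicates(pages: Dict[str, Tuple[str, str]]) -> Dict[str, Dict[str, List[str]]]:
    """Group page URLs sharing an identical title or meta description.

    `pages` maps URL -> (title, meta description); comparison ignores case
    and surrounding whitespace.
    """
    by_field = {"title": defaultdict(list), "description": defaultdict(list)}
    for url, (title, description) in pages.items():
        by_field["title"][title.strip().lower()].append(url)
        by_field["description"][description.strip().lower()].append(url)
    # Keep only values used by more than one page.
    return {
        field: {value: urls for value, urls in groups.items() if len(urls) > 1}
        for field, groups in by_field.items()
    }


pages = {
    "/": ("Acme Widgets", "Hand-made widgets from Acme."),
    "/shop": ("Acme Widgets", "Browse our full widget range."),
    "/about": ("About Acme", "Hand-made widgets from Acme."),
}
dupes = find_duplicates(pages)
print(dupes["title"])        # {'acme widgets': ['/', '/shop']}
print(dupes["description"])  # {'hand-made widgets from acme.': ['/', '/about']}
```

Pages with a missing description end up grouped under an empty string, which is just as useful to know.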

Links are not the only things that may have got you a manual penalty. You should also look at:

- potential malware attacks/injections

- poorly written or ‘thin’ content that offers nothing of value

- hidden text (for example in meta information, on cloaked pages, or as black text on a black background)

- user-generated spam in comments or community areas of the site

- ‘spammy freehosts’

Free hosting services are unfortunately often associated with spam because they tend to attract low-quality sites.
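
Of the items above, hidden text is the one you can partly check with a script. The sketch below flags elements whose *inline* `style` attribute hides them or sets the text colour equal to the background colour. It is a rough heuristic under clear limitations: it ignores external stylesheets, only matches colours written identically (`black` and `#000` won’t match), and legitimate sites hide elements such as menus all the time, so every flag needs checking by hand. The class and function names are hypothetical.

```python
import re
from html.parser import HTMLParser


def parse_style(style):
    """Turn 'color: #000; display:none' into {'color': '#000', 'display': 'none'}."""
    rules = {}
    for declaration in style.split(";"):
        if ":" in declaration:
            prop, value = declaration.split(":", 1)
            rules[prop.strip().lower()] = re.sub(r"\s+", "", value).lower()
    return rules


class HiddenTextFinder(HTMLParser):
    """Records (tag, reason) for elements whose inline style looks like hidden text."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        rules = parse_style(dict(attrs).get("style") or "")
        background = rules.get("background-color", rules.get("background"))
        if rules.get("display") == "none" or rules.get("visibility") == "hidden":
            self.findings.append((tag, "hidden by display/visibility"))
        elif "color" in rules and rules["color"] == background:
            self.findings.append((tag, "text colour matches background"))


html = ('<p style="color:#000; background-color:#000">cheap widgets cheap widgets</p>'
        '<div style="display: none">more keywords</div><p>Visible copy</p>')
finder = HiddenTextFinder()
finder.feed(html)
finder.close()
print(finder.findings)
```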

The tricky part

OK, so you’ve done all the link cleaning you can, you’ve checked the site over with a fine-tooth comb and moved hosts if necessary. Now it’s time to ask Google nicely if it will reconsider indexing your site.

You can do this directly from the Manual Actions page in Webmaster Tools. In your reconsideration request, you should include as much information as possible in order to, as Matt Cutts puts it, offer:

clear, compelling evidence [to make it] easier for Google to make an assessment

If unnatural links caused the penalty, make your link-tracking spreadsheet available as a Google Docs spreadsheet and share the link to it in your request.
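
Alongside the spreadsheet itself, a one-line summary of the numbers makes the scale of your clean-up clear at a glance. A minimal sketch that tallies the rows of a tracking sheet, assuming hypothetical `status` (removed/nofollow/live) and `requests_sent` columns such as you might get from `csv.DictReader`:

```python
from collections import Counter


def summarise_cleanup(rows):
    """Tally link statuses and outreach effort from tracking-sheet rows (dicts)."""
    statuses = Counter(row["status"] for row in rows)
    total_requests = sum(int(row["requests_sent"]) for row in rows)
    return (
        f"{len(rows)} unnatural links reviewed: "
        f"{statuses['removed']} removed, {statuses['nofollow']} nofollowed, "
        f"{statuses['live']} still live. "
        f"{total_requests} removal/nofollow requests sent in total."
    )


rows = [
    {"status": "removed", "requests_sent": "2"},
    {"status": "nofollow", "requests_sent": "1"},
    {"status": "live", "requests_sent": "3"},
]
summary = summarise_cleanup(rows)
print(summary)
# 3 unnatural links reviewed: 1 removed, 1 nofollowed, 1 still live. 6 removal/nofollow requests sent in total.
```

Figures like these give the reviewer a quick sense of how much work has gone in before they open the spreadsheet itself.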

You should also explain how you wound up with unnatural links. If you’ve used an agency, tell Google. If a member of your staff put the links in place, tell Google what you have done to ensure that the mistake won’t be repeated. It’s your job to convince the search engine’s staff that this will never happen again, and the more information you can give to support your request, the better. Leave no stone unturned: make the case that your site, and its administrators, can be trusted 100 per cent not to repeat the problem.

If you’ve been thorough, dealt with every unnatural link and cleaned up every aspect of the site, Google should have no reason to refuse. If it does, you can appeal, but make absolutely sure that the site is clean first.

It’s not pleasant getting a penalty from Google and if your site has a lot of backlinks, it can be a laborious and drawn-out process. However, it’s got to be done if you want to maintain a web presence, especially if you’re in business.

The best way to avoid getting a manual penalty is to ensure that you do everything according to the rules, keep a keen eye on the sites that are linking to your content and forget chasing links as a form of SEO. Instead, build relationships with editors and sites in your niche if you want to write for other sites and want them to link back to yours. Use a bio and sign up for Google Authorship and make sure that you offer quality content – don’t insist upon a link either, or it will be clear that it’s all you’re really after.

SEO is getting tougher now and this is a positive step as far as I’m concerned. It makes for a better web for all of us, one that’s more useful and where high quality work can rise to the top.

Frequently Asked Questions (FAQs) about SEO Disasters and Google De-indexing

What is Google de-indexing and how does it affect my website?

Google de-indexing refers to the removal of a webpage or a whole website from Google’s search results. This usually happens when Google finds that the site violates its guidelines. The impact is significant: pages that have been removed from the index cannot appear in Google’s search results at all, so the organic search traffic they used to receive disappears.

What are the common reasons for Google to de-index a site?

There are several reasons why Google might de-index a site. These include spammy or low-quality content, keyword stuffing, cloaking, hidden text or links, and user-generated spam. Other reasons could be harmful behavior like phishing or installing malware, and sneaky redirects.

How can I find out if my site has been de-indexed by Google?

You can check if your site has been de-indexed by performing a site search on Google. Simply type “site:yourwebsite.com” into Google’s search bar. If no results show up, your site may have been de-indexed. You can also check Google Search Console (formerly Webmaster Tools) for any messages or notifications about penalties.

Can I recover my site after it has been de-indexed by Google?

Yes, it is possible to recover a de-indexed site. The first step is to identify and fix the issues that led to the de-indexing. Once you’ve made the necessary changes, you can submit a reconsideration request to Google through the Search Console.

What is a reconsideration request and how do I submit one?

A reconsideration request is a request to Google to review your site after you’ve fixed the issues that led to a penalty. You can submit a reconsideration request through Google Search Console. It’s important to provide detailed information about the actions you’ve taken to fix the issues.

How long does it take for Google to process a reconsideration request?

The time it takes for Google to process a reconsideration request can vary. Generally, it can take anywhere from a few days to a few weeks. Google will notify you of the outcome in the Search Console.

What is the impact of paid reviews on my site’s ranking?

Paying for reviews can negatively impact your site’s ranking. Google considers this practice a violation of its guidelines because it can mislead users and create an unfair advantage in search results.

How can I prevent my site from being de-indexed by Google?

To prevent your site from being de-indexed, ensure that your site complies with Google’s Webmaster Guidelines. This includes creating high-quality, original content, avoiding deceptive practices, and making your site easily navigable.

What is the role of SEO in preventing de-indexing?

SEO plays a crucial role in preventing de-indexing. Proper SEO practices such as using relevant keywords, creating quality content, and building legitimate backlinks can help your site stay in Google’s good graces.

Can I still use paid advertising if my site has been de-indexed?

Yes, you can still use paid advertising like Google Ads even if your site has been de-indexed. However, it’s important to address the issues that led to the de-indexing to ensure the long-term success of your site.

Kerry is a prolific technology writer, covering a range of subjects from design & development, SEO & social, to corporate tech & gadgets. Co-author of SitePoint’s Jump Start HTML5, Kerry also heads up digital content agency markITwrite and is an all-round geek.