Ray Ozzie's proposal to end the long-simmering crypto war between law enforcement and much of the tech world is getting a chilly reception from privacy advocates and security experts. They argue his plan is largely the same key-escrow program proposed 20 years ago and suffers from the same fatal shortcomings.

Dubbed "Clear," Ozzie's idea was described in general terms last month and detailed for the first time Wednesday in an article published in Wired. The former chief technical officer and chief software architect of Microsoft and the creator of Lotus Notes, Ozzie portrays Clear as a potential breakthrough in bridging the widening gulf between those who say the US government has a legitimate need to bypass encryption in extreme cases, such as those involving terrorism and child abuse, and technologists and civil libertarians who warn such bypasses threaten the security of billions of people.

In a nutshell, here's how Clear works:

- Apple and other manufacturers would each generate a cryptographic keypair, install the public key on every device they sell, and keep the private key in the same type of ultra-secure storage vault they use to safeguard code-signing keys.

- The public key on the phone would be used to encrypt the PIN users set to unlock their devices. This encrypted PIN would then be stored on the device.

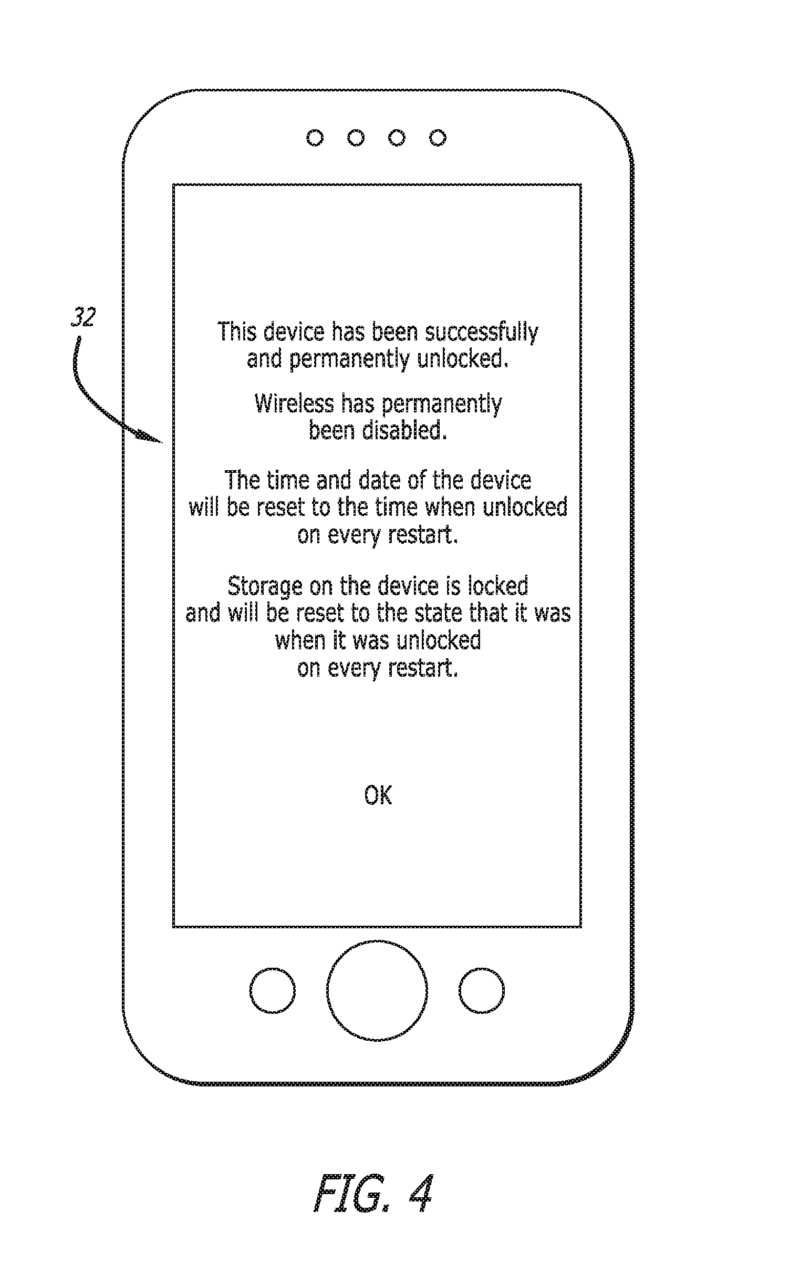

- In cases where "exceptional access" is justified, law enforcement officials would first obtain a search warrant allowing them to place a device in their physical possession into a special recovery mode. This mode would (a) display the encrypted PIN and (b) effectively brick the phone, permanently preventing it from being used further and preventing the data on it from being erased.

- Law enforcement officials would send the encrypted PIN to the manufacturer. Once the manufacturer is certain the warrant is valid, it would use the private key stored in its secure vault to decrypt the PIN and provide it to the law enforcement officials.
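The four steps above can be sketched in a few dozen lines of Python. This is a minimal toy model, not Ozzie's design or any manufacturer's code: it assumes textbook RSA with tiny, hard-coded Mersenne-prime parameters, a random nonce packed next to the PIN in place of real padding such as OAEP, and hypothetical names (`generate_manufacturer_keypair`, `escrow_pin`, `Device`, `recover_pin`). The warrant check is reduced to a boolean, since how a manufacturer would validate warrants isn't specified.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass

# TOY parameters: textbook RSA over two Mersenne primes, 2**89 - 1 and 2**127 - 1.
# Hypothetical and far too weak for real use; they only make the flow concrete.
P, Q, E = 2**89 - 1, 2**127 - 1, 65537
PIN_BITS = 64  # the low 64 bits of each plaintext hold the ASCII PIN (<= 8 chars)


@dataclass(frozen=True)
class PublicKey:
    n: int
    e: int


@dataclass(frozen=True)
class PrivateKey:
    n: int
    d: int


def generate_manufacturer_keypair() -> tuple[PublicKey, PrivateKey]:
    """Step 1: one keypair per manufacturer; the private half stays in the vault."""
    n, phi = P * Q, (P - 1) * (Q - 1)
    return PublicKey(n, E), PrivateKey(n, pow(E, -1, phi))  # needs Python 3.8+


def escrow_pin(pin: str, pub: PublicKey) -> int:
    """Step 2: the device encrypts the user's PIN under the manufacturer's public key."""
    pin_bytes = pin.encode("ascii")
    if not 0 < len(pin_bytes) <= PIN_BITS // 8:
        raise ValueError("toy encoding supports PINs of 1 to 8 characters")
    # A random 128-bit nonce in the high bits keeps anyone holding the public key
    # from encrypting all 10**6 six-digit PINs and matching ciphertexts.
    m = (secrets.randbits(128) << PIN_BITS) | int.from_bytes(pin_bytes, "big")
    return pow(m, pub.e, pub.n)  # m < 2**192 < n, so textbook RSA round-trips


@dataclass
class Device:
    escrowed_pin: int
    bricked: bool = False

    def enter_recovery_mode(self) -> int:
        """Step 3: display the encrypted PIN and permanently brick the device."""
        self.bricked = True  # one-way latch: nothing in this model clears it
        return self.escrowed_pin


def recover_pin(blob: int, priv: PrivateKey, warrant_is_valid: bool) -> str:
    """Step 4: the manufacturer decrypts only after it has validated the warrant."""
    if not warrant_is_valid:  # stand-in for a real legal review
        raise PermissionError("warrant rejected")
    m = pow(blob, priv.d, priv.n)
    low = m & ((1 << PIN_BITS) - 1)
    # ASCII PIN characters are never 0x00, so stripping leading zero bytes is safe.
    return low.to_bytes(PIN_BITS // 8, "big").lstrip(b"\x00").decode("ascii")


if __name__ == "__main__":
    pub, priv = generate_manufacturer_keypair()
    phone = Device(escrowed_pin=escrow_pin("482913", pub))
    blob = phone.enter_recovery_mode()
    print(phone.bricked, recover_pin(blob, priv, warrant_is_valid=True))
    # prints: True 482913
```

Note that `recover_pin` needs nothing device-specific: the single `priv` object decrypts the escrowed PIN of every phone provisioned with `pub`. That is exactly why critics describe the vault holding it as such an attractive target.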

Remember Clipper?

Almost as soon as the Wired article was published, security experts and privacy advocates took to social media to criticize Clear. Little of their criticism was new. Instead, they largely cited shortcomings first voiced in the 1990s, when the Clinton administration proposed a key-escrow system that would be enabled by the so-called Clipper chip. In fairness to Ozzie, Clear has one significant difference: the automatic bricking feature. Critics are skeptical of that, too, for reasons discussed later in this post. First, here are the main objections to Clear, as outlined in a blog post published Thursday by Matt Green, a Johns Hopkins professor specializing in cryptography and security.

The private keys that Clear envisions would unlock a dizzying number of encrypted PINs. Apple alone manages nearly a billion active iPhones and iPads. Keys with that kind of power—no matter how well fortified—would be too valuable a target for organized, well-financed hacking groups to pass up. Green wrote:

Does this vault sound like it might become a target for organized criminals and well-funded foreign intelligence agencies? If it sounds that way to you, then you've hit on one of the most challenging problems with deploying key-escrow systems at this scale. Centralized key repositories—that can decrypt every phone in the world—are basically a magnet for the sort of attackers you absolutely don't want to be forced to defend yourself against.

This is essentially the same argument Apple and its supporters made in 2016 when opposing FBI efforts to force Cupertino to write a special version of iOS that would decrypt the iPhone 5c of one of the San Bernardino shooters who killed 14 and injured 22. While the FBI argued the software would be used to decrypt a single phone, critics said it would be too easy for this software to fall into the wrong hands and be used to decrypt other devices. Ultimately, the FBI dropped its demands after finding a private company to unlock the iPhone.

To be effective, Clear would have to bind not just Apple but the manufacturers of all computing devices, many of them low-cost products made by bootstrapped companies. That means Clear would mandate dozens, hundreds, or more likely thousands of PIN vaults, and each of them would be a potential target for hackers around the world.

"If ever a single attacker gains access to that vault and is able to extract, at most, a few gigabytes of data (around the size of an iTunes movie), then the attackers will gain unencrypted access to every device [made by that manufacturer] in the world," Green wrote. "Even better: if the attackers can do this surreptitiously, you'll never know they did it."

The Johns Hopkins professor also notes that code-signing keys, which are used to certify legitimate software, are occasionally stolen or misused, in some cases despite the use of hardware security modules meant to protect them. Adobe, for example, disclosed in 2012 that attackers had compromised its code-signing infrastructure and used it to sign malware. If that can happen to Adobe, it can happen to device manufacturers, too.

Bricking

Clear does provide one improvement over most proposed key-escrow schemes—the effective bricking of a device that's unlocked. That, in theory, would prevent Clear from being used to access a phone over an extended period of time. Bricking would also make it obvious to most device owners that their phone has been unlocked, a countermeasure that would serve as a major deterrent to organizations such as the National Security Agency, which almost always values preserving the secrecy of its operations over other imperatives.

The mechanism for enforcing this bricking safeguard has yet to be developed. Presumably, it would rely on the same type of secure enclave processor (SEP) that iPhones use to limit the number of incorrect PIN entries a user can make. After a certain number of wrong guesses, the SEP increases the time the iPhone takes to accept new guesses. Once a certain threshold is reached, the SEP can permanently wipe the data on the device.
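The attempt-limiting behavior described above, escalating delays followed by a wipe, can be modeled as a small state machine. This is a hedged sketch: `AttemptLimiter` is a hypothetical name, the delay schedule is an assumption loosely modeled on figures Apple has published for iOS, and a real SEP enforces these rules in dedicated hardware and firmware rather than in ordinary application code. A Clear-style bricking latch would need the same property: a one-way state that software on the main processor cannot reset.

```python
import hmac
from dataclasses import dataclass

# ASSUMED schedule: failed-attempt count -> seconds before the next guess is
# accepted. Loosely modeled on Apple's published iOS figures; illustrative only.
DELAY_AFTER_FAILURE = {5: 60, 6: 5 * 60, 7: 15 * 60, 8: 15 * 60, 9: 60 * 60}
WIPE_THRESHOLD = 10  # hypothetical: erase on the 10th consecutive failure


@dataclass
class AttemptLimiter:
    """Toy model of a secure-enclave PIN counter: escalating delays, then a wipe."""

    correct_pin: str
    failures: int = 0
    locked_until: float = 0.0
    wiped: bool = False  # one-way latch: nothing in this class ever clears it

    def try_pin(self, guess: str, now: float) -> str:
        if self.wiped:
            return "wiped"
        if now < self.locked_until:
            return "locked"  # too early; the guess is not even evaluated
        # Constant-time comparison so timing does not leak how much of the guess matched.
        if hmac.compare_digest(guess.encode(), self.correct_pin.encode()):
            self.failures = 0
            return "unlocked"
        self.failures += 1
        if self.failures >= WIPE_THRESHOLD:
            self.wiped = True  # a real device would destroy its encryption keys here
            return "wiped"
        self.locked_until = now + DELAY_AFTER_FAILURE.get(self.failures, 0)
        return "rejected"


if __name__ == "__main__":
    sep = AttemptLimiter(correct_pin="482913")
    now = 0.0
    for _ in range(WIPE_THRESHOLD):
        status = sep.try_pin("000000", now)
        now = max(now, sep.locked_until)  # a patient attacker waits out every delay
    print(status, now)  # prints: wiped 5760.0
```

The protection rests entirely on the counter and the `wiped` flag being impossible to tamper with. A tool that can reset or bypass them, as forensics vendors are presumed to do, removes the limit on guessing altogether.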

But as Green notes, flaws in the iPhone's SEP are presumed to be how forensic software sold by companies such as Cellebrite unlocks iPhones protected by PINs or weak passcodes. If a secure enclave designed by the world's most valuable company can be hacked, there's no reason to think a new one built to enforce Clear's mandated bricking would fare any better.

There are other reasons for skepticism. For instance, once Clear is built, what's to stop China from insisting it be given PINs to unlock the phones of human-rights dissidents? That would put the Apples and Samsungs of the world in the near-impossible position of either complying or alienating the government of one of their biggest markets. Like most of the other objections, this one has been raised against nearly every proposed solution to what the FBI calls its "going dark" problem. The more critics look into Ozzie's plan and the patent implementing it, the more it looks like the same flawed key escrow that was soundly rejected two decades ago.

Post corrected in the 9th paragraph to specify that the private key is the thing targeted by hackers. There is no need for manufacturers to store encrypted PINs.

Listing image by Getty Images | Boonrit Panyaphinitnugoon
