In this guest article, our friends at Intel discuss how CPUs prove better for some important Deep Learning. Here’s why, and keep your GPUs handy!

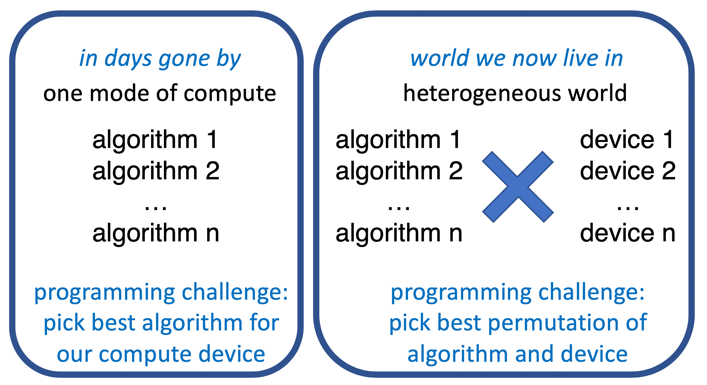

When programming in a heterogeneous world, we need to optimize a combination of algorithms and hardware devices.

Two Examples of Superior Results by Matching Algorithms to Devices

In a heterogeneous world, the choice for an optimal device might surprise us (there is no single winner all the time) — and it can be a challenge to find the best combination.

Following are two examples that illustrate that picking the best algorithm + device combination may not be obvious without some experimentation. In two cases, AI algorithms that had been running on GPUs, turned out to have better options available to them on CPUs — once some rethinking was done.

Both are great examples of permutations available on a heterogeneous machine — we need to consider algorithm X on device A, vs. algorithm Y on device B.

Example 1: Multifaceted Implications of Compact Sparse Memory Representation

Researchers [1] looking to improve Neural machine translation (NMT), investigated moving from sparse gradient gather operations to the use of dense gradient reduction operations. Switching to a dense matrix representation, proved to benefit from the larger memory capacities and scale-out capabilities of CPUs. In addition to superior performance and scaling using CPUs, it also (perhaps surprisingly) reduced the memory footprint of the application. The researchers reported that their code using a dense representation resulted in a more than 82x reduction (11446MB to 139MB) in the amount of memory required by a 64-node run, and it showed more than a 25x reduction in time required for the accumulation operation (4321ms to 169ms).

The positive effects of their work are available in Horovod (0.15.2 and later), allowing anyone to benefit from their approach. The researchers actively encourage others to think as they have, because they believe that their rethinking of such work has applicability to other frameworks and libraries, such as BERT (Bidirectional Encoder Representations from Transformers).

Example 2: Higher Complexity Models Improve X-Ray Analysis

Scale-out, large-batch training recently proved to be an effective way to speed up neural network training for Chest X-Ray analysisi on Intel® Xeon® Scalable platforms. Researchers saw improved classification accuracy without significantly increasing the total number of passes through the dataset required. The upscaled version of ResNet-50, called ResNet-59, utilized the full 1024×1024 images to improve classification accuracy even further — an optimization not possible within the memory constraints of the GPU systems the code ran on previously.

Hammers for Nails, but Don’t Throw Away the Screwdrivers

Heterogeneous computing ushers in a world where we must consider permutations of algorithms and devices to find the best platform solution. No single device will win all the time, so we need to constantly assess our choices and assumptions.

The goal of a heterogeneous system is to offer more choices, to deliver more performance. In the two cases shown, the superior memory capacity of Intel Xeon Scalable platforms was the critical element that made it a superior solution for these AI / Big Data problems.

Welcome to heterogeneous systems!

To learn more about the research illustrating these important Deep Learning results – download Switch to CPUs Improves Chest X-Ray Analysis with Deep Learning, Accelerates Neural Machine Translation Models.

[1] Learn the details at https://www.intel.com/content/www/us/en/products/docs/network-io/high-performance-fabrics/opa-xeon-scalable-surfsara-research-case-study.html

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.