Optical microscopes depend on light, of course, but they are also limited by that same light. Typically, anything under 200 nanometers just blurs together because of the wavelength of the light being used to observe it. However, engineers at the University of California San Diego have published results using a hyperbolic metamaterial made of silver and silica to push optical microscopy below 40 nanometers. The original paper is also available online.
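For a sense of where the roughly 200 nm figure comes from, the classic Abbe estimate puts the smallest resolvable spacing at about d = λ / (2·NA). The sketch below assumes 488 nm and 550 nm light and a high-end oil-immersion objective with NA = 1.4. These are illustrative numbers, not values taken from the paper.

```python
def abbe_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Approximate lateral resolution limit d = lambda / (2 * NA), in nanometres."""
    if numerical_aperture <= 0:
        raise ValueError("numerical aperture must be positive")
    return wavelength_nm / (2.0 * numerical_aperture)


if __name__ == "__main__":
    # Assumed, illustrative values: two visible wavelengths and an NA 1.4 objective.
    for wavelength in (488.0, 550.0):
        print(f"{wavelength:.0f} nm light, NA 1.4 -> {abbe_limit_nm(wavelength, 1.4):.0f} nm")
```

Even with one of the best conventional objectives, visible light bottoms out somewhere around 170 to 200 nm. That is why getting below 40 nm needs some kind of trick.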

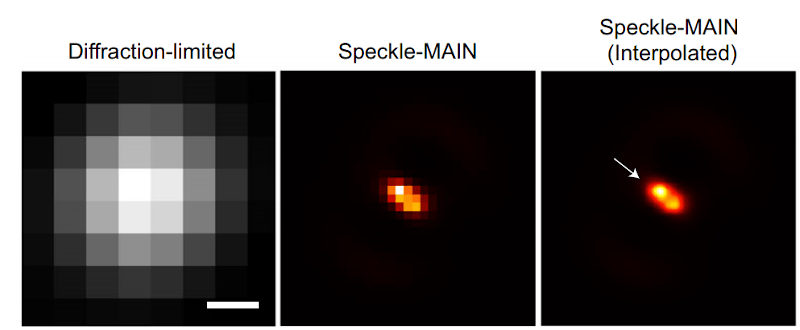

The technique also requires image processing. Light passing through the metamaterial breaks up into a speckle pattern, and each speckle-illuminated frame is a low-resolution image. Many such frames can then be combined into a high-resolution one. This so-called structured illumination approach isn’t exactly new, but previous techniques reached about 100-nanometer resolution, much coarser than what the researchers achieved with this material.
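The basic trick behind classic structured illumination can be shown in one dimension. When you multiply the sample by a known pattern, you create difference ("moiré") frequencies. Those move detail that lies beyond the optics' cutoff back into the passband. The sketch below is a toy model under stated assumptions. The "microscope" is an ideal low-pass filter, and the cutoff, object, and pattern frequencies are arbitrary. It also uses a regular stripe pattern, not the random speckle used in the paper, so it shows only the frequency-mixing idea.

```python
import numpy as np

N = 1024           # samples across a 1D field of view
x = np.arange(N)
CUTOFF = 150       # hypothetical optical passband, in cycles per field of view
K_OBJ = 250        # fine object detail, deliberately above the cutoff (assumed)
K_ILL = 120        # stripe illumination frequency, inside the passband (assumed)

obj = 1 + np.cos(2 * np.pi * K_OBJ * x / N)   # sample, e.g. fluorophore density
ill = 1 + np.cos(2 * np.pi * K_ILL * x / N)   # striped illumination pattern


def through_optics(signal: np.ndarray, cutoff: int) -> np.ndarray:
    """Magnitude spectrum after an ideal low-pass 'microscope'."""
    spectrum = np.fft.rfft(signal)
    spectrum[cutoff + 1:] = 0                  # everything above the cutoff is lost
    return np.abs(spectrum)


plain = through_optics(obj, CUTOFF)            # uniform illumination
lit = through_optics(obj * ill, CUTOFF)        # patterned illumination

moire = K_OBJ - K_ILL                          # difference frequency (130 here)
print("uniform illumination, bin", moire, ":", round(float(plain[moire]), 3))
print("patterned illumination, bin", moire, ":", round(float(lit[moire]), 3))
print("object frequency inferred from the moire:", moire + K_ILL)
```

Under uniform illumination, the 250-cycle detail simply vanishes. Under patterned illumination, it reappears at 130 cycles, and because the pattern frequency is known, you can shift it back to where it belongs. The pattern itself has to pass through the same optics, so this kind of mixing extends resolution by roughly a factor of two at most. That fits the roughly 100 nm limit of earlier methods.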

The technique requires a conventional microscope along with a 1-watt laser and a fiber-optic collimator. A digital camera collects the optical data for processing. Lasers used to be exotic, but these days a 1 W laser isn’t especially hard to acquire. If you could figure out how to get or make the metamaterial, this looks like something you could plausibly replicate in your basement.

The university has been doing work in this area for a while. In 2018, they showed a similar method resolving down to 80 nanometers. Sure, a scanning electron microscope can resolve around 10 nanometers, but having to put your specimen in a vacuum chamber and irradiate it with an electron beam poses difficulties for some kinds of samples.

While it might not have the same resolution, your 3D printer can become a microscope. Or, if you really want to image atoms, try building an atomic force microscope.

I know this is pretty old news, but seeing it again got me thinking. Perhaps an even greater resolution is possible. Suppose a material could be made with relatively long pores close in size to the wavelength of the laser illuminating the sample. Then perhaps it could filter out the angled beams, like a diffraction grating but in all directions at once. You could then jiggle the material around and take an average, so it would work a bit like a Fourier transform for each pixel. Sadly, you might need to get pretty close to the sample to see it clearly, and the material would need to sit pretty close to the camera sensor. And if you wanted to keep the material still, you’d need to curve it slightly and angle all the holes. Perhaps it could incorporate some sort of piezoelectric substance to move the focal point, a bit like a liquid-filled lens.

A fancier type of fluorescence microscopy is laser scanning confocal microscopy, which uses a pinhole to screen out light that isn’t in the exact focal plane you want. I don’t think the pinhole is quite as small as the pores you’re proposing, so your idea is only sort of already done. Spinning-disk confocal uses many pinholes to speed things up.

The jiggling idea sounds vaguely like (sub)pixel shifting in camera sensors. I was involved in buying a new microscope a few years ago, and the manufacturers were very keen to push all sorts of resolution improvements, but getting down to 40 nm like in this paper would be brilliant. Bacteria are tiny and seeing their substructures optically is not easy, but as the article says, EM is not always ideal.
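Here is a toy one-dimensional model of how pixel shifting works. It assumes an ideal half-pixel nudge, no noise, and pixels that each average two "fine" samples of the scene. Interleaving two captures then samples the scene at twice the pixel pitch. The function name `capture` is hypothetical and is not any camera vendor's API.

```python
import numpy as np


def capture(scene: np.ndarray, offset: int, pixel_width: int = 2) -> np.ndarray:
    """Hypothetical 1D camera: each pixel averages `pixel_width` fine samples,
    starting `offset` fine samples into the scene."""
    usable = (len(scene) - offset) // pixel_width * pixel_width
    return scene[offset:offset + usable].reshape(-1, pixel_width).mean(axis=1)


rng = np.random.default_rng(0)
scene = rng.random(33)                 # assumed fine-grained "truth", 33 samples

frame_a = capture(scene, offset=0)     # pixels cover samples (0,1), (2,3), ...
frame_b = capture(scene, offset=1)     # sensor nudged half a pixel: (1,2), (3,4), ...

# Interleave the two coarse frames onto the fine grid.
interleaved = np.empty(len(frame_a) + len(frame_b))
interleaved[0::2] = frame_a
interleaved[1::2] = frame_b
print(len(frame_a), "pixels per frame ->", len(interleaved), "samples after interleaving")
```

The interleaved result doubles the sampling density. Each value is still averaged over a full pixel width, though, so the gain comes from finer sampling, not from a sharper optical blur.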

This RIM technique (random illumination microscopy) has more in common with stochastic techniques like PALM/STORM (single-molecule localization microscopy) and is somewhat related to SIM (structured illumination microscopy, like the Zeiss Apotome). Correct me if I’m wrong, but these are all widefield techniques, not confocal (LSM or spinning disk).

Here, distinct fluorophores are excited in a widefield microscope, and the pattern of excited fluorophores changes randomly from one image to the next. This is done by applying some sort of scrambler (the metamaterial) to the laser excitation.

The final image is reconstructed by combining the hundreds or thousands of images this technique needs (as in PALM/STORM), so it suits fixed samples rather than live ones.
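One generic way to get more than a plain average out of many random-illumination frames is to use their fluctuations. The toy sketch below follows the spirit of second-order (variance-based) analysis used in RIM/SOFI-style methods, but it is not the paper's reconstruction pipeline. It assumes a 1D Gaussian point-spread function and two point emitters closer together than the PSF width. It also assumes each emitter sees an independent, exponentially distributed speckle excitation in every frame. The per-pixel mean reproduces the ordinary blurred widefield image. The per-pixel variance behaves as if imaged with the squared PSF, which is narrower.

```python
import numpy as np

rng = np.random.default_rng(1)

NPIX = 64
SIGMA = 5.5              # Gaussian PSF width in pixels (assumed)
SOURCES = (27, 37)       # two point emitters 10 px apart, less than 2 * SIGMA (assumed)
N_FRAMES = 20_000        # number of random-illumination frames (assumed)

x = np.arange(NPIX)
# Row i is the diffraction-limited image of emitter i on its own.
psf_rows = np.array([np.exp(-(x - s) ** 2 / (2 * SIGMA**2)) for s in SOURCES])

# Fully developed speckle intensity is exponentially distributed. Assumption:
# speckle grains are much smaller than the emitter spacing, so each emitter
# gets an independent random excitation in every frame.
excitation = rng.exponential(scale=1.0, size=(N_FRAMES, len(SOURCES)))
frames = excitation @ psf_rows           # shape (N_FRAMES, NPIX)

mean_img = frames.mean(axis=0)           # plain averaging: ordinary widefield blur
var_img = frames.var(axis=0)             # fluctuations: effective PSF is PSF**2

MID = (SOURCES[0] + SOURCES[1]) // 2     # pixel halfway between the emitters
print("mean image     emitter / midpoint:", round(mean_img[SOURCES[0]], 3), round(mean_img[MID], 3))
print("variance image emitter / midpoint:", round(var_img[SOURCES[0]], 3), round(var_img[MID], 3))
```

With these numbers, the mean image peaks between the emitters (one blob), while the variance image dips there (two emitters). The variance estimate converges much more slowly than the mean, which is one reason such methods need so many frames.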

In any case, it’s a really cool development, and it’s interesting to see how quickly mainstream news picked it up.

>Correct me if I’m wrong but these are all widefield techniques, not confocal (LSM or spinning disk).

Oh absolutely, CRJEEA’s comment about shining light through pores just made me think about confocal.

I’d be keen to try something like this – there are some nutrient storage granules in bacteria which I work with but are a pain to image partly because of their size – but I’m sure the commercial versions will be horrendously expensive, as usual. I’d be curious to know how much modification something like the DIY vapor deposition kit that Hackaday covered a day or two ago would need to achieve this, or whether it would work at all.

> I’d be keen to try something like this – there are some nutrient storage granules in bacteria which I work with but are a pain to image partly because of their size

Here’s another implementation from a few years back that doesn’t require exotic materials:

https://www.nature.com/articles/srep02075

And I’m wondering if deep learning could help here. Say you like the look of 100,000 images combined but can realistically take only 500. You could then train a network with 500 combined images as the input and 100,000 combined images as the target output:

https://biii.eu/csbdeep-toolbox-content-aware-image-restoration-care-fiji

https://youtu.be/uMC67X3DdUo?t=256

I don’t know, worth a try I suppose :-)
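Here is a minimal sketch of how such training pairs might be built from a long acquisition, before any network is involved. "Combined" is modeled as a simple per-pixel average, which stands in for whatever reconstruction is actually used. The specimen is hypothetical and the noise is Gaussian. The helper `make_training_pair` is illustrative only. Training the network itself, for example with the CSBDeep/CARE tools linked above, is not shown.

```python
import numpy as np


def make_training_pair(stack: np.ndarray, n_input: int, rng: np.random.Generator):
    """Hypothetical helper: build one (input, target) pair from a stack of frames.

    input  -> average of a random subset of `n_input` frames (the cheap acquisition)
    target -> average of every frame in the stack (the expensive acquisition)
    """
    idx = rng.choice(stack.shape[0], size=n_input, replace=False)
    return stack[idx].mean(axis=0), stack.mean(axis=0)


def rms_error(img: np.ndarray, truth: np.ndarray) -> float:
    """Root-mean-square difference from the known ground truth."""
    return float(np.sqrt(np.mean((img - truth) ** 2)))


rng = np.random.default_rng(2)
truth = np.zeros((32, 32))
truth[12:20, 12:20] = 1.0                                      # hypothetical specimen
stack = truth + rng.normal(scale=1.0, size=(2_000, 32, 32))    # assumed noisy raw frames

x_in, y_target = make_training_pair(stack, n_input=20, rng=rng)
print("input RMS error:", round(rms_error(x_in, truth), 3),
      "| target RMS error:", round(rms_error(y_target, truth), 3))
```

Repeating this with different random subsets gives many input/target pairs from one long acquisition. The network would then learn to map the cheap version onto the expensive one.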

I don’t think you can get any closer to a sample with this setup. Isn’t the sample lying on the metamaterial? The process looks like a combination of image reconstruction from projections and shadowgrams, all requiring a great deal of deconvolution (done with FFTs, using the fact that convolution in the image domain is simple multiplication in the frequency domain).
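The convolution theorem this comment relies on is easy to check numerically. The 1D sketch below compares a direct circular convolution with the FFT route. It then undoes the blur with a basic Wiener filter, which is one common regularized deconvolution and not necessarily what the paper uses. The Gaussian blur, the random "specimen", and the noise-to-signal ratio are all assumptions chosen for illustration.

```python
import numpy as np


def convolve_direct(f: np.ndarray, h: np.ndarray) -> np.ndarray:
    """Circular convolution straight from the definition (O(n^2))."""
    n = len(f)
    return np.array([sum(f[j] * h[(i - j) % n] for j in range(n)) for i in range(n)])


def convolve_fft(f: np.ndarray, h: np.ndarray) -> np.ndarray:
    """The same circular convolution as a pointwise product of spectra."""
    return np.real(np.fft.ifft(np.fft.fft(f) * np.fft.fft(h)))


def wiener_deconvolve(g: np.ndarray, h: np.ndarray, nsr: float = 1e-3) -> np.ndarray:
    """Undo a known blur h; the assumed noise-to-signal ratio `nsr` stops tiny |H| from blowing up."""
    H = np.fft.fft(h)
    W = np.conj(H) / (np.abs(H) ** 2 + nsr)
    return np.real(np.fft.ifft(W * np.fft.fft(g)))


n = 64
rng = np.random.default_rng(3)
f = rng.random(n)                                  # stand-in specimen (assumed)
d = np.minimum(np.arange(n), n - np.arange(n))     # circular distance from pixel 0
h = np.exp(-d**2 / (2 * 2.0**2))
h /= h.sum()                                       # normalized Gaussian blur, sigma = 2 px

g = convolve_fft(f, h)                             # blurred observation
restored = wiener_deconvolve(g, h)
print("error before:", round(float(np.linalg.norm(g - f)), 3),
      "after:", round(float(np.linalg.norm(restored - f)), 3))
```

The FFT version costs O(n log n) instead of O(n²), which is why FFTs are used for deconvolution on full-size images.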

I don’t see how you can avoid the diffraction limit with long, thin collimation. Look at Fig. 3 of the paper: it shows the diffraction-limited image for what I think is the 488 nm light they used. Visible light spans roughly 400–750 nm, and that sets the normal limit for far-field optical systems. Shorter wavelengths aren’t practical because they’re destructive and the sources are tricky and expensive. The semiconductor industry is bearing that cross right now because it needs short wavelengths for sub-10 nm chips.
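A back-of-envelope comparison supports this point. A long, straight pore of diameter D and length L only passes rays within about atan(D/L) of its axis. But an aperture of diameter D also diffracts light into a half-angle of roughly asin(1.22λ/D), the first zero of the Airy pattern. The pore sizes below are arbitrary examples. The Fraunhofer formula is only a rough guide for apertures near the wavelength, so the result is clipped at 90 degrees.

```python
import math


def geometric_half_angle_deg(pore_diameter_nm: float, pore_length_nm: float) -> float:
    """Steepest ray that passes straight through a straight pore (ray optics only)."""
    return math.degrees(math.atan(pore_diameter_nm / pore_length_nm))


def diffraction_half_angle_deg(pore_diameter_nm: float, wavelength_nm: float) -> float:
    """Rough Airy first-zero angle, sin(theta) = 1.22 * lambda / D, clipped at 90 degrees
    (below about that size the light spreads over the whole half-space)."""
    s = min(1.0, 1.22 * wavelength_nm / pore_diameter_nm)
    return math.degrees(math.asin(s))


WAVELENGTH = 488.0  # nm, the excitation wavelength assumed in the comment
for d_nm, length_nm in ((100_000.0, 10_000_000.0), (200.0, 10_000.0)):  # example pores (assumed)
    print(f"pore {d_nm:g} nm wide, {length_nm:g} nm long: "
          f"geometric {geometric_half_angle_deg(d_nm, length_nm):.2f} deg, "
          f"diffraction {diffraction_half_angle_deg(d_nm, WAVELENGTH):.2f} deg")
```

For a wide pore, the geometry dominates. For a pore narrow enough to matter for sub-wavelength detail, diffraction spreads the light far more than the pore's length can restrict it.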

I think this isn’t far-field optics, so I don’t think the usual diffraction limit applies. The main optics are making an image of an image of a different sort. The metamaterial, sitting in the near field of the shadows or reflected light from the specimen, forms an “image” of the speckle dots, which the optics then image and which is deconvolved? I didn’t read the paper closely, but that was my impression.

There are what are called “capillary optics” or “poly-capillary optics” which are used for collecting x-rays. FWIW

https://www.albany.edu/x-ray-optics/polycapillary_optics.htm

Your description matches near-field scanning optical microscopy. Take an AFM tip, put a tiny hole in it, then put that really near your emitter. It can get down to a handful of nanometers in resolution. The trade-off is that you have to be in the near field of the light being emitted, which means being at most a couple hundred nanometers away from the emitter. Good for surface stuff and awful for anything else.
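The evanescent-field picture explains why the tip has to be so close. Detail finer than the wavelength is carried by fields that do not propagate. Instead, they decay exponentially away from the emitter, with a decay constant κ = 2π·sqrt(1/p² − 1/λ²) for a sinusoidal detail of period p. The sketch below assumes 488 nm light in air (refractive index 1) and a few example periods, all chosen only for illustration.

```python
import math


def evanescent_decay_length_nm(period_nm: float, wavelength_nm: float) -> float:
    """1/e decay distance of the field for a sinusoidal detail of the given period.

    Details finer than the wavelength cannot propagate; their field falls off as
    exp(-kappa * z) with kappa = 2*pi*sqrt(1/period**2 - 1/wavelength**2).
    Returns math.inf for details coarse enough to propagate normally.
    """
    q = 1.0 / period_nm**2 - 1.0 / wavelength_nm**2
    if q <= 0:
        return math.inf
    return 1.0 / (2.0 * math.pi * math.sqrt(q))


WAVELENGTH = 488.0  # nm, assumed for illustration (in air)
for period in (1000.0, 400.0, 80.0):  # example periods; 80 nm means 40 nm lines and spaces
    print(f"{period:g} nm period: 1/e decay over "
          f"{evanescent_decay_length_nm(period, WAVELENGTH):.1f} nm")
```

Periods just under the wavelength reach out about a hundred nanometers, consistent with the "couple hundred nanometers" rule of thumb. An 80 nm period fades within roughly 13 nm.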

The other part you describe sounds like lensless imaging. Put your emissive specimen on the camera chip directly and use some knowledge of the chip structure and lots of math to yield an image.

Neat. I haven’t had a chance to read the article, but I want to point out that the sort of lasers used in research don’t tend to be of the $20 eBay variety. Yes, it’s 1 W, but I’d be surprised if they’re using a cheap multimode laser. Typically, where very high resolution is required, you want single-mode, single-frequency operation. That is achieved with thermal control, feedback, and expensive filters like fiber Bragg gratings or other exotics. The diode modules alone for these start at around a thousand bucks.

Checking the paper, they couple into a multimode fiber, but I’m not really sure how important frequency stability is. They mention random, diffraction-limited light into the fiber. The laser they are using can be found used on eBay for $16,000.