Abstract

Gate-based quantum computations are essential for realizing near-term quantum computer architectures. A gate-model quantum neural network (QNN) is a QNN implemented on a gate-model quantum computer, realized via a set of unitaries with associated gate parameters. Here, we define a training optimization procedure for gate-model QNNs. By deriving the environmental attributes of the gate-model quantum network, we prove the constraint-based learning models. We show that the optimal learning procedures differ depending on whether side information is available in different directions and whether side information is accessible about the previous running sequences of the gate-model QNN. The results are particularly convenient for gate-model quantum computer implementations.

Introduction

Gate-based quantum computers represent an implementable way to realize experimental quantum computations on near-term quantum computer architectures1,2,3,4,5,6,7,8,9,10,11,12,13. In a gate-model quantum computer, the transformations are realized by quantum gates, such that each quantum gate is represented by a unitary operation14,15,16,17,18,19,20,21,22,23,24,25,26. An input quantum state is evolved through a sequence of unitary gates and the output state is then assessed by a measurement operator14,15,16,17. Focusing on gate-model quantum computer architectures is motivated by the successful demonstration of the practical implementations of gate-model quantum computers7,8,9,10,11, and several important developments for near-term gate-model quantum computations are currently in progress. Another important aspect is the application of gate-model quantum computations in the near-term quantum devices of the quantum Internet27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43.

A quantum neural network (QNN) is formulated by a set of quantum operations and connections between the operations with a particular weight parameter14,25,26,44,45,46,47. Gate-model QNNs refer to QNNs implemented on gate-model quantum computers14. As a corollary, gate-model QNNs have crucial experimental importance since these network structures are realizable on near-term quantum computer architectures. The core of a gate-model QNN is a sequence of unitary operations. A gate-model QNN consists of a set of unitary operations and communication links that are used for the propagation of quantum and classical side information in the network for the related calculations of the learning procedure. The unitary transformations represent quantum gates parameterized by a variable referred to as the gate parameter (weight). The inputs of the gate-model QNN structure are a computational basis state and an auxiliary quantum system that serves as a readout state in the output measurement phase. Each input state is associated with a particular label. In the modeled learning problem, the training of the gate-model QNN aims to learn the values of the gate parameters associated with the unitaries so that the predicted label is close to the true label value of the input (i.e., the difference between the predicted and true values is minimal). This problem, therefore, formulates an objective function that is subject to minimization. In this setting, the training of the gate-model QNN aims to learn the label of a general quantum state.

In artificial intelligence, machine learning4,5,6,19,23,45,46,48,49,50,51,52,53 utilizes statistical methods with measured data to achieve a desired value of an objective function associated with a particular problem. A learning machine is an abstract computational model for the learning procedures. A constraint machine is a learning machine that works with constraints, such that the constraints are characterized and defined by the actual environment48.

The proposed model of a gate-model quantum neural network assumes that quantum information can be propagated only in the forward direction, from the input to the output, and that classical side information is available via classical links. The classical side information is processed further via a post-processing unit after the measurement of the output. In the general gate-model QNN scenario, it is assumed that classical side information can be propagated arbitrarily in the network structure, and that there is no available side information about the previous running sequences of the gate-model QNN structure. The situation changes if side information propagates only in the backward direction and side information about the previous running sequences of the network is also available. The resulting network model is called a gate-model recurrent quantum neural network (RQNN).

Here, we define a constraint-based training optimization method for gate-model QNNs and RQNNs, and propose the computational models from the attributes of the gate-model quantum network environment. We show that these structural distinctions lead to significantly different computational models and learning optimization. By using the constraint-based computational models of the QNNs, we prove that the optimal learning methods for nonrecurrent and recurrent gate-model QNNs differ. Finally, we characterize optimal learning procedures for each variant of gate-model QNNs.

The novel contributions of our manuscript are as follows.

- We study the computational models of nonrecurrent and recurrent gate-model QNNs realized via an arbitrary number of unitaries.
- We define learning methods for nonrecurrent and recurrent gate-model QNNs.
- We prove the optimal learning for nonrecurrent and recurrent gate-model QNNs.

This paper is organized as follows. In Section 2, the related works are summarized. Section 3 defines the system model and the parameterization of the learning optimization problem. Section 4 proves the computational models of gate-model QNNs. Section 5 provides learning optimization results. Finally, Section 6 concludes the paper. Supplemental information is included in the Appendix.

Related Works

Gate-model quantum computers

A theoretical background on the realizations of quantum computations in a gate-model quantum computer environment can be found in15 and16. For a summary on the related references1,2,3,13,15,16,17,54,55, we suggest56.

Quantum neural networks

In14, the formalism of a gate-model quantum neural network is defined. The gate-model quantum neural network is a quantum neural network implemented on a gate-model quantum computer. A particular problem analyzed by the authors is the classification of classical data sets that consist of bitstrings with binary labels.

In44, the authors studied the subject of quantum deep learning. As the authors found, the application of quantum computing can reduce the time required to train a deep restricted Boltzmann machine. The work also concluded that quantum computing provides a strong framework for deep learning, and the application of quantum computing can lead to significant performance improvements in comparison to classical computing.

In45, the authors defined a quantum generalization of feedforward neural networks. In the proposed system model, the classical neurons are generalized to being quantum reversible. As the authors showed, the defined quantum network can be trained efficiently using gradient descent to perform quantum generalizations of classical tasks.

In46, the authors defined a model of a quantum neuron to perform machine learning tasks on quantum computers. The authors proposed a small quantum circuit to simulate neurons with threshold activation. As the authors found, the proposed quantum circuit realizes a “quantum neuron”. The authors showed an application of the defined quantum neuron model in feedforward networks. The work concluded that the quantum neuron model can learn a function if trained with a superposition of inputs and the corresponding output. The proposed training method also suffices to learn the function on all individual inputs separately.

In25, the authors studied the structure of artificial quantum neural network. The work focused on the model of quantum neurons and studied the logical elements and tests of convolutional networks. The authors defined a model of an artificial neural network that uses quantum-mechanical particles as a neuron, and set a Monte-Carlo integration method to simulate the proposed quantum-mechanical system. The work also studied the implementation of logical elements based on introduced quantum particles, and the implementation of a simple convolutional network.

In26, the authors defined the model of a universal quantum perceptron as efficient unitary approximators. The authors studied the implementation of a quantum perceptron with a sigmoid activation function as a reversible many-body unitary operation. In the proposed system model, the response of the quantum perceptron is parameterized by the potential exerted by other neurons. The authors showed that the proposed quantum neural network model is a universal approximator of continuous functions, with at least the same power as classical neural networks.

Quantum machine learning

In57, the authors analyzed a Markov process connected to a classical probabilistic algorithm58. A performance evaluation also has been included in the work to compare the performance of the quantum and classical algorithm.

In19, the authors studied quantum algorithms for supervised and unsupervised machine learning. This particular work focuses on the problem of cluster assignment and cluster finding via quantum algorithms. As a main conclusion of the work, via the utilization of quantum computers and quantum machine learning, an exponential speed-up can be reached over classical algorithms.

In20, the authors defined a method for the analysis of an unknown quantum state. The authors showed that it is possible to perform “quantum principal component analysis” by creating quantum coherence among different copies, and the relevant attributes can be revealed exponentially faster than is possible by any existing algorithm.

In21, the authors studied the application of a quantum support vector machine in Big Data classification. The authors showed that a quantum version of the support vector machine (optimized binary classifier) can be implemented on a quantum computer. As the work concluded, the complexity of the quantum algorithm is only logarithmic in the size of the vectors and the number of training examples, which provides a significant advantage over classical support vector machines.

In22, the problem of quantum-based analysis of big data sets is studied by the authors. As the authors concluded, the proposed quantum algorithms provide an exponential speedup over classical algorithms for topological data analysis.

The problem of quantum generative adversarial learning is studied in51. In generative adversarial networks a generator entity creates statistics for data that mimics those of a valid data set, and a discriminator unit distinguishes between the valid and non-valid data. As a main conclusion of the work, a quantum computer allows us to realize quantum adversarial networks with an exponential advantage over classical adversarial networks.

In54, super-polynomial and exponential improvements for quantum-enhanced reinforcement learning are studied.

In55, the authors proposed strategies for quantum computing molecular energies using the unitary coupled cluster ansatz.

The authors of56 provided demonstrations of quantum advantage in machine learning problems.

In57, the authors study the subject of quantum speedup in machine learning. As a particular problem, the work focuses on finding Boolean functions for classification tasks.

System Model

Gate-model quantum neural network

Definition 1 A QNNQG is a quantum neural network (QNN) implemented on a gate-model quantum computer with a quantum gate structure QG. It contains quantum links between the unitaries and classical links for the propagation of classical side information. In a QNNQG, all quantum information propagates forward from the input to the output, while classical side information can propagate arbitrarily (forward and backward) in the network. In a QNNQG, there is no available side information about the previous running sequences of the structure.

Using the framework of14, a QNNQG is formulated by a collection of L unitary gates, such that the i-th unitary gate Ui(θi), i = 1, …, L, is

\[{U}_{i}({\theta }_{i})=\exp (-i{\theta }_{i}P),\tag{1}\]

where P is a generalized Pauli operator formulated by a tensor product of Pauli operators {X, Y, Z}, while θi is referred to as the gate parameter associated with Ui(θi).

In QNNQG, a given unitary gate Ui(θi) sequentially acts on the output of the previous unitary gate Ui−1(θi−1), without any nonlinearities14. The classical side information of QNNQG is used in calculations related to error derivation and gradient computations, such that side information can propagate arbitrarily in the network structure.

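The sequential application of the L unitaries formulates a unitary operator \(U(\overrightarrow{\theta })\) as

\[U(\overrightarrow{\theta })={U}_{L}({\theta }_{L}){U}_{L-1}({\theta }_{L-1})\cdots {U}_{1}({\theta }_{1}),\tag{2}\]

where Ui(θi) identifies the i-th unitary gate, and \(\overrightarrow{\theta }\) is the gate parameter vector

\[\overrightarrow{\theta }={({\theta }_{1},\ldots ,{\theta }_{L})}^{T}.\tag{3}\]

At (2), the evolution of the system of QNNQG for a particular input system \(|\psi ,\varphi \rangle \) is

\[|Y\rangle =U(\overrightarrow{\theta })|\psi \rangle |\varphi \rangle ,\tag{4}\]

where \(|Y\rangle \) is the (n + 1)-length output quantum system, and \(|\psi \rangle =|z\rangle \) is a computational basis state, where z is an n-length string

\[z={z}_{1}{z}_{2}\ldots {z}_{n},\tag{5}\]

where each zi represents a classical bit with values

\[{z}_{i}\in \{-1,1\},\tag{6}\]

while the (n + 1)-th quantum state is initialized as

\[|\varphi \rangle =|1\rangle ,\tag{7}\]

and is referred to as the readout quantum state.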
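The construction above can be sketched numerically. The following minimal NumPy example is an illustration under stated assumptions, not the authors' implementation: each gate is taken to be of the exponential form exp(−iθP) with P a Pauli string (so that P² = I), a bit value +1 is encoded as |0⟩ and −1 as |1⟩, the readout qubit is the last one, and the helper names (`pauli_string`, `gate`, `input_state`, `qnn_output`) are hypothetical.

```python
import numpy as np

# Single-qubit Pauli matrices and the identity.
PAULIS = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}

def pauli_string(label):
    """Tensor product of single-qubit Paulis, e.g. "XIZ" (hypothetical helper)."""
    op = np.eye(1, dtype=complex)
    for ch in label:
        op = np.kron(op, PAULIS[ch])
    return op

def gate(theta, label):
    """exp(-i*theta*P); since P @ P = I this equals cos(theta) I - i sin(theta) P."""
    p = pauli_string(label)
    return np.cos(theta) * np.eye(p.shape[0]) - 1j * np.sin(theta) * p

def input_state(z):
    """|z, 1>: assumed encoding +1 -> |0>, -1 -> |1>, readout qubit in |1>."""
    bits = [0 if zi == 1 else 1 for zi in z] + [1]
    index = int("".join(map(str, bits)), 2)
    state = np.zeros(2 ** len(bits), dtype=complex)
    state[index] = 1.0
    return state

def qnn_output(thetas, labels, z):
    """Apply U_1, then U_2, ..., then U_L to |z, 1> and return |Y>."""
    state = input_state(z)
    for theta, label in zip(thetas, labels):
        state = gate(theta, label) @ state
    return state

thetas = [0.3, -1.1, 0.7]
labels = ["XIZ", "ZYI", "IXX"]  # n = 2 data qubits plus one readout qubit
y = qnn_output(thetas, labels, z=(1, -1))
print(np.linalg.norm(y))  # the evolution is unitary, so the norm is preserved
```

Because every step is a matrix-vector product, the whole evolution is linear in the input state; only the gate parameters in `thetas` are trainable.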

Objective function

The \(f(\overrightarrow{\theta })\) objective function subject to minimization is defined for a QNNQG as

where \( {\mathcal L} ({x}_{0},\tilde{l}(z))\) is the loss function14, defined as

where \(\tilde{l}(z)\) is the predicted value of the binary label

of the string z, defined as14

where Yn+1 ∈ {−1, 1} is a measured Pauli operator on the readout quantum state (7), while x0 is as

The \(\tilde{l}\) predicted value in (11) is a real number between −1 and 1, while the label l(z) and Yn+1 are real numbers −1 or 1. Precisely, the \(\tilde{l}\) predicted value as given in (11) represents an average of several measurement outcomes if Yn+1 is measured via R output system instances |Y〉(r)-s, r = 1, …, R14.
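The relation between the real-valued predicted label and the individual ±1 measurement outcomes can be illustrated with a small simulation. This is a sketch under assumptions that are not taken from the text: the measured operator is taken to be the Pauli Y on the readout (last) qubit, the predicted label is its expectation value, the loss is assumed to have the form 1 − l(z)·l̃(z) in the style of ref. 14, and the two-qubit example state and all function names are hypothetical.

```python
import numpy as np

Y_PAULI = np.array([[0, -1j], [1j, 0]], dtype=complex)

def readout_expectation(state):
    """<state| I (x) Y |state>, with Y acting on the last (readout) qubit."""
    dim = state.shape[0]
    op = np.kron(np.eye(dim // 2, dtype=complex), Y_PAULI)
    return float(np.real(np.vdot(state, op @ state)))

def sampled_label(state, rounds, rng):
    """Average of `rounds` simulated +/-1 outcomes of Y on the readout qubit."""
    p_plus = (1.0 + readout_expectation(state)) / 2.0
    p_plus = min(1.0, max(0.0, p_plus))  # guard against rounding error
    outcomes = rng.choice([1, -1], size=rounds, p=[p_plus, 1.0 - p_plus])
    return float(outcomes.mean())

def loss(true_label, predicted):
    """Assumed loss 1 - l(z) * l~(z); zero when the prediction equals the label."""
    return 1.0 - true_label * predicted

# One data qubit plus readout: exp(-i*theta*Z(x)X) applied to |0, 1>.
theta = np.pi / 8
zx = np.kron(np.diag([1, -1]).astype(complex),
             np.array([[0, 1], [1, 0]], dtype=complex))
u = np.cos(theta) * np.eye(4) - 1j * np.sin(theta) * zx
state = u @ np.array([0, 1, 0, 0], dtype=complex)

exact = readout_expectation(state)
estimate = sampled_label(state, rounds=2000, rng=np.random.default_rng(0))
print(exact, estimate, loss(+1, exact))
```

For this particular toy circuit the exact expectation equals sin(2θ), so the sampled average converges to that value as the number of rounds R grows.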

The learning problem for a QNNQG is, therefore, as follows. At an \({{\mathscr{S}}}_{T}\) training set formulated via R input strings and labels

where r refers to the r-th measurement round and R is the total number of measurement rounds, the goal is therefore to find the gate parameters (3) of the L unitaries of QNNQG, such that \(f(\overrightarrow{\theta })\) in (8) is minimal.

Recurrent gate-model quantum neural network

Definition 2 An RQNNQG is a QNN implemented on a gate-model quantum computer with a quantum gate structure QG, such that the connections of RQNNQG form a directed graph along a sequence. It contains quantum links between the unitaries and classical links for the propagation of classical side information. In an RQNNQG, all quantum information propagates forward, while classical side information can propagate only in the backward direction. In an RQNNQG, side information is available about the previous running sequences of the structure.

The classical side information of RQNNQG is used in error derivation and gradient computations, such that side information can propagate only in the backward direction. Similar to the QNNQG case, in an RQNNQG, a given i-th unitary Ui(θi) acts on the output of the previous unitary Ui−1(θi−1). Thus, the quantum evolution of the RQNNQG contains no nonlinearities14. It follows that, for an RQNNQG network, the objective function can be defined in the same way as in (8). On the other hand, the structural differences between QNNQG and RQNNQG allow the characterization of different computational models for the description of the learning problem. The structural differences also lead to different optimal learning methods for the QNNQG and RQNNQG structures, as will be shown in Section 4 and Section 5.

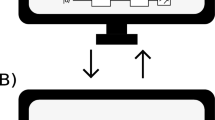

Comparative representation

For a simple graphical representation, the schematic models of a QNNQG and an RQNNQG for the (r − 1)-th and r-th measurement rounds are compared in Fig. 1. The (n + 1)-length input systems are depicted by \(|{\psi }_{r-1}\rangle |1\rangle \) and \(|{\psi }_{r}\rangle |1\rangle \), while the output systems are denoted by \(|{Y}_{r-1}\rangle \) and \(|{Y}_{r}\rangle \). The results of the M measurement operator in the (r − 1)-th and r-th measurement rounds are denoted by \({Y}_{n+1}^{(r-1)}\) and \({Y}_{n+1}^{(r)}\). In Fig. 1(a), the structure of a QNNQG is depicted for the (r − 1)-th and r-th measurement rounds. In Fig. 1(b), the structure of an RQNNQG is illustrated. In a QNNQG, side information about the previous, (r − 1)-th measurement round is not available in a particular r-th measurement round. For an RQNNQG, side information is available about the (r − 1)-th measurement round (depicted by the dashed gray arrows) in a particular r-th measurement round. The side information in the RQNNQG setting refers to information about the gate parameters and the measurement results of the (r − 1)-th measurement round.

Schematic representation of a QNNQG and an RQNNQG in the (r − 1)-th and r-th measurement rounds. The (n + 1)-length input systems of the (r − 1)-th and r-th measurement rounds are depicted by \(|{\psi }_{r-1}\rangle |1\rangle \) and \(|{\psi }_{r}\rangle |1\rangle \), the output systems are \(|{Y}_{r-1}\rangle \) and \(|{Y}_{r}\rangle \). The results of the M measurement operator in the (r − 1)-th and r-th measurement rounds are denoted by \({Y}_{n+1}^{(r-1)}\) and \({Y}_{n+1}^{(r)}\). (a) In a QNNQG, for an r-th measurement round, side information is not available about the previous (r − 1)-th measurement round. (b) In an RQNNQG, side information is available about the previous (r − 1)-th measurement round in an r-th round.

Parameterization

Constraint machines

The tasks of machine learning can be modeled via its mathematical framework and the constraints of the environment4,5,6. A \({\mathscr{C}}\) constraint machine is a learning machine working with constraints48. A constraint machine can be formulated by a particular function f or via some elements of a functional space \( {\mathcal F} \). The constraints model the attributes of the environment of \({\mathscr{C}}\).

The learning problem of a \({\mathscr{C}}\) constraint machine can be represented via a \({\mathscr{G}}=(V,S)\) environmental graph48,59,60,61,62. The \({\mathscr{G}}\) environmental graph is a directed acyclic graph (DAG), with a set V of vertexes and a set S of arcs. The vertexes of \({\mathscr{G}}\) model associated features, while the arcs between the vertexes describe the relations of the vertexes.

The \({\mathscr{G}}\) environmental graph formalizes factual knowledge via modeling the relations among the elements of the environment48. In the environmental graph representation, the \({\mathscr{C}}\) constraint machine has to decide based on the information associated with the vertexes of the graph.

For any vertex v of V, a perceptual space element x, and its identifier 〈x〉 that addresses x in the computational model can be defined as a pair

where \(x\in {\mathscr{X}}\) is an element (vector) of the perceptual space \({\mathscr{X}}\subset {{\mathbb{C}}}^{d}\). Assuming that features are missing, the ◊ symbol can be used. Therefore, \({\mathscr{X}}\) is initialized as \({{\mathscr{X}}}_{0}\),

The environment is populated by individuals, and the \( {\mathcal I} \) individual space is defined via V and \({{\mathscr{X}}}_{0}\) as

such that the existing features are associated with a subset \(\tilde{V}\) of V.

The features can be associated with the 〈x〉 identifier via a \({f}_{{\mathscr{P}}}\) perceptual map as

If the condition

holds, then \({f}_{{\mathscr{P}}}\) is yielded as

A given individual \(\iota \in {\mathcal I} \) is defined as a feature vector \(x\in {\mathscr{X}}\). An \(\iota \in {\mathcal I} \) individual of the individual space \( {\mathcal I} \) is defined as

where + is the sum operator in \({{\mathbb{C}}}^{d}\), ¬ is the negation operator, while Υ is a constraint as

where \({{\mathscr{X}}}_{0}\) is given in (15). Thus, from (20), an individual ι is a feature vector x of \({\mathscr{X}}\) or a vertex v of \({\mathscr{G}}\).

Let \({\iota }^{\ast }\in {\mathcal I} \) be a specific individual, and let f be an agent represented by the function \(f: {\mathcal I} \to {{\mathbb{C}}}^{n}\). Then, at a given environmental graph \({\mathscr{G}}\), the \({\mathscr{C}}\) constraint machine is defined via function f as a machine in which the learning and inference are represented via enforcing procedures on constraints \({C}_{{\iota }^{\ast }}\) and Cι, such that for a \({\mathscr{C}}\) constraint machine the learning procedure requires the satisfaction of the constraints over all \({ {\mathcal I} }^{\ast }\), while in the inference the satisfaction of the constraint is enforced over the given \({\iota }^{\ast }\in {\mathcal I} \)48, by theory. Thus, \({\mathscr{C}}\) is defined in a formalized manner, as

where \(\tilde{ {\mathcal I} }\) is a subset of \( {\mathcal I} \), ι* refers to a specific individual, vertex or function, χ(⋅) is a compact constraint function, while v* and f*(ι*) refer to the vertex and function at ι*, respectively.

Calculus of variations

Some elements from the calculus of variations63,64 are utilized in the learning optimization procedure.

Euler-Lagrange Equations: The Euler-Lagrange equations are second-order differential equations whose solutions are the functions for which a given differentiable functional is stationary. They are useful in optimization problems since a differentiable functional is stationary at its local maxima and minima63. As a corollary, they can also be used in the problems of machine learning.
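For reference, in the simplest one-dimensional case the stationarity condition takes the following familiar form. The notation is assumed here for illustration only (a functional J of a function y(x) on an interval [a, b], with integrand F) and does not appear elsewhere in the text.

```latex
J[y] = \int_{a}^{b} F\bigl(x, y(x), y'(x)\bigr)\, dx,
\qquad
\frac{\partial F}{\partial y} - \frac{d}{dx}\,\frac{\partial F}{\partial y'} = 0 .
```

A function y that extremizes J must satisfy the equation on the right, which is second order in y whenever F depends on y′ nonlinearly.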

Hessian Matrix: A Hessian matrix H is a square matrix of second-order partial derivatives of a scalar-valued function, or scalar field63. In theory, it describes the local curvature of a function of many variables. In a machine-learning setting, it is a useful tool to derive some attributes and critical points of loss functions.
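As a small illustration of how the Hessian characterizes a critical point of a loss function, the sketch below estimates it by central finite differences and inspects the signs of its eigenvalues. The quadratic `toy_loss` and the helper `numerical_hessian` are hypothetical and chosen only so that the result is easy to check by hand.

```python
import numpy as np

def numerical_hessian(f, x, h=1e-4):
    """Central-difference estimate of the Hessian of a scalar function f at x."""
    x = np.asarray(x, dtype=float)
    n = x.size
    hess = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            ei = np.zeros(n)
            ej = np.zeros(n)
            ei[i] = h
            ej[j] = h
            hess[i, j] = (f(x + ei + ej) - f(x + ei - ej)
                          - f(x - ei + ej) + f(x - ei - ej)) / (4 * h * h)
    return hess

def toy_loss(w):
    """Hypothetical quadratic loss whose gradient vanishes at the origin."""
    return w[0] ** 2 + 3 * w[0] * w[1] + 2 * w[1] ** 2

hess = numerical_hessian(toy_loss, [0.0, 0.0])
eigenvalues = np.linalg.eigvalsh(hess)

# All positive -> local minimum, all negative -> local maximum,
# mixed signs -> saddle point.
if np.all(eigenvalues > 0):
    kind = "minimum"
elif np.all(eigenvalues < 0):
    kind = "maximum"
else:
    kind = "saddle"
print(hess, eigenvalues, kind)
```

Here the analytic Hessian is [[2, 3], [3, 4]], whose determinant is negative, so the origin is a saddle point rather than a minimum.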

Constraint-based Computational Model

In this section, we derive the computational models of the QNNQG and RQNNQG structures.

Environmental graph of a gate-model quantum neural network

Proposition 1 The \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}=(V,S)\) environmental graph of a QNNQG is a DAG, where V is a set of vertexes, in our setting defined as

where \({{\mathscr{S}}}_{in}\) is the input space, \({\mathscr{U}}\) is the space of unitaries, \({\mathscr{Y}}\) is the output space, and S is a set of arcs.

Let \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) be an environmental graph of QNNQG, and let \({v}_{{U}_{i}}\) be a vertex, such that \({v}_{{U}_{i}}\in V\) is related to the unitary Ui(θi), where index i = 0 is associated with the \(|z\mathrm{,\; 1}\rangle \) input system with vertex v0. Then, let \({v}_{{U}_{i}}\) and \({v}_{{U}_{j}}\) be connected vertices via directed arc sij, sij ∈ S, such that a particular θij gate parameter is associated with the forward directed arc (Note: the notation Uj(θij) refers to the selection of θj for the unitary Uj to realize the operation Ui(θi)Uj(θj), i.e., the application of Uj(θj) on the output of Ui(θi) at a particular gate parameter θj), as

such that arc s0j is associated with θ0j = θj.

Then a given state \({x}_{{U}_{i}({\theta }_{i})}\) of \({\mathscr{X}}\) associated with Ui(θi) is defined as

where \({v}_{{U}_{i}}\) is a label for unitary Ui in the environmental graph \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) (serves as an identifier in the computational structure of (25)), while parameter \({a}_{{U}_{i}({\theta }_{i})}\) is defined for a Ui(θi) as

where Ξ(i) refers to the parent set of \({v}_{{U}_{i}}\), Ui(θhi) refers to the selection of θi for unitary Ui for a particular input from Uh(θh), while \({b}_{{U}_{i}({\theta }_{i})}\) is the bias relative to \({v}_{{U}_{i}}\).

Applying a f∠ topological ordering function on \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) yields an ordered graph structure \({f}_{\angle }({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}})\) of the L unitaries. Thus, a given output \(|Y\rangle \) of QNNQG can be rewritten in a compact form as

where the term \({x}_{0}\in {{\mathscr{S}}}_{in}\) is associated with the input system as defined in (12).

A particular state \({x}_{{U}_{l}({\theta }_{l})}\), l = 1, …, L is evaluated in function of \({x}_{{U}_{l-1}({\theta }_{l-1})}\) as

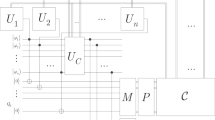

The environmental and ordered graphs of a gate-model quantum neural network are illustrated in Fig. 2. In Fig. 2(a) the \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) environmental graph of a QNNQG is depicted, and the ordered graph \({f}_{\angle }({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}})\) is shown in Fig. 2(b).

(a) The \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) environmental graph of a QNNQG, with L unitaries. The input state of the QNNQG is \(|\psi ,1\rangle \). A unitary Ui(θi) is represented by a vertex \({v}_{{U}_{i}}\) in \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\). The vertices \({v}_{{U}_{i}}\) and \({v}_{{U}_{j}}\) of unitaries Ui(θi) and Uj(θj) are connected by directed arcs sij. The gate parameters θij = θj are associated with sij, while \({{\mathscr{S}}}_{in}\) is the input space, \({\mathscr{U}}\) is the space of L unitaries, and \({\mathscr{Y}}\) is the output space. Operator M is a measurement on the (n + 1)-th state (readout quantum state), and Yn+1 is a Pauli operator measured on the readout state (classical links are not depicted). (b) The compacted ordered graph \({f}_{\angle }({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}})\) of QNNQG. The output is \(|Y\rangle =U(\overrightarrow{\theta }){x}_{0}\), where \({x}_{0}=|z,1\rangle \) and \(U(\overrightarrow{\theta })={\prod }_{l=1}^{L}{U}_{l}({\theta }_{l})\) (classical links are not depicted).
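The role of the topological ordering can be sketched in a few lines. The example below is a toy illustration rather than the construction used in the proofs: it assumes a chain-shaped environmental graph with three unitaries, hypothetical vertex names (`v0`, `vU1`, …), and a single-qubit stand-in `rotation` for each Ul(θl). It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

import numpy as np

# Environmental graph as "vertex -> set of parents"; v0 is the input vertex.
parents = {"v0": set(), "vU1": {"v0"}, "vU2": {"vU1"}, "vU3": {"vU2"}}
order = list(TopologicalSorter(parents).static_order())

def rotation(theta):
    """Toy single-qubit stand-in for U_l(theta_l): exp(-i*theta*X)."""
    x = np.array([[0, 1], [1, 0]], dtype=complex)
    return np.cos(theta) * np.eye(2) - 1j * np.sin(theta) * x

thetas = {"vU1": 0.2, "vU2": 0.5, "vU3": -0.4}
x0 = np.array([0, 1], dtype=complex)  # stand-in for the input system

# Walk the ordered graph: each state is computed from the previous one.
state = x0
for v in order:
    if v != "v0":
        state = rotation(thetas[v]) @ state

# The same output from the compact form, with U_1 applied first.
u_total = rotation(thetas["vU3"]) @ rotation(thetas["vU2"]) @ rotation(thetas["vU1"])
print(order, np.allclose(state, u_total @ x0))
```

For a chain the ordering is unique, so traversing the ordered graph and applying the compact operator to x0 give the same output state.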

Computational model of gate-model quantum neural networks

Theorem 1

The computational model of a QNNQG is a \({\mathscr{C}}({{\rm{QNN}}}_{QG})\) constraint machine with linear transition functions fT(QNNQG).

Proof. Let \({\mathscr{G}}({{\rm{QNN}}}_{QG})=(V,S)\) be the environmental graph of a QNNQG, and assume that the number of types of the vertexes is p. Then, the vertex set V can be expressed as a collection

where Vi identifies a set of vertexes and p is the total number of the Vi sets, such that \({V}_{i}\cap {V}_{j}=\varnothing \) if i ≠ j48. For a vertex v ∈ Vi, an \({f}_{T}:{{\mathbb{C}}}^{{{\rm{\dim }}}_{in}}\to {{\mathbb{C}}}^{{{\rm{\dim }}}_{out}}\) transition function48 can be defined as

where \({{\mathscr{X}}}_{{V}_{i}}\) is the perceptual space \({\mathscr{X}}\) of Vi; xv is an element of \({{\mathscr{X}}}_{{V}_{i}}\) associated with a unitary Uv(θv); \({\mathscr{Z}}\) is the state space; \({{\mathscr{Z}}}_{{V}_{i}}\subset {{\mathbb{C}}}^{{\rm{\dim }}({{\mathscr{Z}}}_{{V}_{i}})}\) is the state space of Vi, where \({\rm{\dim }}({{\mathscr{Z}}}_{{V}_{i}})\) is the dimension of the space \({{\mathscr{Z}}}_{{V}_{i}}\); Γ(v) refers to the children set of v; |Γ(v)| is the cardinality of set Γ(v); \(\gamma \in {\mathscr{Z}}\) is a state variable in the state space \({\mathscr{Z}}\) that serves as side information to process the vertices v of V in \({\mathscr{G}}({{\rm{QNN}}}_{QG})\), while \({\gamma }_{{\rm{\Gamma }}(v)}\in {{\mathscr{Z}}}_{{V}_{i}}^{|{\rm{\Gamma }}(v)|}\subset {{\mathbb{C}}}^{|{\rm{\Gamma }}(v)|}\) and \({\gamma }_{{\rm{\Gamma }}(v)}=({\gamma }_{{\rm{\Gamma }}(v),1},\cdots ,{\gamma }_{{\rm{\Gamma }}(v),|{\rm{\Gamma }}(v)|})\), by theory48,62. Thus, the fT transition function in (30) is a complex-valued function that maps an input pair (γ, x) from the space of \({\mathscr{X}}\times {\mathscr{Z}}\) to the state space \({\mathscr{Z}}\).

Similarly, for any Vi, an \({f}_{O}:{{\mathbb{C}}}^{{{\rm{\dim }}}_{in}}\to {{\mathbb{C}}}^{{{\rm{\dim }}}_{out}}\) output function48 can be defined as

where \({{\mathscr{Y}}}_{{V}_{i}}\) is the output space \({\mathscr{Y}}\), and γv is a state variable associated with v, \({\gamma }_{v}\in {{\mathscr{Z}}}_{{V}_{i}}\), such that γv = γ0 if \({\rm{\Gamma }}(v)=\varnothing \). The fO output function in (31) is therefore a complex-valued function that maps an input pair (γ, x) from the space of \({\mathscr{X}}\times {\mathscr{Z}}\) to the output space \({\mathscr{Y}}\).

From (30) and (31), it follows that for any Vi, there exists the ϕ(Vi) associated function-pair as

Let us specify the generalized functions of (30) and (31) for a QNNQG.

Let \(U(\overrightarrow{\theta })\) of QNNQG be defined as given in (2). Since in QNNQG, a given i-th unitary Ui(θi) acts on the output of the previous unitary Ui−1(θi−1), the network contains no nonlinearities14. As a corollary, the state transition function fT(QNNQG) in (30) is also linear for a QNNQG.
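The linearity claim can be checked numerically. The sketch below assumes nothing about the specific gates: it draws two random unitaries (via a QR decomposition, a choice made here only for illustration), composes them as two sequentially applied gates would be, and verifies that the resulting map respects superposition. The name `random_unitary` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)

def random_unitary(dim):
    """Random unitary from the QR decomposition of a complex Gaussian matrix."""
    a = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    q, _ = np.linalg.qr(a)
    return q

u = random_unitary(4) @ random_unitary(4)  # two gates applied in sequence
psi = rng.normal(size=4) + 1j * rng.normal(size=4)
phi = rng.normal(size=4) + 1j * rng.normal(size=4)
a, b = 0.3 - 0.2j, 1.5j

lhs = u @ (a * psi + b * phi)          # act on the combination
rhs = a * (u @ psi) + b * (u @ phi)    # combine the individual images
print(np.allclose(lhs, rhs))
```

Since a product of unitaries is again a linear (and unitary) map, no choice of gate parameters can introduce a nonlinearity between the gates.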

Let \(|{\gamma }_{v}\rangle \) be the quantum state associated with γv state variable of a given v. Then, the constraints on the transition function and output function of a QNNQG can be evaluated as follows.

Let fT(QNNQG) be the transition function of a QNNQG defined for a given v ∈ V of \({\mathscr{G}}({{\rm{QNN}}}_{QG})\) via (30) as

The FO(QNNQG) output function of a QNNQG for a given v of \({\mathscr{G}}({{\rm{QNN}}}_{QG})\) via (31) is

Since fT(QNNQG) in (33) and FO(QNNQG) in (34) correspond with the data flow computational scheme of a QNNQG with linear transition functions, (33) and (34) represent an expression of the constraints of QNNQG. These statements can be formulated in a compact form.

Let ζv be a constraint on fT(QNNQG) of QNNQG as

Thus, the fT(QNNQG) transition function is constrained as

With respect to the output function, let φv be a constraint on FO(QNNQG) of QNNQG as

where ⚬ is the composition operator, such that \((f\circ g)(x)=f(g(x))\), \({\wp }_{v}\) is therefore another constraint as \({\wp }_{v}({F}_{O}({{\rm{QNN}}}_{QG}))=0\).

Then let πv be a compact constraint on fT(QNNQG) and FO(QNNQG) defined via constraints (35) and (37) as

It can be verified that a learning machine that enforces the constraint in (38) is, in fact, a constraint machine. As a corollary, the constraints (33) and (34), along with the compact constraint (38), define a \({\mathscr{C}}({{\rm{QNN}}}_{QG})\) constraint machine for a QNNQG with linear functions fT(QNNQG) and FO(QNNQG).■

Diffusion machine

Let \({\mathscr{C}}\) be the constraint machine with linear transition function fT(γΓ(v), xv), and let §v be a state variable such that \(\forall \,v\in V\)

and let FO(γv, xv) be the output function of \({\mathscr{C}}\), such that ∀v ∈ V

where cv is a constraint.

Then, the \({\mathscr{C}}\) constraint machine is a \({\mathscr{D}}\) diffusion machine48, if only \({\mathscr{C}}\) enforces the constraint \({C}_{{\mathscr{D}}}\), as

Computational model of recurrent gate-model quantum neural networks

Theorem 2

The computational model of an RQNNQG is a \({\mathscr{D}}({{\rm{RQNN}}}_{QG})\) diffusion machine with linear transition functions fT(RQNNQG).

Proof. Let \({\mathscr{C}}({{\rm{RQNN}}}_{QG})\) be the constraint machine of RQNNQG with linear transition function fT(RQNNQG) = fT(γΓ(v), xv). Using the \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) environmental graph, let Λv be a constraint on fT(RQNNQG) of RQNNQG, v ∈ V as

where \(|{\gamma }_{v}\rangle \) is the quantum state associated with γv state variable of a given v of RQNNQG. With respect to the output function FO(RQNNQG) = FO(γv, xv) of RQNNQG, let ωv be a constraint on FO(RQNNQG) of RQNNQG, as

where Ωv is another constraint as Ωv(FO(RQNNQG)) = 0.

Since RQNNQG is a recurrent network, for all v ∈ V of \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\), a diffuse constraint λ(Q(x)) can be defined via constraints (42) and (43), as

where x = (x1, …, x|V|), and Q(x) = (Q(x1), …, Q(x|V|)) is a function that maps all vertexes of \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\). Therefore, in the presence of (44), the relation

follows for an RQNNQG, where \({\mathscr{D}}({{\rm{RQNN}}}_{QG})\) is the diffusion machine of RQNNQG. This is because a constraint machine \({\mathscr{C}}({{\rm{RQNN}}}_{QG})\) that satisfies (44) is, in fact, a diffusion machine \({\mathscr{D}}({{\rm{RQNN}}}_{QG})\); see also (41).

The fT(RQNNQG) state transition function can be defined for a \({\mathscr{D}}({{\rm{RQNN}}}_{QG})\) via constraint (42) as

Then, let Ht be a unit vector for a unitary Ut(θt), t = 1, …, L − 1, defined as

where xt and yt are real values.

Then, let Zt+1 be defined via \(U(\overrightarrow{\theta })\) and (47) as

where E is a basis vector matrix60.

Then, rewriting \(U(\overrightarrow{\theta })\) as

where ϕ, φ are real parameters, allows us to evaluate \(U(\overrightarrow{\theta }){H}_{t}\) as

with

where Ht+1 is normalized at unity, and function \({f}_{\sigma }^{{{\rm{RQNN}}}_{QG}}(\cdot )\) is defined as

where |⋅|1 is the L1-norm.

Since the RQNNQG has a linear transition function, (52) is also linear, which allows us to rewrite (52) via the environmental graph representation for a particular (γΓ(v), xv), as

where fT(γΓ(v), xv) is given in (50).

Thus, by setting t = ν, the term Ht can be rewritten via (50) and (52) as

Then, the Yt(RQNNQG) output of RQNNQG is evaluated as

where W is an output matrix60.

Then, let |Γ(v)| = L. At a particular objective function f(θ) of the RQNNQG, the derivative \(\frac{df(\theta )}{d{x}_{\nu }}\) can be evaluated as

where

is a Jacobian matrix60. For the norms the relation

holds, where

The proof is concluded here.■
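As a rough numerical illustration of the Jacobian-norm argument above, the sketch below multiplies per-step Jacobians and inspects the spectral norm of the product. It is not the relation from the proof: it assumes each step is a plain linear map, ignores the normalizing function \({f}_{\sigma }^{{{\rm{RQNN}}}_{QG}}(\cdot )\), and uses random unitaries as stand-ins for the unitaries of the network. The names `random_unitary` and `chain_jacobian` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def random_unitary(n):
    """Unitary factor of the QR decomposition of a random complex matrix."""
    a = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
    q, _ = np.linalg.qr(a)
    return q

def chain_jacobian(jacobians):
    """Product of per-step Jacobians, with the latest step leftmost."""
    total = np.eye(jacobians[0].shape[0], dtype=complex)
    for j in jacobians:
        total = j @ total
    return total

# Twenty purely unitary steps (an assumption of this sketch).
steps = [random_unitary(4) for _ in range(20)]
spectral = np.linalg.norm(chain_jacobian(steps), 2)

# The same steps, each uniformly damped by a factor 0.9.
damped = [0.9 * u for u in steps]
spectral_damped = np.linalg.norm(chain_jacobian(damped), 2)

print(spectral, spectral_damped)
```

Under these assumptions the norm of the product stays at one for unitary steps, while any uniform contraction per step shrinks it geometrically with the number of steps.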

Optimal Learning

Gate-model quantum neural network

Theorem 3

A supervised learning is an optimal learning for a \({\mathscr{C}}({{\rm{QNN}}}_{QG})\).

Proof. Let πv be the compact constraint on fT(QNNQG) and FO(QNNQG) of \({\mathscr{C}}({{\rm{QNN}}}_{QG})\) from (38), and let A be a constraint matrix. Then, (38) can be reformulated as

where b(x) is a smooth vector-valued function with compact support48, \({f}^{\ast }: {\mathcal I} \to {{\mathbb{C}}}^{n}\),

is the compact function to be determined such that

The problem formulated via (60) can be rewritten as

It follows that the learning of functions fT(QNNQG) and FO(QNNQG) of \({\mathscr{C}}({{\rm{QNN}}}_{QG})\) can be reduced to the determination of function f*(x), a problem that is solvable via the Euler-Lagrange equations48,63,64.

Then, let \({{\mathscr{S}}}_{L({\rm{QNN}})}\) be a non-empty supervised learning set defined as a collection

where (xκ, yκ), yκ = f*(xκ) is a supervised pair, and \(|{\mathscr{X}}|\) is the cardinality of the perceptive space \({\mathscr{X}}\) associated with \({{\mathscr{S}}}_{L({\rm{QNN}})}\).

Since \({{\mathscr{S}}}_{L({\rm{QNN}})}\) is a non-empty set, f*(x) can be evaluated by the Euler-Lagrange equations48,63,64, as

where AT is the transpose of the constraint matrix A, and \(\ell \) is a differential operator as

where the cκ are constants, \({\nabla }^{2}\) is a Laplacian operator such that \({\nabla }^{2}f(x)={\sum }_{i}{\partial }_{i}^{2}f(x)\); while Υ is as

where \({\mathscr{G}}(\cdot )\) is the Green function of differential operator \(\ell \). Since function \({\mathscr{G}}(\,\cdot \,)\) is translation invariant, the relation

follows. Since the constraint that has to be satisfied over the perceptual space \({\mathscr{X}}\) is given in (62), the \( {\mathcal L} \) Lagrangian can be defined as

where 〈⋅,⋅〉 is the inner product operator, while P is defined via (66) as

where \({P}^{\dagger }\) is the adjoint of P, while λ(x) is the Lagrange multiplier as

where

and \(\ell b\) is as

Then, (65) can be rewritten using (71) and (73) as

where H(x) is as

and Φ is as

where In is an identity matrix.

Therefore, after some calculations, f*(x) can be expressed as

where χκ is as

The compact constraint of \({\mathscr{C}}({{\rm{QNN}}}_{QG})\) determined via (77) is optimal, since (77) is the optimal solution of the Euler–Lagrange equations.

The proof is concluded here.■
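To make the structure of the solution more concrete, the sketch below fits an expansion of the form f*(x) = Σκ χκ 𝒢(x − xκ) to a small supervised set. It is a simplified illustration under stated assumptions, not the construction of the proof: the function is scalar-valued, the constraint matrix A is dropped, and a Gaussian is used as a stand-in for the translation-invariant Green function. The names `green`, `fit_coefficients`, and `f_star` are hypothetical.

```python
import numpy as np

def green(r, width=0.5):
    """Stand-in translation-invariant Green function (Gaussian; an assumption)."""
    return np.exp(-(r ** 2) / (2.0 * width ** 2))

def fit_coefficients(x_train, y_train):
    """Solve for chi so that the expansion matches every supervised pair."""
    gram = green(x_train[:, None] - x_train[None, :])
    return np.linalg.solve(gram, y_train)

def f_star(x, x_train, chi):
    """Evaluate sum_k chi_k * G(x - x_k) at one point or at an array of points."""
    return green(np.asarray(x)[..., None] - x_train) @ chi

# Toy supervised pairs (x_k, y_k); the target function is an assumption.
x_train = np.array([-1.0, -0.3, 0.4, 1.2])
y_train = np.sin(x_train)

chi = fit_coefficients(x_train, y_train)
print(f_star(x_train, x_train, chi))
```

The coefficients are fixed entirely by the supervised pairs, which mirrors the role of the non-empty supervised set in the argument above.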

Lemma 1

There exists a supervised learning for a \({\mathscr{C}}({{\rm{QNN}}}_{QG})\) with complexity \({\mathscr{O}}(|S|)\), where |S| is the number of arcs (number of gate parameters) of \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\).

Proof. Let \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) be the environmental graph of QNNQG, such that QNNQG is characterized via \(\overrightarrow{\theta }\) (see (3)).

The optimal supervised learning method of a \({\mathscr{C}}({{\rm{QNN}}}_{QG})\) is derived through the utilization of the \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) environmental graph of QNNQG, as follows.

The \({{\mathscr{A}}}_{{\mathscr{C}}({{\rm{QNN}}}_{QG})}\) learning process of \({\mathscr{C}}({{\rm{QNN}}}_{QG})\) in the \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) structure is given in Algorithm 1.

The optimality of Algorithm 1 arises from the fact that in Step 4, the gradient computation involves all the gate parameters of the QNNQG, and the gate parameter updating procedure has a computational complexity \({\mathscr{O}}(|S|)\). This complexity follows from the gate parameter updating mechanism, which utilizes backpropagated classical side information for the learning method.

The proof is concluded here.■

Description and method validation

The detailed steps and validation of Algorithm 1 are as follows.

In Step 1, the number R of measurement rounds is set.

Step 2 is the quantum evolution phase of QNNQG that yields an output quantum system \(|Y\rangle \) via forward propagation of quantum information through the unitary sequence \(U(\overrightarrow{\theta })\) realized via the L unitaries. Then, a parameterization follows for each \({x}_{{U}_{i}({\theta }_{i})}\), and the terms \({W}_{{U}_{i}({\theta }_{i})}\) and \({Q}_{{U}_{i}({\theta }_{i})}\) are defined to characterize the θi angles of the Ui(θi) unitary operations in the QNNQG.
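The forward propagation of Step 2 can be sketched for a single qubit as follows. The sketch assumes that every gate has the form exp(−iθX) with the Pauli-X operator as generator; this gate form, and the names `gate` and `forward`, are illustrative choices and are not taken from Algorithm 1.

```python
import numpy as np

# Pauli-X generator; using X for every gate is an assumption of this sketch.
PAULI_X = np.array([[0, 1], [1, 0]], dtype=complex)

def gate(theta, generator=PAULI_X):
    """Return exp(-i * theta * generator) for a generator squaring to identity."""
    identity = np.eye(generator.shape[0], dtype=complex)
    return np.cos(theta) * identity - 1j * np.sin(theta) * generator

def forward(thetas, state):
    """Apply U_1 first and U_L last, so the overall map is U_L ... U_1."""
    for theta in thetas:
        state = gate(theta) @ state
    return state

thetas = [0.3, -1.1, 0.7]              # gate parameters theta_1, ..., theta_L
x0 = np.array([1, 0], dtype=complex)   # computational basis state |0>
y = forward(thetas, x0)                # output system |Y>
print(y)
```

Because all gates in this toy example share one generator, they commute and the angles simply add; with distinct generators the order of application matters.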

In Step 3, side information initializations are made for the error computations. A given \({W}_{{U}_{i}({\theta }_{i})}\) is set as a cumulative quantity with respect to the parent set Ξ(i) of unitary Ui(θi) in QNNQG.

Note that (80) and (81) represent side information; thus the gate parameter θhi is used to identify a particular unitary U(θhi).

Let \({{\mathscr{G}}^{\prime} }_{{{\rm{QNN}}}_{QG}}\) be the environmental graph of QNNQG such that the directions of quantum links are reversed. It can be verified that for a \({{\mathscr{G}}^{\prime} }_{{{\rm{QNN}}}_{QG}}\), \({\delta }_{{U}_{i}({\theta }_{i})}\) from (82) can be rewritten as

and \({\delta }_{{U}_{L}({\theta }_{L})}\) can be evaluated as given in (83)

while the term \({\delta }_{{U}_{i}({\theta }_{i})}{W}_{{U}_{j}({\theta }_{j})}\) for each Ui(θi) can be rewritten as

Since (85) and (86) are defined via the non-reversed \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\), for a given unitary the Γ children set is used. The utilization of the Ξ parent set with reversed link directions in \({{\mathscr{G}}^{\prime} }_{{{\rm{QNN}}}_{QG}}\) (see (89), (90), (91)) is therefore analogous to the use of the Γ children set with non-reversed link directions in \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\). This is because classical side information is available in arbitrary directions in \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\).

First, we consider the case i = 1, …, L − 1, in which the error calculations are associated with unitaries U1(θ1), …, UL−1(θL−1); the output unitary UL(θL) is treated in the i = L case.

In \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\), the error quantity \({\delta }_{{U}_{i}({\theta }_{i})}\) associated with Ui(θi) is determined, where \({W}_{{U}_{L}({\theta }_{L})}\) is associated with the output unitary UL(θL). Only forward steps are required to yield \({W}_{{U}_{L}({\theta }_{L})}\) and \({Q}_{{U}_{L}({\theta }_{L})}\). Then, utilizing the chain rule and using the children set Γ(i) of a particular unitary Ui(θi), the term \(d{W}_{{U}_{L}({\theta }_{L})}/d{Q}_{{U}_{i}({\theta }_{i})}\) in \({\delta }_{{U}_{i}({\theta }_{i})}\) can be rewritten as \(\frac{d{W}_{{U}_{L}({\theta }_{L})}}{d{Q}_{{U}_{i}({\theta }_{i})}}={\sum }_{h\in {\rm{\Gamma }}(i)}\frac{d{W}_{{U}_{L}({\theta }_{L})}}{d{Q}_{{U}_{h}({\theta }_{h})}}\frac{d{Q}_{{U}_{h}({\theta }_{h})}}{d{W}_{{U}_{i}({\theta }_{i})}}\frac{d{W}_{{U}_{i}({\theta }_{i})}}{d{Q}_{{U}_{i}({\theta }_{i})}}\). In fact, this term equals \({Q}_{{U}_{i}({\theta }_{i})}{\sum }_{h\in {\rm{\Gamma }}(i)}{\theta }_{hi}{\delta }_{{U}_{h}({\theta }_{h})}\), where \({\delta }_{{U}_{h}({\theta }_{h})}\) is the error associated with a Uh(θh), such that Uh(θh) is a child unitary of Ui(θi). The \({\delta }_{{U}_{h}({\theta }_{h})}\) error quantity associated with a child unitary Uh(θh) of Ui(θi) can be determined in the same manner, which yields \({\delta }_{{U}_{h}({\theta }_{h})}=d{W}_{{U}_{L}({\theta }_{L})}/d{Q}_{{U}_{h}({\theta }_{h})}\). It follows that utilizing side information in \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\) allows us to determine \({\delta }_{{U}_{i}({\theta }_{i})}\) via the \( {\mathcal L} (\,\cdot \,)\) loss function and the Γ(i) children set of unitary Ui(θi), which yields the quantity given in (82).

The situation differs if the error computations are made with respect to the output system, that is, for the L-th unitary UL(θL). In this case, the utilization of the loss function \( {\mathcal L} ({x}_{0},\tilde{l}(z))\) allows us to use the simplified formula \({\delta }_{{U}_{L}({\theta }_{L})}=d {\mathcal L} ({x}_{0},\tilde{l}(z))/d{Q}_{{U}_{L}({\theta }_{L})}\), as given in (83). Taking the \(\frac{d {\mathcal L} ({x}_{0},\tilde{l}(z))}{d{\theta }_{ij}}\) derivative of the loss function \( {\mathcal L} ({x}_{0},\tilde{l}(z))\) with respect to the angle θij yields \(\frac{d {\mathcal L} ({x}_{0},\tilde{l}(z))}{d{Q}_{{U}_{i}({\theta }_{i})}}\frac{d{Q}_{{U}_{i}({\theta }_{i})}}{d{\theta }_{ij}}\), which, in fact, equals \({\delta }_{{U}_{i}({\theta }_{i})}{W}_{{U}_{j}({\theta }_{j})}\).
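The chain-rule argument above has the same shape as classical backpropagation on a directed acyclic graph, which the following sketch makes explicit. It is a purely classical toy model of the side-information flow under stated assumptions: each non-source node i forms q_i = Σ_{j∈Ξ(i)} θ_ij w_j over its parents and outputs w_i = tanh(q_i), and the loss is a squared error at the last node. The tanh activation, the squared-error loss, the example graph, and all function names are hypothetical and do not reproduce (80)–(87).

```python
import math

def forward(order, parents, theta, x_in):
    """Forward pass of side information along the arcs, in topological order."""
    w = {}
    for i in order:
        if not parents[i]:
            w[i] = x_in[i]                       # source node: reads the input
        else:
            q_i = sum(theta[(i, j)] * w[j] for j in parents[i])
            w[i] = math.tanh(q_i)                # toy activation (assumption)
    return w

def loss(order, parents, theta, x_in, target):
    """Squared error at the output node (assumption)."""
    w = forward(order, parents, theta, x_in)
    return 0.5 * (w[order[-1]] - target) ** 2

def backward(order, parents, theta, w, target):
    """Backward pass: every arc is touched a constant number of times."""
    children = {i: [] for i in order}
    for i in order:
        for j in parents[i]:
            children[j].append(i)
    out = order[-1]
    delta = {out: (w[out] - target) * (1.0 - w[out] ** 2)}
    for i in reversed(order[:-1]):
        if parents[i]:                           # source nodes have no incoming arc
            back = sum(theta[(h, i)] * delta[h] for h in children[i])
            delta[i] = (1.0 - w[i] ** 2) * back
    # Gradient for arc (i, j): error of node i times forward quantity of parent j.
    return {(i, j): delta[i] * w[j] for (i, j) in theta}

order = [1, 2, 3, 4]
parents = {1: [], 2: [1], 3: [1, 2], 4: [2, 3]}
theta = {(2, 1): 0.5, (3, 1): -0.4, (3, 2): 0.8, (4, 2): 0.3, (4, 3): -0.7}
x_in = {1: 0.9}
target = 0.2

w = forward(order, parents, theta, x_in)
grads = backward(order, parents, theta, w, target)
print(grads)
```

Each error term is assembled from the errors of the children, and each gradient is a product of one error term and one forward quantity, so the work grows linearly with the number of arcs in this toy setting.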

In Step 4, the quantities defined in the previous steps are utilized in the QNNQG for the error calculations. The errors are evaluated and updated in a backpropagated manner from unitary UL(θL) to U1(θ1). Since this requires only side information, these steps can be achieved via a \({\rm{P}}({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}})\) post-processing (along with Step 3). First, a gate parameter modification vector \(\overrightarrow{{\rm{\Delta }}}\theta \) is defined, such that its i-th element, \(\overrightarrow{{\rm{\Delta }}}{\theta }_{i}\), is associated with the modification of the θi gate parameter of an i-th unitary Ui(θi).

The i-th element \(\overrightarrow{{\rm{\Delta }}}{\theta }_{i}\) is initialized as \(\overrightarrow{{\rm{\Delta }}}{\theta }_{i}={W}_{{U}_{i}({\theta }_{i})}\). If \(\overrightarrow{{\rm{\Delta }}}{\theta }_{i}\) equals 1, then no modification is required in the θi gate parameter of Ui(θi). In this case, the \({\delta }_{{U}_{i}({\theta }_{i})}\) error quantity of Ui(θi) can be determined via a simple summation, using the children set of Ui(θi), as \({\delta }_{{U}_{i}({\theta }_{i})}={\sum }_{j\in {\rm{\Gamma }}(i)}{\theta ^{\prime} }_{ij}{\delta }_{{U}_{j}({\theta }_{j})}\), where Uj(θj) is a child of Ui(θi), as given in (85). On the other hand, if \(\overrightarrow{{\rm{\Delta }}}{\theta }_{i}\ne 1\), then the θi gate parameter of Ui(θi) requires a modification. In this case, the summation \({\sum }_{j\in \Gamma (i)}{\theta }_{ij}{\delta }_{{U}_{j}({\theta }_{j})}\) has to be weighted by the actual \(\overrightarrow{{\rm{\Delta }}}{\theta }_{i}\) to yield \({\delta }_{{U}_{i}({\theta }_{i})}\). This case is given in (86).

According to the update mechanism of (84–86), for z = L − 1, …, 1, the errors are updated via (88) as follows. At z = L and \(\overrightarrow{{\rm{\Delta }}}{\theta }_{z}=1\), \({\delta }_{{U}_{z}({\theta }_{z})}\) is as

while at \(\overrightarrow{{\rm{\Delta }}}{\theta }_{z}\ne 1\), \({\delta }_{{U}_{z}({\theta }_{z})}\) is updated as

For z = L − 1, …, 1, if \(\overrightarrow{{\rm{\Delta }}}{\theta }_{z}=1\), then \({\delta }_{{U}_{z}({\theta }_{z})}\) is as

while, if \(\overrightarrow{{\rm{\Delta }}}{\theta }_{z}\ne 1\), then

In Step 5, for a given unitary Ui(θi), i = 2, …, L and for its parent Uj(θj), the \({g}_{{U}_{i}({\theta }_{i}),{U}_{j}({\theta }_{j})}\) gradient is computed via the \({\delta }_{{U}_{i}({\theta }_{i})}\) error quantity derived from (85–86) for Ui(θi), and by the \({W}_{{U}_{j}({\theta }_{j})}\) quantity associated to parent Uj(θj). (For U1(θ1) the parent set Ξ(1) is empty, thus i > 1.) The computation of \({g}_{{U}_{i}({\theta }_{i}),{U}_{j}({\theta }_{j})}\) is performed for all Uj(θj) parents of Ui(θi), thus (87) is determined for ∀j, j ∈ Ξ(i). By the chain rule,

Since for i = L, \({\delta }_{{U}_{L}({\theta }_{L})}\) is as given in (83), the gradient can be rewritten via (91) as

Finally, Step 6 utilizes the number R of measurements to extend the results for all measurement rounds, r = 1, …, R. Note that in each round a measurement operator is applied; for simplicity, it is omitted from the description.

Since the algorithm requires no reversed quantum links, i.e. \({{\mathscr{G}}^{\prime} }_{{{\rm{QNN}}}_{QG}}\) for the computations of (85–86), the gradient of the loss in (87) with respect to the gate parameter can be determined in an optimal way for QNNQG networks, by the utilization of side information in \({{\mathscr{G}}}_{{{\rm{QNN}}}_{QG}}\).

The steps and quantities of the learning procedure (Algorithm 1) of a QNNQG are illustrated in Fig. 3. The QNNQG network realizes the unitary \(U(\overrightarrow{\theta })\). The quantum information is propagated through quantum links (solid lines) between the unitaries, while the auxiliary classical information is propagated via classical links in the network (dashed lines). An i-th node is represented via unitary Ui(θi).

The learning method for a QNNQG. The QNNQG network realizes unitary \(U(\overrightarrow{\theta })\) as a sequence of L unitaries, \(U(\overrightarrow{\theta })={U}_{L}({\theta }_{L}){U}_{L-1}({\theta }_{L-1})\ldots {U}_{1}({\theta }_{1})\). The algorithm determines the gradient of the loss with respect to the θ gate parameter, at a particular loss function \( {\mathcal L} ({x}_{0},\tilde{l}(z))\). All quantum information propagates forward via quantum links (solid lines), while classical side information can propagate arbitrarily (dashed lines).

For an i-th unitary, Ui(θi), parameters \({W}_{{U}_{i}({\theta }_{i})}\), \({Q}_{{U}_{i}({\theta }_{i})}\) and \({\delta }_{{U}_{i}({\theta }_{i})}=d{W}_{{U}_{L}({\theta }_{L})}/d{Q}_{{U}_{i}({\theta }_{i})}\) for i < L, are computed, where \({W}_{{U}_{L}({\theta }_{L})}={\sum }_{j\in \Xi (L)}{\theta }_{Lj}{V}_{{U}_{j}({\theta }_{j})}\). For the output unitary, \({\delta }_{{U}_{L}({\theta }_{L})}=d {\mathcal L} ({x}_{0},\tilde{l}(z))/d{Q}_{{U}_{L}({\theta }_{L})}\). Parameters \({W}_{{U}_{i}({\theta }_{i})}\) and \({Q}_{{U}_{i}({\theta }_{i})}\) are determined via forward propagation of side information, the \({\delta }_{{U}_{i}({\theta }_{i})}\) quantities are evaluated via backward propagation of side information. Finally, the gradients, \({g}_{{U}_{i}({\theta }_{i}),{U}_{j}({\theta }_{j})}={\delta }_{{U}_{i}({\theta }_{i})}{W}_{{U}_{j}({\theta }_{j})},\) are computed.

Recurrent gate-model quantum neural network

In classical neural networks, backpropagation59,60,61 (backward propagation of errors) is a supervised learning method that allows the gradients to be determined in order to learn the weights in the network. In this section, we show that for a recurrent gate-model QNN, a backpropagation method is optimal.

Theorem 4

A backpropagation in \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) is an optimal learning in the sense of gradient descent.

Proof. In an RQNNQG, the backward classical links provide feedback side information for the forward propagation of quantum information in multiple measurement rounds. The backpropagated side information is analogous to feedback loops, i.e., to recurrent cycles over time. The aim of the learning method is to optimize the gate parameters of the unitaries of the RQNNQG quantum network via supervised learning, using the side information available from the previous k = 1, …, r − 1 measurement rounds at a particular measurement round r.

Let \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) be the environmental graph of RQNNQG, and fT(RQNNQG) be the transition function of an RQNNQG. Then the γv constraint is defined via \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) as

while the constraint Ωv on the output F(γv, xv) of RQNNQG is defined via ωv = 0 as48,61,62

Utilizing the structure of the \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) environmental graph allows us to define a modified version of the backpropagation through time algorithm59 to the RQNNQG.

The learning of \({\mathscr{D}}({{\rm{RQNN}}}_{QG})\) with constraints (42), (43), and (44) is given in Algorithm 2, depicted as \({{\mathscr{A}}}_{{\mathscr{D}}({{\rm{RQNN}}}_{QG})}\).

As a corollary, the training of \({\mathscr{D}}({{\rm{RQNN}}}_{QG})\) can be reduced to a backpropagation method via the environmental graph of RQNNQG.■

Description and method validation

The detailed steps and validation of Algorithm 2 are as follows.

In Step 1, the number R of measurement rounds is set for RQNNQG. For each measurement round, the initialization steps (100, 101) are performed.

Step 2 provides the quantum evolution phase of RQNNQG, and produces output quantum system \(|{Y}_{r}\rangle \) (102) via forward propagation of quantum information through the unitary sequence \(U({\overrightarrow{\theta }}_{r})\) of the L unitaries.

Step 3 initializes the P(r)(RQNNQG) post-processing method via the definition of (105) for gradient computations. In (106), the quantity \({{\rm{\Phi }}}_{r}={z}^{(r)}+U({\overrightarrow{\theta }}_{r-1})+{B}_{r}\) connects the side information of the r-th measurement round with the side information of the (r − 1)-th measurement round, where \(U({\overrightarrow{\theta }}_{r-1})\) is the unitary sequence of the (r − 1)-th round and Br is a bias of the current measurement round. The quantity ξr,k = dΦr/dΦk in (107) utilizes the Φi quantities (see (106)) of the i-th measurement rounds, such that i = k + 1, …, r, where k < r.
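The dependence of ξr,k on the intermediate rounds i = k + 1, …, r can be written out with the chain rule. The factorization below is a sketch that assumes each Φi depends on the earlier rounds only through Φi−1; it is not a reproduction of (107).

```latex
\xi_{r,k} \;=\; \frac{d\Phi_r}{d\Phi_k}
\;=\; \prod_{i=k+1}^{r} \frac{d\Phi_i}{d\Phi_{i-1}}, \qquad k < r,
\qquad \text{e.g.} \quad
\xi_{3,1} \;=\; \frac{d\Phi_3}{d\Phi_2}\,\frac{d\Phi_2}{d\Phi_1}.
```

Under this assumption, one factor is contributed by each measurement round between k and r.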

Step 4 determines the gr loss function gradient of the r-th measurement round. In (108), the gr gradient is determined as \({\sum }_{k=1}^{r}\frac{d {\mathcal L} ({x}_{0,r},\tilde{l}({z}^{(r)}))}{d{{\rm{\Phi }}}_{r}}\frac{d{{\rm{\Phi }}}_{r}}{d{{\rm{\Phi }}}_{k}}\frac{d{\tilde{{\rm{\Phi }}}}_{k}}{d{\mathscr{S}}({\overrightarrow{\theta }}_{r})}\), that is, via the utilization of the side information of the k = 1, …, r measurement rounds at a particular r.

In Step 5, the gate parameters are updated via the gradient descent rule59 by utilizing the gradients of the k = 1, …, r measurement rounds at a particular r. Since in (111) all the gate parameters of the L unitaries are updated by ωr as given in (112), for a particular unitary Ui(θr,i), the gate parameter is updated via \({\overrightarrow{\alpha }}_{r}\) (114) to θr+1,i as

Finally, Step 6 outputs the G final gradient of the total R measurement rounds in (116), as a summation of the gr gradients (108) determined in the r = 1, …, R rounds.

The steps of the learning method of an RQNNQG (Algorithm 2) are illustrated in Fig. 4. The \({\overrightarrow{\theta }}_{r}\) gate parameters of the unitaries of unitary sequence \(U({\overrightarrow{\theta }}_{r})\) are set as \({\overrightarrow{\theta }}_{r}={\overrightarrow{\theta }}_{r-1}-{\omega }_{r-1},\) where \({\overrightarrow{\theta }}_{r-1}\) is the gate parameter vector associated with sequence \(U({\overrightarrow{\theta }}_{r-1})\), while αr−1,i = ωr−1 is the gate parameter modification coefficient, and \({\omega }_{r-1}=\frac{\lambda }{r-1}{\sum }_{k=1}^{r-1}\frac{d {\mathcal L} ({x}_{0,k},\tilde{l}({z}^{(k)}))}{d{\mathscr{S}}({\overrightarrow{\theta }}_{k})}\).

The learning method for an RQNNQG. In an r-th measurement round, the RQNNQG network realizes the unitary sequence \(U({\overrightarrow{\theta }}_{r})\), and side information is available about the previous k = 1, …, r − 1 running sequences of the structure. Quantum information propagates only forward in the network via quantum links (solid lines), while the αr−1,i = ωr−1 quantities are distributed via backpropagation of side information through the classical links (dashed lines). The θr,i gate parameter of the i-th unitary Ui(θr,i) of \(U({\overrightarrow{\theta }}_{r})\) is set to θr,i = θr−1,i − αr−1,i, where θr−1,i is the gate parameter of the i-th unitary Ui(θr−1,i) of the \(U({\overrightarrow{\theta }}_{r-1})\) unitary sequence.
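The update rule described for Fig. 4, in which the parameters of round r are obtained by subtracting a λ-scaled average of the gradients of rounds 1, …, r − 1, can be sketched as follows. The quadratic toy loss, the choice of λ, and the names `loss_grad` and `train` are assumptions of this sketch; the quantum evolution and the measurement are not modeled.

```python
import numpy as np

def loss_grad(theta, target):
    """Gradient of the toy loss 0.5 * ||theta - target||^2 (an assumption)."""
    return theta - target

def train(theta_1, target, rounds, lam):
    """Return the gate parameter vectors of rounds 1, ..., rounds."""
    thetas = [np.asarray(theta_1, dtype=float)]
    grads = []
    for r in range(2, rounds + 1):
        grads.append(loss_grad(thetas[-1], target))      # gradient of round r - 1
        omega = lam / (r - 1) * np.sum(grads, axis=0)    # scaled average over rounds 1..r-1
        thetas.append(thetas[-1] - omega)                # theta_r = theta_{r-1} - omega_{r-1}
    return thetas

history = train([2.0, -1.0], target=np.zeros(2), rounds=4, lam=0.5)
for r, theta_r in enumerate(history, start=1):
    print(r, theta_r)
```

Because the correction in round r averages over all earlier rounds, old gradients keep influencing later updates, which is the recurrent aspect that the side information of previous running sequences provides in this toy setting.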

Closed-form error evaluation

Lemma 2

The δ quantity of the unitaries of a \({\mathscr{D}}({{\rm{RQNN}}}_{QG})\) can be expressed in a closed form via the \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) environmental graph of RQNNQG.

Proof. Let \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) be the environmental graph of RQNNQG, such that RQNNQG is characterized via \(\overrightarrow{\theta }\) (see (3)). Utilizing the structure \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) of RQNNQG allows us to express the square error in a closed form as follows.

Let Y and Z refer to output realizations \(|Y\rangle \) and \(|Z\rangle \) of RQNNQG, \(Y,Z\in {\mathscr{Y}}\), with an output set \({\mathscr{Y}}\), and let \( {\mathcal L} ({x}_{0},\tilde{l}(z))\) be the loss function. Then let \({{\bf{H}}}_{{{\rm{RQNN}}}_{QG}}\) be a Hessian matrix48 of the RQNNQG structure, with a generic coordinate \({\hslash }_{ij,lm}^{{{\rm{RQNN}}}_{QG}}\), as

where \({W}_{{U}_{i}({\theta }_{i})}\) is given in (81), fi∠m(⋅) is a topological ordering function on \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\), indices Y and Q are associated with the output realizations \(|Y\rangle \) and \(|Q\rangle \), while \({({\delta }_{{U}_{l}({\theta }_{l}),{U}_{i}({\theta }_{i})}^{Q})}^{2}\) is the square error between unitaries Ul(θl) and Ui(θi) at a particular output \(|Q\rangle \) as

where \({Q}_{{U}_{i}({\theta }_{i})}\) is as in (80). Note that the relation \({({\delta }_{{U}_{l}({\theta }_{l}),{U}_{i}({\theta }_{i})}^{Q})}^{2}\ne 0\) in (119) holds only if there is an edge sil between \({v}_{{U}_{i}({\theta }_{i})}\in V\) and \({v}_{{U}_{l}({\theta }_{l})}\in V\) in the environmental graph \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) of RQNNQG. Thus,

Since \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\) contains all information for the computation of (119) and \({\mathscr{D}}({{\rm{RQNN}}}_{QG})\) is defined through the structure of \({{\mathscr{G}}}_{{{\rm{RQNN}}}_{QG}}\), the proof is concluded here.■

Conclusions

Gate-model QNNs allow an experimental implementation on near-term gate-model quantum computer architectures. Here we examined the problem of learning optimization of gate-model QNNs. We defined the constraint-based computational models of these quantum networks and proved the optimal learning methods. We revealed that the computational models are different for nonrecurrent and recurrent gate-model quantum networks. We proved that for nonrecurrent and recurrent gate-model QNNs, the optimal learning is a supervised learning. We showed that for a recurrent gate-model QNN, the learning can be reduced to backpropagation. The results are particularly useful for the training of QNNs on near-term quantum computers.

References

Preskill, J. Quantum Computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Harrow, A. W. & Montanaro, A. Quantum Computational Supremacy. Nature 549, 203–209 (2017).

Aaronson, S. & Chen, L. Complexity-theoretic foundations of quantum supremacy experiments. Proceedings of the 32nd Computational Complexity Conference, CCC ’17, 22:1–22:67, (2017).

Biamonte, J. et al. Quantum Machine Learning. Nature 549, 195–202 (2017).

LeCun, Y., Bengio, Y. & Hinton, G. Deep Learning. Nature 521, 436–444 (2015).

Goodfellow, I., Bengio, Y. & Courville, A. Deep Learning. MIT Press. Cambridge, MA (2016).

Debnath, S. et al. Demonstration of a small programmable quantum computer with atomic qubits. Nature 536, 63–66 (2016).

Monz, T. et al. Realization of a scalable Shor algorithm. Science 351, 1068–1070 (2016).

Barends, R. et al. Superconducting quantum circuits at the surface code threshold for fault tolerance. Nature 508, 500–503 (2014).

Kielpinski, D., Monroe, C. & Wineland, D. J. Architecture for a large-scale ion-trap quantum computer. Nature 417, 709–711 (2002).

Ofek, N. et al. Extending the lifetime of a quantum bit with error correction in superconducting circuits. Nature 536, 441–445 (2016).

IBM. A new way of thinking: The IBM quantum experience. URL, http://www.research.ibm.com/quantum (2017).

Brandao, F. G. S. L., Broughton, M., Farhi, E., Gutmann, S. & Neven, H. For Fixed Control Parameters the Quantum Approximate Optimization Algorithm’s Objective Function Value Concentrates for Typical Instances. arXiv 1812, 04170 (2018).

Farhi, E. & Neven, H. Classification with Quantum Neural Networks on Near Term Processors. arXiv 1802, 06002v1 (2018).

Farhi, E., Goldstone, J., Gutmann, S. & Neven, H. Quantum Algorithms for Fixed Qubit Architectures. arXiv 1703, 06199v1 (2017).

Farhi, E., Goldstone, J. & Gutmann, S. A Quantum Approximate Optimization Algorithm. arXiv 1411, 4028 (2014).

Farhi, E., Goldstone, J. & Gutmann, S. A Quantum Approximate Optimization Algorithm Applied to a Bounded Occurrence Constraint Problem. arXiv 1412, 6062 (2014).

Lloyd, S. The Universe as Quantum Computer, A Computable Universe: Understanding and exploring Nature as computation, H. Zenil ed., World Scientific, Singapore, 2012, arXiv:1312.4455v1 (2013).

Lloyd, S., Mohseni, M. & Rebentrost, P. Quantum algorithms for supervised and unsupervised machine learning. arXiv 1307, 0411v2 (2013).

Lloyd, S., Mohseni, M. & Rebentrost, P. Quantum principal component analysis. Nature Physics 10, 631 (2014).

Rebentrost, P., Mohseni, M. & Lloyd, S. Quantum Support Vector Machine for Big Data Classification. Phys. Rev. Lett. 113 (2014).

Lloyd, S., Garnerone, S. & Zanardi, P. Quantum algorithms for topological and geometric analysis of data. Nat. Commun. 7, arXiv:1408. 3106 (2016).

Schuld, M., Sinayskiy, I. & Petruccione, F. An introduction to quantum machine learning. Contemporary Physics 56, pp. 172–185. arXiv: 1409.3097 (2015).

Imre, S. & Gyongyosi, L. Advanced Quantum Communications - An Engineering Approach. Wiley-IEEE Press (New Jersey, USA) (2012).

Dorozhinsky, V. I. & Pavlovsky, O. V. Artificial Quantum Neural Network: quantum neurons, logical elements and tests of convolutional nets, arXiv:1806.09664 (2018).

Torrontegui, E. & Garcia-Ripoll, J. J. Universal quantum perceptron as efficient unitary approximators, arXiv:1801.00934 (2018).

Lloyd, S. et al. Infrastructure for the quantum Internet. ACM SIGCOMM Computer Communication Review 34, 9–20 (2004).

Gyongyosi, L., Imre, S. & Nguyen, H. V. A Survey on Quantum Channel Capacities. IEEE Communications Surveys and Tutorials 99, 1, https://doi.org/10.1109/COMST.2017.2786748 (2018).

Van Meter, R. Quantum Networking, John Wiley and Sons Ltd, ISBN 1118648927, 9781118648926 (2014).

Gyongyosi, L. & Imre, S. Multilayer Optimization for the Quantum Internet. Scientific Reports, Nature, https://doi.org/10.1038/s41598-018-30957-x, (2018).

Gyongyosi, L. & Imre, S. Entanglement Availability Differentiation Service for the Quantum Internet. Scientific Reports, Nature, https://doi.org/10.1038/s41598-018-28801-3, https://www.nature.com/articles/s41598-018-28801-3 (2018).

Gyongyosi, L. & Imre, S. Entanglement-Gradient Routing for Quantum Networks. Scientific Reports, Nature, https://doi.org/10.1038/s41598-017-14394-w, https://www.nature.com/articles/s41598-017-14394-w, (2017).

Gyongyosi, L. & Imre, S. Decentralized Base-Graph Routing for the Quantum Internet, Physical Review A, American Physical Society, https://doi.org/10.1103/PhysRevA.98.022310, https://link.aps.org/doi/10.1103/PhysRevA.98.022310 (2018).

Pirandola, S., Laurenza, R., Ottaviani, C. & Banchi, L. Fundamental limits of repeaterless quantum communications, Nature Communications, 15043, https://doi.org/10.1038/ncomms15043 (2017).

Pirandola, S. et al. Theory of channel simulation and bounds for private communication. Quantum Sci. Technol. 3, 035009 (2018).

Laurenza, R. & Pirandola, S. General bounds for sender-receiver capacities in multipoint quantum communications. Phys. Rev. A 96, 032318 (2017).

Pirandola, S. Capacities of repeater-assisted quantum communications. arXiv 1601, 00966 (2016).

Pirandola, S. End-to-end capacities of a quantum communication network. Commun. Phys. 2, 51 (2019).

Cacciapuoti, A. S. et al. Quantum Internet: Networking Challenges in Distributed Quantum Computing. arXiv 1810, 08421 (2018).

Shor, P. W. Scheme for reducing decoherence in quantum computer memory. Phys. Rev. A 52, R2493–R2496 (1995).

Petz, D. Quantum Information Theory and Quantum Statistics, Springer-Verlag, Heidelberg, Hiv: 6. (2008).

Bacsardi, L. On the Way to Quantum-Based Satellite Communication. IEEE Comm. Mag. 51(08), 50–55 (2013).

Gyongyosi, L. & Imre, S. A Survey on Quantum Computing Technology, Computer Science Review, Elsevier, https://doi.org/10.1016/j.cosrev.2018.11.002, ISSN: 1574-0137 (2018).

Wiebe, N., Kapoor, A. & Svore, K. M. Quantum Deep Learning. arXiv 1412, 3489 (2015).

Wan, K. H. et al. Quantum generalisation of feedforward neural networks. npj Quantum Information 3, 36 arXiv 1612, 01045 (2017).

Cao, Y., Giacomo Guerreschi, G. & Aspuru-Guzik, A. Quantum Neuron: an elementary building block for machine learning on quantum computers. arXiv: 1711.11240 (2017).

Lloyd, S. & Weedbrook, C. Quantum generative adversarial learning. Phys. Rev. Lett., 121, arXiv 1804, 09139 (2018).

Gori, M. Machine Learning: A Constraint-Based Approach, ISBN: 978-0-08-100659-7, Elsevier (2018).

Hyland, S. L. & Ratsch, G. Learning Unitary Operators with Help From u(n). arXiv 1607, 04903 (2016).

Dunjko, V. et al. Super-polynomial and exponential improvements for quantum-enhanced reinforcement learning. arXiv: 1710.11160 (2017).

Romero, J. et al. Strategies for quantum computing molecular energies using the unitary coupled cluster ansatz. arXiv: 1701.02691 (2017).

Riste, D. et al. Demonstration of quantum advantage in machine learning. arXiv 1512, 06069 (2015).

Yoo, S. et al. A quantum speedup in machine learning: finding an N-bit Boolean function for a classification. New Journal of Physics 16(10), 103014 (2014).

Farhi, E. & Harrow, A. W. Quantum Supremacy through the Quantum Approximate Optimization Algorithm. arXiv 1602, 07674 (2016).

Crooks, G. E. Performance of the Quantum Approximate Optimization Algorithm on the Maximum Cut Problem. arXiv 1811, 08419 (2018).

Gyongyosi, L. & Imre, S. Dense Quantum Measurement Theory. Scientific Reports, Nature, https://doi.org/10.1038/s41598-019-43250-2 (2019).

Farhi, E., Kimmel, S. & Temme, K. A Quantum Version of Schoning’s Algorithm Applied to Quantum 2-SAT. arXiv 1603, 06985 (2016).

Schoning, T. A probabilistic algorithm for k-SAT and constraint satisfaction problems. Foundations of Computer Science, 1999. 40th Annual Symposium on, pages 410–414. IEEE (1999).

Salehinejad, H., Sankar, S., Barfett, J., Colak, E. & Valaee, S. Recent Advances in Recurrent Neural Networks. arXiv 1801, 01078v3 (2018).

Arjovsky, M., Shah, A. & Bengio, Y. Unitary Evolution Recurrent Neural Networks. arXiv: 1511.06464 (2015).

Goller, C. & Küchler, A. Learning task-dependent distributed representations by backpropagation through structure. Proc. of the ICNN-96, pp. 347–352, Bochum, Germany, IEEE (1996).

Baldan, P., Corradini, A. & Konig, B. Unfolding Graph Transformation Systems: Theory and Applications to Verification, In: Degano P., De Nicola R., Meseguer J. (eds) Concurrency, Graphs and Models. Lecture Notes in Computer Science, vol 5065. Springer, Berlin, Heidelberg (2008).

Roubicek, T. Calculus of variations. Mathematical Tools for Physicists. (Ed. Grinfeld, M.) J. Wiley, Weinheim, ISBN 978-3-527-41188-7, pp. 551–588 (2014).

Binmore, K. & Davies, J. Calculus Concepts and Methods. Cambridge University Press. p. 190. ISBN 978-0-521-77541-0. OCLC 717598615. (2007).

Acknowledgements

The research reported in this paper has been supported by the National Research, Development and Innovation Fund (TUDFO/51757/2019-ITM, Thematic Excellence Program). This work was partially supported by the National Research Development and Innovation Office of Hungary (Project No. 2017-1.2.1-NKP-2017-00001), by the Hungarian Scientific Research Fund - OTKA K-112125 and in part by the BME Artificial Intelligence FIKP grant of EMMI (BME FIKP-MI/SC).

Author information

Contributions

L.GY. designed the protocol and wrote the manuscript. L.GY. and S.I. analyzed the results. All authors reviewed the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gyongyosi, L., Imre, S. Training Optimization for Gate-Model Quantum Neural Networks. Sci Rep 9, 12679 (2019). https://doi.org/10.1038/s41598-019-48892-w

DOI: https://doi.org/10.1038/s41598-019-48892-w
