Abstract

The brain is possibly the most complex system known to mankind, and its complexity has been called upon to explain the emergence of consciousness. However, complexity has been defined in many ways by multiple different fields: here, we investigate measures of algorithmic and process complexity in both the temporal and topological domains, testing them on functional MRI BOLD signal data obtained from individuals undergoing various levels of sedation with the anaesthetic agent propofol, replicating our results in two separate datasets. We demonstrate that the various measures are differently able to discriminate between levels of sedation, with temporal measures showing higher sensitivity. Further, we show that all measures are strongly related to a single underlying construct explaining most of the variance, as assessed by Principal Component Analysis, which we interpret as a measure of “overall complexity” of our data. This overall complexity was also able to discriminate between levels of sedation and serum concentrations of propofol, supporting the hypothesis that consciousness is related to complexity - independent of how the latter is measured.

Similar content being viewed by others

Introduction

The science of complex systems has gained increasing prominence in the 21st century. It combines the reductionist ideal of science, with the notion of emergence, whereby high-level phenomena can result from the interactions of simple constituent parts, confirming Aristotle’s saying that “the whole is more than the sum of its parts”1. However, complexity science is also a discipline still in its infancy. In particular, due to its appealing and apparently intuitive nature, the notion of complexity has remained relatively ill-defined. The interdisciplinary nature of this science has resulted in different fields applying the term complexity to multiple quantities, variously measured. Complexity is perhaps best understood as the negation of simplicity. A system exhibits complex behaviour when it is not uniform, stereotyped, or predictable. However, there is a key assumption that this is not sufficient: complexity must emerge from the underlying orderly interactions of a system’s components, about which its behaviour must provide information – in other words, its unpredictability must be more than mere randomness, but rather the result of interesting behaviours emerging. Thus, a complex system lies between complete order – such as the perfectly predictable regularity of a crystal – and complete disorder, as exhibited for instance by the random motion of molecules of a gas. Historically, complex, self-organizing systems have been separated from both “simple” systems, and systems that display “disorganized complexity”2.

Complexity can be identified in more than one dimension of the same system, too. It may be due to the structure of the interactions between components, such as the connections in a social or biological network. Or it may only become apparent over time, as when it is applied to signals and temporal patterns. Furthermore, there are different ways in which something can be said to be complex, reflected in the different ways that have been developed to estimate complexity. On the one hand, methods from algorithmic information theory such as Shannon entropy and Lempel-Ziv compressibility3,4 emphasise unpredictability as the key property for complexity. One downside of such approach, however, is that they would treat a purely random sequence as maximally complex. Alternatively, methods from the physics of dynamical systems focus on the aspect of interactions in the process – whether between the system’s elements (e.g. synchronisability5), between its present and past states (e.g. Hurst exponent6), or between different scales7. In this work, we aim to explore the relation between algorithmic and process measures of complexity, in both the topological and temporal dimensions.

We choose to test these measures on a paradigmatic complex system: the human brain. Not only is the brain the source of humans’ widely diverse range of behaviours and accomplishments, which is itself suggestive of a highly complex underlying organisation; its structure is also that of a complex network of sub-networks, in turn made of multiple kinds of neurons obeying nontrivial plasticity rules for their interactions. For these reasons, it has been proposed that the brain’s complexity may explain another unique property it possesses: consciousness. Recent scientific theories of consciousness have emphasised, in one way or another, the brain’s complexity as a crucial requirement for consciousness8,9,10,11. Anaesthetic drugs such as the GABA-ergic agonist propofol provide a way to control and reversibly modulate the brain’s state of consciousness. Its complexity, in various aspects, may then be assessed based on signals from noninvasive neuroimaging techniques. Previous research using electrophysiological imaging methods such as EEG has found that the complexity of brain activity changes with alteration of consciousness, decreasing under propofol sedation12,13,14, increasing under the influence of psychedelic drugs like LSD or ketamine15, and decreasing during sleep16 or in patients with disorders of consciousness17. Nonlinear analysis of BOLD signals from functional MRI is a less explored area, largely because BOLD timeseries tend to comprise a limited number of timepoints and have a much more restricted frequency domain; however, there is fast-growing interest in this kind of analysis, owing to recent studies suggesting that this may be a rich field to explore18,19,20. Moreover, functional MRI (fMRI) has the advantage of providing high spatial resolution, thus allowing for estimation of the brain’s network properties in greater detail than afforded by other methods such as EEG.

Here, we chose to evaluate whole-brain measures of algorithmic and process complexity applied to the temporal and topological (network) dimensions, derived from fMRI blood-oxygen-level-dependent (BOLD) signals of volunteers undergoing sedation with propofol. Our aim was twofold: first, to investigate the relationship between the different measures of complexity as applied to human fMRI data, with the hypothesis that results should be consistent across measures; secondly, we sought to provide a comprehensive investigation of the hypothesis that whole-brain complexity of the human brain measured from fMRI BOLD data is reduced when consciousness is lost as a result of anaesthetic-induced unconsciousness. We also replicated our results in an independent dataset of propofol anaesthesia, in order to demonstrate their robustness.

Results

Temporal Algorithmic Complexity

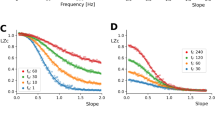

Lempel-Ziv Compressibility

The first measure of algorithmic complexity we used was normalised Lempel-Ziv compressibility14,16 of BOLD signals. We found significant differences between conditions in both Datasets A and B. In Dataset A, Kruskal-Wallis Analysis of Variance found significant differences between all three conditions (H(10.57), p = 0.005), and post-hoc analysis with Wilcoxon Signed-Rank test found significant differences between the Awake and Mild conditions (W(21), p = 0.05), Awake and Moderate conditions (W(9), p = 0.006), and Mild and Moderate conditions (W(4), p = 0.002). In Dataset B, the Wilcoxon test found significant difference between the Awake and Deep conditions (H(19), p = 0.011). In both datasets, the Awake condition had the highest complexity, and as the depth of sedation increased, the associated LZC decreased. In Dataset A, the transition from Awake to Moderate showed \(\Delta =-\,0.029\pm 0.027\), and in Dataset B, the transition from Awake to Deep showed Δ = −0.029 ± 0.039. We note that these two results are remarkably similar, although this is likely a coincidence. For full results from Dataset A, see Table 1, and for Dataset B, Table 2. In the propofol sedation conditions of Dataset A (Mild and Moderate), we found significant negative correlations between LZC and serum concentrations of propofol (r = −0.55, p = 0.002).

These results are consistent with previous findings that Lempel-Ziv compressibility of spontaneous brain activity is discriminative of level of consciousness in humans14,16 and animals21.

Of all the time-series measures described, the LZC algorithm described here is distinct in that it communicates information about the spatial complexity as well as the temporal complexity. This is because, unlike other measures like Sample Entropy or Higuchi Fractal Dimension which are calculated on 234 individual time-series and then averaged, LZC is calculated on an entire dataset, which has been flattened column-wise, as was done in14,15,16, by “stacking” each column on top of the next, resulting in a one-dimensional vector where the first 234 elements are the first column, the second 234 elements are the second column, etc. This means that the vector V (see Methods section) can be divided into 234 segments where every entry corresponds to the coarse activation of a distinct brain region at the same time. The result is that each entry in the dictionary D created by the Lempel-Ziv algorithm corresponds, not to a series of samples from a single ROI, but rather a distribution of cortical regions that are “on” or “off.”

Sample Entropy

We found significant decreases in the Sample Entropy of BOLD signals under anaesthesia in both Datasets A and B. In Dataset A, Kruskal-Wallis Analysis of Variance found a significant difference between all three conditions (H(12.94), p = 0.002) and post-hoc analysis with the Wilcoxon Signed-Rank test found significant differences between the Awake and Moderate conditions (W(6), p = 0.004), and Mild versus Moderate conditions (W(8), p = 0.005), but not the Awake versus Mild conditions. In Dataset B there was a significant difference between the Awake and Deep conditions (W(21), p = 0.015). As with the LZC analysis, the Awake condition had the highest Sample Entropy in both Datasets A and B, with the mean value decreasing with increasing sedation. In Dataset A, we observed \(\Delta =0.036\pm 0.035\) from Awake to Moderate, and in Dataset B we observed \(\Delta =-\,0.023\pm 0.031\). In the Mild and Moderate conditions of Dataset A, we found a significant negative correlation between serum concentration of propofol and Sample Entropy of BOLD signals (r = −0.53, p = 0.003).

These results are consistent with both the LZC results reported above and the findings of Ferenets et al., (2007), who found that Sample Entropy decreased with increasing sedation in much the same way that LZC does.

PCA of BOLD Signals

As with LZC, the PCA-based measure of BOLD signal complexity returns a measure of how compressible the set of data are as a proxy for complexity, by identifying the number of components required to explain a fixed proportion of the variance in the data. A larger number of components to explain the same amount of variance would indicate less compressibility of the data. Thus, we hypothesised that as level of sedation increased, so would the compressibility of BOLD signals, as measured by the number of components required to explain 95% of the variance. In Dataset A, Kruskal-Wallis Analysis of Variance found significant differences between all three conditions (H(8.13), p = 0.017), and post-hoc testing found significant differences between all three sets of conditions: Awake vs. Mild (W(9), p = 0.03), Awake vs. Moderate (W(6), p = 0.016), and Mild vs. Moderate (W(11), p = 0.048). In Dataset B, we found a significant difference between Awake and Deep (W(4), p = 0.002). As before, there was a consistent pattern of increasing mean compressibility (and a consequent decreasing number of required components) as sedation increased. In Dataset A, \(\Delta =-\,2.214\pm 2.73\) from Awake to Moderate, and in Dataset B, \(\Delta =-\,5.188\pm 4.68\). Here, the Δ is negative because the number of components decreased between the Awake and sedated conditions, and is non-integer because it is the average over all subjects in the datasets. Of all the measures of BOLD signal compressibility, this was the only measure that did not significantly correlate with serum propofol concentration in the Mild and Moderate conditions in Dataset A.

As with LZC and the SampEn, these results indicate that as propofol-induced sedation increases, the algorithmic complexity of BOLD signals decreases. All measures of complexity discussed so far support each other, despite being a variety of linear and non-linear algorithms.

Temporal Process Complexity

Hurst Exponent

The Hurst Exponent was the only measure that we hypothesised would increase as consciousness was lost, rather than decrease, since as a signal becomes more predictable, its Hurst Exponent tends towards unity6. In both Datasets A and B we found significant differences between conditions. In Dataset A, Kruskal-Wallis Analysis of Variance found an omnibus difference (H(9.11), p = 0.01), and post-hoc testing found significant differences between Awake and Mild (W(16), p = 0.022), Awake and Moderate (W(8), p = 0.005), and Mild and Moderate (W(17), p = 0.026). In Dataset B, we found significant differences between the Awake and Deep conditions (W(26), p = 0.02). Unlike the previous two metrics, and as we expected, we found a relative increase in the Hurst Exponent as sedation increased: in Dataset A, we found \(\Delta =0.014\pm 0.014\) from Awake to Moderate sedation, and in Dataset B we found \(\Delta =0.01\pm 0.016\) from Awake to Deep sedation. In Dataset A, we found a significant correlation between serum concentration of propofol and Hurst Exponent in the Mild and Moderate sedation conditions (r = 0.393, p = 0.039).

This is consistent with our initial hypothesis that as sedation increased and consciousness was lost, the BOLD signals would become more predictable, as measured by an increasing Hurst Exponent.

Higuchi Fractal Dimension

The Higuchi Fractal Dimension was one of the least sensitive measures of BOLD signal complexity sampled here. In Dataset A, Kruskal-Wallis Analysis of Variance found a significant difference between all three conditions (H(8.27), p = 0.016), and post-hoc analysis found significant differences between the Awake and Moderate conditions (W(15), p = 0.019) and the Mild and Moderate conditions (W(17), p = 0.026), but not the Awake and Mild conditions. In Dataset B we found a significant difference between the Awake and Deep conditions (W(23), p = 0.02). As with LZC and Sample Entropy, the Awake condition had the highest mean fractal dimension in both samples, which went down as sedation increased: in Dataset A \(\Delta =-\,0.024\pm 0.032\) from Awake to Moderate and in Dataset B, \(\Delta =-\,0.018\pm 0.027\) from Awake to Deep. Surprisingly, the Higuchi Fractal dimension showed a very strong negative correlation with serum propofol concentration in the Mild and Moderate conditions of Dataset A (r = −0.614, p = 0.0005).

The finding that Higuchi Fractal dimension was relatively less able to discriminate between level of consciousness than LZC or Sample Entropy but more predictive of serum propofol concentration is interesting. While it is hard to come up with a definitive interpretation, it may suggest that there is some variable factor in individuals that makes their level of consciousness more or less resistant to the changes in brain activity (as measured by Higuchi Fractal Dimension) induced by propofol, or that the plasma concentration data offer more resolution, extending beyond the three artificially imposed bins of Awake, Mild and Moderate sedation.

Topological Algorithmic Complexity

Graph Lempel-Ziv Compressibility

The final metric we tested, and the second measure of topological complexity, was the compressibility of functional connectivity adjacency matrices using the Lempel-Ziv algorithm. This was the weakest of all the measures explored: the only significant difference was in Dataset A, between the Awake and Moderate conditions (W(11), p = 0.009), although in Dataset B there was a similar trend that approached, but did not reach significance (W(32), p = 0.06). The general trend of Awake having the highest value which decreased under increasing sedation was conserved (although note the large standard deviations): in Dataset A \(\Delta =-\,2885.71\pm 4937.34\) and in Dataset B \(\Delta =-\,4923.44\pm 8967.29\). There was no significant correlation between graph compressibility and serum propofol concentrations in Dataset A.

While this is clearly the weakest result, in the context of the others, we still find its success at discriminating between the Awake and Moderate conditions of Dataset A intriguing, and suspect that in a larger set of data it may have more discriminative power. The relationship between consciousness and network compressibility may not be as direct as when performing analysis such as LZC on BOLD signals, but these results suggest this is an area worth exploring.

Topological Process Complexity

Algebraic Connectivity

Our first of two measures of functional network complexity is algebraic connectivity, which returns information about the robustness of the network to removal of elements22. In Dataset A, Kruskal-Wallis analysis found a significant difference in algebraic connectivity between all three conditions (H(9.654), p = 0.008). Post-hoc analysis found significant differences between the Awake and Moderate conditions (W(12), p = 0.011) and the Mild and Moderate conditions (W(15), p = 0.019), but not the Awake versus Mild conditions. In Dataset B, we found a significant difference between the Awake and Deep conditions (W(23), p = 0.02). As before, in Datasets A and B the Awake condition had the highest mean algebraic connectivity, with mean values dropping as sedation increased. In Dataset A, \(\Delta =-\,107.67\pm 116.98\) from Awake to Moderate, while in Dataset B, −\(906.09\pm 1235.07\). Despite the ability of algebraic connectivity to discriminate between conditions, there was no significant correlation with serum propofol concentration in the Mild and Moderate conditions of Dataset A.

These results suggest that, while graph theoretical measures may be predictive of level of consciousness in propofol anaesthesia, algebraic connectivity in particular seems to lack the discriminative power of direct analysis on BOLD signals. Nevertheless, these results are promising as they show that the topological complexity of functional brain networks can communicate information relevant to the level of consciousness of an individual.

Higher Order Analysis of Overall Complexity

Every metric, when correlated against every other metric, showed a highly significant correlation (see Fig. 1), all of which were significant with the sole exception of the correlation between the number of PCA components required to explain the majority of the variance and the Hurst exponent in Dataset A. We had hypothesised that, if the different kinds of complexity explored here (algorithmic and process-based, in both the temporal and topological dimensions) all were ways to quantify an underlying construct of “overall complexity”, then there should be a single component that explains the majority of the variance of the results. In Dataset A, we found that the principal component explained 67.07% of the variance in the set of results and in Dataset B the principal component explained 71.05% of the variance of the results. In both datasets, this component correlated extremely highly with each metric: in Dataset A it correlated most highly with LZC (r = −0.947, p ≤ 1 × 10−5), followed by Sample Entropy (r = −0.929, p ≤ 1 × 10−5). In Dataset B, these two were also the most highly correlated with the principal component, although the order was flipped, with Sample Entropy having the highest correlation (r = −0.95, p ≤ 1 × 10−5), followed by LZC (r = −0.932, p ≤ 1 × 10−5). When broken down by condition, in both Datasets, the principal component was able to discriminate between states of consciousness: in Dataset A the Kruskal-Wallis test found a significant difference between all three conditions (H(12.048, p = 0.002), and post-hoc testing found significant differences between the Awake and Moderate conditions (W(8), p = 0.005), and the Mild and Moderate conditions (W(3), p = 0.001) but not the Awake and Mild conditions. In Dataset B we found a significant difference between the Awake and Deep conditions (W(8), p = 0.002). In the Mild and Moderate conditions of Dataset A, the principal component significantly correlated with serum concentrations of propofol (r = 0.531, p = 0.004) (see Fig. 2). Thus, the principal component derived from multiple specific measures of complexity can be related to states of consciousness in the human brain, and may be identified with the “overall complexity” of the dataset. For visualisation of these results, see Fig. 3.

The correlation matrices between all the different metrics for Datasets A and B. All entries along the diagonal have been removed. There are some typical patterns: the graph measures (LZ_Graph and Algebraic Connectivity are both generally more highly correlated, as are LZC, SampEn and Hurst). With the exception of a single correlation between the PCA Number and the Hurst Exponent in Dataset A. The p-values ranged over many orders of magnitude from 10−2 to 10−20.

There was a significant correlation between the first component and serum concentration of propofol, with patients in the Mild condition (r = 0.53, p-value = 0.004) clustering together with low concentrations, and increasing, with larger variances, as the propofol concentration climbs. As in Fig. 3 below, the incongruous increase in the values of the component does not reflect a relative increase in complexity in this case, but is an artefact of the PCA algorithm used to derive the principal component. No Awake volunteers were included in this analysis, as all would have had a blood propofol concentration of exactly zero.

Here are the differences in the first principal component generated from all the measures from Datasets A and B. Interestingly, in Dataset A, there was no significant difference between the Awake and Mild condition, while there were differences between both of those states and the Moderate condition. While this may be a reflection of lack of sensitivity, it is worth noting that, between the Awake and Mild conditions, consciousness was not actually lost: volunteers experienced conscious sedation, while the difference in level of consciousness between the Awake and and Moderate conditions was much more dramatic. In Dataset B, where consciousness was fully lost in the Deep condition, a significant difference appeared. Note that, despite the measures of complexity generally dropping as consciousness was lost (with the notable exception of the Hurst exponent analysis), the PCA analysis returned a Hurst-like pattern, with the values in the component increasing as consciousness is lost. This does not indicate an increase in complexity in any sense, but rather, is an artefact of how the dimensionality reduction transforms values. To ensure that this was not being driven by the Hurst exponent in any way, we ran the analysis after multiplying each Hurst exponent by −1 (so that the value decreased with loss of consciousness), and found no difference in the result.

Discussion

In the present work, we have investigated measures of complexity from algorithmic information theory and the physics of dynamical systems, as they apply to the temporal and topological (network) dimensions of functional MRI brain data from individuals under different levels of propofol sedation. Two main insights can be derived from our results. The first is that, at least in the context of the human brain, different measures purporting to quantify “complexity” are indeed related to some underlying common construct, regardless of the dimension along which they measure complexity, or the aspect of complexity that they measure. This provides much-needed validation to the idea that a dataset - and the system from which it derives - can be considered complex tout court, rather than just being complex in a specific dimension, and according to a specific way of assessing complexity. We term this the “overall complexity” of the system or dataset. In turn, this suggests that it is appropriate to use the term “complexity” for the various specific measures, because there does seem to exist a common underlying property of the data that they tap into. In particular, we have demonstrated that the complexity of the human brain activity, as inferred from fMRI BOLD signals, is modulated by one’s state of consciousness - supporting previous results from macaque electrocorticography data23. This was observed both with the individual measures - validating and extending previous results - and, most importantly, with the underlying construct of overall complexity, which demonstrates its validity as a construct. The latter is also reinforced by the fact that we were able to replicate this finding with a separate dataset.

Secondly, it is important to observe that different complexity measures, though correlated to each other and related to the same underlying construct of overall complexity, are nevertheless sensitive to different aspects of the data. In particular, measures operating along the temporal dimension appeared especially sensitive at discriminating between levels of sedation; conversely, topological measures failed to discriminate between Awake and Mild conditions in Dataset A, and also did not correlate with propofol serum levels. This suggests that the temporal dimension of the human brain’s complexity, as derived from BOLD signal timeseries (despite their limited temporal resolution compared to EEG), may be especially vulnerable to loss of consciousness, at least as it is induced by the GABA-ergic agent propofol. Further work may seek to identify whether this effect is uniform across cortical regions, or whether specific areas’ timeseries are more largely affected by propofol than others. This represents a novel insight regarding the ways in which anaesthetic drugs such as propofol intervene on the brain to cause unconsciousness. Additionally, it would be worth exploring whether this observation of different sensitivity of temporal and topological measures of complexity is drug-specific, or if instead it is a generalisable feature of how the brain loses consciousness. Thus, one future direction of research is to apply these same metrics to states of consciousness induced by different anaesthetic agents, whose molecular mechanisms of action can vary widely. Disorders of consciousness (DOC) due to severe brain injury may also represent a crucial future step for research: unlike anaesthetics, DOC involve changes in the physical structure of the brain, which is bound to impact the topology of brain networks. Investigating how this impacts the relation between different measures and dimensions of complexity will provide further understanding into the relation between complexity and consciousness in the brain. Additionally, as MRI is already a routine part of care for DOC patients, algorithms such as those explored here might be helpful in determining the presence or absence of consciousness in ambiguous states such as minimally conscious state.

Importantly, our results also show that, despite the relative temporal paucity of information in BOLD signals, these signals carry sufficient information to discriminate between states of consciousness. While preliminary, these findings suggest that the process complexity of individual BOLD signals is at least partially re-encoded as topological complexity when forming functional connectivity networks. One possible avenue of future work is to explore the parameters under which this conservation of complexity is maximised (different similarity functions, different thresholding procedures, etc), in order to increase the sensitivity of these measures. Crucially, even higher discriminative power may be achieved by applying the same analyses to measures with higher temporal information, such as EEG or ECoG23, which may then improve anaesthetists’ ability to detect unwanted residual consciousness in patients, thereby avoiding the rare but extremely distressing condition known as intraoperative awareness24.

Nevertheless, our work also presents a number of limitations, and these should be borne in mind when evaluating the present results. We do not claim to have exhaustively searched all measures of algorithmic or process complexity: as already evidenced, there are a vast number of measures to choose from (and many measures themselves have multiple implementations, eg. Lempel-Ziv compressibility features in multiple compression algorithms including Lempel-Ziv-Welch compressibility25 and LZ77/826, as well as being a component of the perturbational complexity index17). Other measures of algorithmic complexity might include permutation entropy27 or Shannon entropy28,29. Alternative measures of process complexity might be multiscale sample entropy30 or Lyapunov exponent31. When selecting which measures to include in this analysis, we included several criteria we hoped our measures would satisfy: the first is that they had been used in previous electrophysiological or fMRI studies of consciousness. The second criteria was that they should be relatively accessible theoretically and computationally. Finally, they shouldn’t require excessively long time-series and thus be amenable to BOLD signals (which tend to be \(\ll \)500 samples, excluding some measures like multiscale entropy).

Furthermore, as already mentioned the temporal information available in the BOLD signal is limited, and it is also not a direct measure of neural activity. More generally, the optimal way to construct brain graphs from BOLD signal data is an area of active investigation; although the approach we have taken here is among the most common in the literature32, alternative methods exist for defining nodes (for instance by using different parcellations33, or components derived from Independent Components Analysis) and for defining edges, such as by using partial correlation34, wavelet coherence35, or normalised mutual information instead of Pearson correlation36,37. In particular, our analysis pipeline involved removing the negative correlations between brain regions, before the network analysis. Removing negative correlations is the most commonly adopted approach in network neuroscience32 especially since their inclusion has been found to decrease reproducibility of brain network properties38,39. However, the importance of negative correlations in the brain has been demonstrated for both waking cognition40 and consciousness, with reductions in their prevalence observed during anaesthesia and other states of unconsciousness41,42,43; thus, ignoring them may have different effects on conscious versus unconscious brain networks, which could explain the reduced sensitivity of topological measures. In future work, it would be worthwhile to explore other methods of constructing functional connectivity networks that do not return negative edges at all such as wavelet coherence35 or normalised mutual information36,37, or the soft thresholding approach proposed by Schwarz and McGonigle44, thus avoiding the problem entirely.

Finally, in Dataset A the state of consciousness was determined based on the estimated propofol concentration, rather than behaviour, so that different individuals’ susceptibility to the drug may have led to different levels of sedation, despite the same level of propofol. However, this concern is mitigated by the replication of our results in Dataset B, where sedation was deeper and it was assessed behaviourally, so that all individuals met the same criteria. Finally, the measures of complexity explored here are but a subset of those that have been proposed over the years in the literature. Future research could benefit from expanding this repertoire, for instance including estimates of Phi, a measure of integrated information derived from neural complexity11, which has been proposed to quantify a system’s consciousness10,45.

Conclusion

We have investigated measures of algorithmic and process complexity of fMRI BOLD signal in both the temporal and topological dimensions, at various levels of consciousness induced by propofol sedation. Our results demonstrate that complexity measures are differently able to discriminate between levels of sedation, with temporal measures showing higher sensitivity. Additionally, all measures were strongly correlated, and most of the variance could be explained by a single underlying construct, which may be interpreted as a more general quantification of complexity, and which also proved capable of discriminating between levels of sedation, demonstrating a relation between consciousness and “complexity”, broadly defined, with a clear biological grounding given by the relation to propofol serum concentration. The finding that complexity measures formalized in very different ways are similarly useful suggests a deeper relationship between dynamical and algorithmic complexity, and the capacity of the human brain to support consciousness. Finally, these results provide strong evidence that many non-linear time-series analysis techniques that have previously been restricted to M/EEG imaging modalities can also be successfully applied to detect alterations of complexity in BOLD signals, expanding the repertoire of available fMRI image and time-series analyses.

Methods

Data Acquisition and Preprocessing

Ethics Statements

All data were collected in accordance with the relevant guidelines and under the oversight of the relevant ethical bodies. The specifics for ethical approvals are detailed below.

Dataset A

Ethical approval for these studies was obtained from the Cambridgeshire 2 Regional Ethics Committee, and all subjects gave informed consent to participate in the study. Twenty-five healthy volunteer subjects were recruited for scanning. The acquisition procedures are described in detail by Stamatakis et al.46: MRI data were acquired on a Siemens Trio 3T scanner (WBIC, Cambridge). Each functional BOLD volume consisted of 32 interleaved, descending, oblique axial slices, 3 mm thick with interslice gap of 0.75 mm and in-plane resolution of 3 mm, field of view = 192 × 192 mm, repetition time = 2 s, acquisition time = 2 s, time echo = 30 ms, and flip angle 78. We also acquired T1-weighted structural images at 1 mm isotropic resolution in the sagittal plane, using an MPRAGE sequence with TR = 2250 ms, TI = 900 ms, TE = 2.99 ms and flip angle = 9 degrees, for localisation purposes. Of the 25 healthy subjects, 14 were ultimately retained: the rest were excluded, either because of missing scans (n = 2), or due of excessive motion in the scanner (n = 9, 5 mm maximum motion threshold).

Propofol Sedation

Propofol was administered intravenously as a “target controlled infusion” (plasma concentration mode), using an Alaris PK infusion pump (Carefusion, Basingstoke, UK). Three target plasma levels were used - no drug (baseline), 0.6 mg/ml (mild sedation) and 1.2 mg/ml (moderate sedation). A period of 10 min was allowed for equilibration of plasma and effect-site propofol concentrations. Blood samples were drawn towards the end of each titration period and before the plasma target was altered, to assess plasma propofol levels. In total, 6 blood samples were drawn during the study. The mean (SD) measured plasma propofol concentration was 304.8 (141.1) ng/ml during light sedation, 723.3 (320.5) ng/ml during moderate sedation and 275.8 (75.42) ng/ml during recovery. Mean (SD) total mass of propofol administered was 210.15 (33.17) mg, equivalent to 3.0 (0.47) mg/kg. The level of sedation was assessed verbally immediately before and after each of the scanning runs. The three conditions from this dataset are referred to as Awake, Mild and Moderate sedation respectively.

Two senior anesthetists were present during scanning sessions and observed the subjects throughout the study from the MRI control room and on a video link that showed the subject in the scanner. Electrocardiography and pulse oximetry were performed continuously, and measurements of heart rate, noninvasive blood pressure, and oxygen saturation were recorded at regular intervals.

Dataset B

These data were provided by the Brain and Mind Institute, Department of Psychology, The University of Western Ontario. All scans were collected at the Robarts Research Institute in London, Ontario (Canada) between May and November 2014. A total of 19 (18–40 years; 13 males) healthy, right- handed, native English speakers, with no history of neurological disorders were recruited. Each volunteer provided written informed consent, following relevant ethical guidelines, and received monetary compensation for their time. The Health Sciences Research Ethics Board and Psychology Research Ethics Board of Western University (Ontario, Canada) ethically approved this study. Due to equipment malfunction or physiological impediments to anaesthesia in the scanner, data from three participants (1 male) were excluded from analyses, leaving 16.

Scanning was performed using a 3 Tesla Siemens Tim Trio system with a 32-channel head coil, at the Robarts Research Institute in London, Ontario, Canada. Participants lay supine in the scanner. Function echo-planar images (EPI) were acquired (33 slices, voxel size: 3 × 3 × 3 mm; inter-slice gap of 25%, TR = 2000ms, TE = 30 ms, matrix size = 64 × 64, FA = 75 degrees). An anatomical volume was obtained using a T1-weighted 3D MPRAGE sequence (32 channel coil, voxel size: 1 × 1 × 1 mm, TA = 5 min, TE = 4.25 ms, matrix size = 240 × 256, FA = 9 degrees).

Propofol Sedation

Intravenous propofol was administered with a Baxter AS 50 (Singapore). The infusion pump was manually adjusted using step-wise increases to achieve desired levels of sedation of propofol (Ramsay level 5). Concentrations of intra-venous propofol were estimated using the TIVA Trainer (the European Society for Intravenous Aneaesthesia, eurosiva.eu) pharmacokinetic simulation program. If Ramsay level was lower than 5, the concentration was slowly increased by increments of 0.3 μg/ml with repeated assessments of responsiveness between increments to obtain a Ramsay score of 5. Ramsay level 5 was determined as being unresponsive to verbal commands and rousable only by physical stimulus. In contrast to Propofol Dataset A, the two conditions from this dataset are referred to by Awake and Deep sedation respectively, reflecting the substantial increase in sedation depth present in this dataset.

Image Pre-Processing

All of the collected images were preprocessed using the CONN functional connectivity toolbox47 (http://www.nitrc.org/projects/conn), using the default pre-processing pipeline, which includes realignment and unwarping (motion estimation and correction), slice-timing correction, outlier detection, structural coregistration and spatial normalisation using standard grey and white matter masks, normalisation to the Montreal Neurological Institute space (MNI), and finally spatial smoothing with a 6 mm full width at half-maximum (FWHM) Gaussian kernel.

Temporal preprocessing included nuisance regression using anatomical CompCor to remove noise attributable to white matter and CSF components from the BOLD signal, as well as subject-specific realignment parameters (three rotations and three translations) and their first-order temporal derivatives48. Linear detrending was also applied, as well as band-pass filtering in the default range of [0.008, 0.09] Hz40. For a more detailed discussion of the details of the CONN default preprocessing pipeline, see Whitefield-Gabrieli and Nieto-Castanon, 2012.

Complexity of BOLD Signals

To explore the space of different formalisations of complexity, we used algorithms from algorithmic information theory (Lempel-Ziv compressibility, sample entropy, and principal component analysis), as well as from dynamical systems physics (Higuchi fractal dimension, Hurst exponent). Before analysis, the BOLD time-series were transformed by applying the Hilbert transform. The absolute value of the transformed signal was then taken, to remove negative frequencies and ensure that all series were positive. The Hilbert transform was also used to maintain consistency with earlier studies exploring the complexity of brain activity as it relates to consciousness14,16.

Lempel-Ziv Complexity

The Lempel-Ziv algorithm is a computationally tractable method for quantifying the complexity of a data-series by calculating the number of distinct patterns present in the data. For sufficiently large datasets, it is a useful approximation of Kolmogorov complexity, which is famously uncomputable for most strings3. The method used here is described in Schartner et al., (2015). Briefly: for every ROI in our parcellated brain, a time-series \(F(t)\) is binarised according to the following procedure:

The resulting time-series are stacked into a binary matrix \(M(X,T)\), where every row corresponds to the time-series \({F}_{B}(t)\) for every ROI \(x\in X\) and every column is a time-point \(t\in T\). The matrix is then flattened orthogonally to T, resulting in a vector \(V\) of length \(X\times T\), on which the Lempel-Ziv analysis was performed.

The Lempel-Ziv algorithm creates a dictionary \(D\), which is the set of binary patterns that make up \(V\) and returns a value \(L{Z}_{C}\propto |D|\). For every time-series \({F}_{B}(t)\in X\), a random time-series was created, by shuffling all the entries in \(F(t)\). These were stacked into a binary matrix \({M}_{rand}\), with the same dimensions as \(M\), however containing only noise. This random matrix was flattened and its \(L{Z}_{C}\) value calculated. As the randomness of a string increases, \(L{Z}_{C}\to 1\), so this value was used to normalise the “true” value of \(L{C}_{C}\), which was divided by \(L{Z}_{{C}_{Rand}}\) to ensure all values were within a range \((0,1)\).

Sample Entropy

Sample Entropy (SampEn) quantifies how unpredictable a signal is4 by estimating the probability that similar sequences of observations in a timeseries will remain similar over time. To compute SampEn, each time-series \(X(t)\) of length N is divided into subsections \(S\) of length \(m\) and the Chebychev distance between two sections \({S}_{i},{S}_{j}\) is calculated. Two sections are “similar” if their distance is less than some tolerance \(r\). The procedure is repeated for sections of length \(m+1\). We then calculate the probability that, if two data sequences of length \(m\) have distance less than \(r\), then the same two sequences of length \(m+1\) also have distance less than \(r\).

where A is the number of chunks of length \(m+1\) that are similar (have Chebyshev distance less than \(r\)), and B is the number of chunks of length \(m\) that are similar. Low values of SampEn would indicate that the signal is highly stereotyped - with a perfectly predictable series, such as [1, 1, 1, …] having a SampEn of zero, and SampEn increasing as the series becomes more disordered.

SampEn depends on the choice of parameters \(m\) and \(r\). Here, we used \(m=2\) and \(r=0.3\times \sigma (X(t))\), where \(\sigma ()\) is the standard deviation function.

SampEn has been used to test the level of sedation induced by propofol and remifentanil in electrophysiological studies49, and been shown to be associated with the degree of sedation much like Lempel-Ziv complexity has.

Hurst Exponent

The Hurst Exponent returns an estimate of how predictable a time-series is by quantifying its ‘memory,’ or how dependent the value at time \(t\) is on the value at time \(t-1\)6. There are a number of algorithms for estimating the Hurst Exponent; here we report results calculated using a rescaled range approach. In it, a time-series \(X(t)\) of length \(n\) is segmented into non-overlapping sections of length \(n\), \({X}_{i}(t)\). For each segment, the cumulative departure from the signal mean is calculated:

where \(\bar{x}\) is the mean of \({X}_{i}(t)\). The rescaled range of deviations (R/S) is then defined as:

where \(\sigma ()\) is the standard deviation function. We then compute R/S for all \({X}_{i}(t)\) and average them, generating \((R(n)/S(n))\), which is the average scaled range for all the subsections of \(X(t)\) with length \(n\). We are left with a power relation, where:

where H is the Hurst exponent, and can be extracted by regression.

Higuchi Fractal Dimension

To calculate the temporal fractal dimension, we used the Higuchi method for calculating the self-similarity of a one-dimensional time-series7, an algorithm widely used in EEG and MEG analysis50. From each time-series \(X(t)\), we create a new time-series \(X{(t)}_{k}^{m}\), defined as follows:

where \(m=1,2,\ldots ,k\).

For each time-series \(X{(t)}_{k}^{m}\) in \({k}_{1},{k}_{2},\ldots {k}_{max}\), the length of that series, \({L}_{m}(k)\), is given by:

We then define the average length of the series \(\langle L(k)\rangle \), on the interval \([k,{L}_{m}(k)]\) as:

If our initial time-series \(X(t)\) has fractal character, then:

where D is our desired fractal dimension. The Higuchi algorithm requires a pre-defined kmax value as an input, along with the target time-series. This value is usually determined by sampling the results returned by different values of kmax and selecting a value based on the range of kmax where the fractal dimension is stable. For both datasets, we sampled over a range of powers of two \((2,\ldots ,128)\). Due to the comparably small size of BOLD time-series, the range of kmax values that our algorithm could process without returning an error was limited. We ultimately decided on \({k}_{max}=32\) for Dataset A and \({k}_{max}=64\) for the Dataset B.

PCA of BOLD Signals

Principal component analysis (PCA) is commonly used to compress data by finding the dimensions that encode the maximal variance in a high-dimensional dataset. Here, we use PCA in a matter similar to Lempel-Ziv complexity, to relate the complexity of sets of BOLD signals to their compressibility. The more algorithmically random the dataset, the more orthogonal dimensions are required to describe the dataset, which we took advantage of to attempt to quantify the complexity of our BOLD time-series data. We constructed a large array of un-binarised BOLD signals, \(M(X,T)\) to which we applied a standard feature scaler from Scikit-Learn51 to ensure all values had a mean of zero and unit variance, and then a PCA function, recording recorded how many dimensions were required to cumulatively describe 95% of the variance in the original dataset. We used this value as our measure of data complexity.

Complexity of Functional Connectivity Graphs

Networks are a common example of complex system, and perhaps none more so than the human brain, which can be considered as a network at multiple scales. A network, or graph, is represented mathematically as an object comprised of nodes (in this case, cortical regions) and the connections between them, or edges (in this case, functional connectivity given by statistical association of the regions’ BOLD time-series). Investigating how the complexity of brain functional networks is affected by the anaesthetic drug propofol is therefore a clear way of testing our hypothesis that loss of consciousness should reduce the brain’s level of complexity.

Formation of Functional Connectivity Networks

To construct brain functional connectivity networks, the preprocessed BOLD time-series data were extracted from each brain in CONN and the cerebral cortex was segmented into distinct ROIs, using the 234-ROI parcellation of the Lausanne atlas52. Each time-series \(F(t)\) was transformed by taking the norm of the Hilbert transform, to maintain consistency with the time-series analysis.

Every time-series \(H(t)\) was then correlated against every other time-series, using the Pearson Correlation, forming a matrix \(M\) such that:

The matrices were then filtered to remove self-loops, ensuring simple graphs, and all negative correlations were removed:

Finally, the matrices were binarised with a k% threshold, such that:

The results could then be treated as adjacency matrices defining functional connectivity graphs, where each row \({M}_{i}\) and column \({M}_{j}\) corresponds to an ROI in the initial cortical parcellation, and their connection being represented by the corresponding cell in the matrix. For each graph theoretical analysis, a range of percentage thresholds (k%) were tested to ensure that any observed effects were not an artefact of one particular threshold, and are consistent over different graph topologies.

Algebraic Connectivity

Algebraic connectivity (AC) is a measure of graph connectivity derived from spectral graph theory5, which gives an upper bound on the classical connectivity of a graph. As such, it is often used as a measure of how well-integrated a graph is and how robust it is to damage, in the sense of the number of connections that must be removed before it is rendered disconnected. Unlike classical connectivity, which must be calculated by computationally intensive brute-force methods, AC is quite easy to find for even quite large graphs. AC is also a measure of graph synchronisability and emerges from analysis of the Kuramoto model of coupled oscillators53. For a simple example, imagine placing identical metronomes at every vertex of a graph and allowing the vibrations to propagate along the edges. The synchronisability describes the limit behaviour of how long it will take all the metronomes to synchronise. Here we use AC as a proxy measure of synchronisability to capture the possible temporal dynamics of the brain networks modelled by our functional connectivity graphs.

The AC of a graph \(G\) is formally defined as the first non-zero eigenvalue of the Laplacian matrix \({L}_{G}\) associated with \(G\). \({L}_{G}\) is derived by subtracting the adjacency matrix \({A}_{G}\) from the degree matrix \({D}_{G}\):

As every row and column of \({L}_{G}\) sum to zero, and it is symmetric about the diagonal, the imaginary part of every eigenvalue in the spectrum of \({L}_{G}\) is zero, and if \(G\) is a fully-connected graph, then:

To ensure that we were capturing the full topology of the graph, we calculated \({\lambda }_{2}\) for each graph at multiple thresholds [10, 20, 30, … 90], creating a curve \(\Lambda =[{\lambda }_{{2}_{10}},{\lambda }_{{2}_{20}},{\lambda }_{{2}_{30}}\ldots {\lambda }_{{2}_{90}}]\). We then integrated \(\Lambda \) using the trapezoid method to arrive at our final value \(AC={\int }^{}\,\Lambda \,dx\).

Graph Compressibility

In contrast to AC, which we use to explore the limit behaviour of possible brain temporal dynamics, our measure of graph compressibility is purely algorithmic, and estimates the Kolmogorov complexity of a graph: that is, the size of a computer program necessary to fully recreate a given graph \(G\). To do this, we re-employ the Lempel-Ziv algorithm originally used to calculate the \(L{Z}_{C}\) score of BOLD signals. Here we use it to calculate a related measure, \(L{Z}_{G}\), which is the length of a dictionary required to describe the adjacency matrix \({A}_{G}\) of a given graph.

To calculate \(L{Z}_{G}\), we take a binary adjacency matrix and flatten it into a single vector \(V\), and then run the Lempel-Ziv algorithm on that vector. As a binary vector of length \(l\) can be used to perfectly reconstruct an adjacency matrix defining a graph with \(\sqrt{l}\) vertices (so long as \(l\) is a square number, of course), we take \(V\) to be equivalent to a program defining \(G\). As with AC, to ensure that we were capturing the full topology of \(G\), we calculated the Lempel-Ziv complexity of the binary \({A}_{G}\) at the same nine thresholds [10…90], and then defined \(L{Z}_{G}\) as the integral of the resulting curve of complexity values.

Higher-Order Measures

Once we had calculated individual measures of complexity, we tested how they related to each-other, and (for Dataset A) serum concentrations of propofol. We correlated each one against all others to construct a correlation matrix which describes, how different metrics cluster.

We also did a principal component analysis on the set of all results. We hypothesised that, despite variability in the effectiveness of the individual measures, there should be a single, underlying component reflecting a shared factor of “complexity”. We further hypothesised that this underlying factor should be predictive of both the level of consciousness, and (in Dataset A), of the individual serum concentration of propofol.

Statistical Analysis

All analysis was carried out using the Python 3.6 programming language in the Spyder IDE (https://github.com/spyder-ide/spyder), using the packages provided by the Anaconda distribution (https://www.anaconda.com/download). All packages were in the most up-to-date version. Packages used include SciKit-Learn51, NumPy54, SciPy, and NetworkX55. Summary statistics are reported as mean ± standard deviation. Unless otherwise specified, all the significance tests are non-parametric: given the small sample sizes and heterogeneous populations, normal distributions were not assumed. Wilcoxon Signed Rank test was used to compare drug conditions against their respective control conditions.

Data availability

The results of our analyses and the original MRI and fMRI images are available on request from author E.A.S. (email: eas46@cam.ac.uk).

References

Crutchfield, J. P. Between order and chaos. Nature Physics 8, 17 (2012).

Weaver, W. Science and complexity. American Scientist 36, 536–544 (1948).

Chaitin, G. J. On the Simplicity and Speed of Programs for Computing Infinite Sets of Natural Numbers. J. ACM 16, 407–422, https://doi.org/10.1145/321526.321530 (1969).

Richman, J. S. & Moorman, J. R. Physiological time-series analysis using approximate entropy and sample entropy. American Journal of Physiology. Heart and Circulatory Physiology 278, H2039–2049, https://doi.org/10.1152/ajpheart.2000.278.6.H2039 (2000).

Kocarev, L. & Vattay, G. Synchronization in Complex Networks. In Kocarev, L. & Vattay, G. (eds) Complex Dynamics in Communication Networks, Understanding Complex Systems, 309–328, https://doi.org/10.1007/10973509_13 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2005).

Hurst, H. E. Long-Term Storage Capacity of Reservoirs. Transactions of the American Society of Civil Engineers 116, 770–799, http://cedb.asce.org/CEDBsearch/record.jsp?dockey=0292165 (1951).

Higuchi, T. Approach to an irregular time series on the basis of the fractal theory. Physica D: Nonlinear Phenomena 31, 277–283, http://www.sciencedirect.com/science/article/pii/0167278988900814, https://doi.org/10.1016/0167-2789(88)90081-4 (1988).

Carhart-Harris, R. L. et al. The entropic brain: a theory of conscious states informed by neuroimaging research with psychedelic drugs. Frontiers in human neuroscience 8, 20 (2014).

Carhart-Harris, R. L. The entropic brain-revisited. Neuropharmacology (2018).

Tononi, G. An information integration theory of consciousness. BMC neuroscience 5, 42 (2004).

Tononi, G., Sporns, O. & Edelman, G. M. A measure for brain complexity: relating functional segregation and integration in the nervous system. Proceedings of the National Academy of Sciences 91, 5033–5037 (1994).

Zhang, X. S., Roy, R. J. & Jensen, E. W. EEG complexity as a measure of depth of anesthesia for patients. IEEE transactions on bio-medical engineering 48, 1424–1433, https://doi.org/10.1109/10.966601 (2001).

Liang, Z. et al. EEG entropy measures in anesthesia. Frontiers in Computational Neuroscience 9, 16, https://doi.org/10.3389/fncom.2015.00016 (2015).

Schartner, M. M. et al. Global and local complexity of intracranial EEG decreases during NREM sleep. Neuroscience of Consciousness 3, https://academic.oup.com/nc/article/3/1/niw022/2957408/Global-and-local-complexity-of-intracranial-EEG, https://doi.org/10.1093/nc/niw022 (2017).

Schartner, M. M., Carhart-Harris, R. L., Barrett, A. B., Seth, A. K. & Muthukumaraswamy, S. D. Increased spontaneous MEG signal diversity for psychoactive doses of ketamine, LSD and psilocybin. Scientific Reports 7, 46421, https://www.nature.com/articles/srep46421, https://doi.org/10.1038/srep46421 (2017).

Schartner, M. et al. Complexity of Multi-Dimensional Spontaneous EEG Decreases during Propofol Induced General Anaesthesia. PLoS One 10, e0133532, https://doi.org/10.1371/journal.pone.0133532 (2015).

Casali, A. G. et al. A Theoretically Based Index of Consciousness Independent of Sensory Processing and Behavior. Science Translational Medicine 5, 198ra105–198ra105, http://stm.sciencemag.org/content/5/198/198ra105, https://doi.org/10.1126/scitranslmed.3006294 (2013).

Dong, J. et al. Hurst Exponent Analysis of Resting-State fMRI Signal Complexity across the Adult Lifespan. Frontiers in Neuroscience 12, https://www.frontiersin.org/articles/10.3389/fnins.2018.00034/full, https://doi.org/10.3389/fnins.2018.00034 (2018).

Nobukawa, S. et al. Atypical temporal-scale-specific fractal changes in Alzheimers disease (ad). Cognitive Neurodynamics 1–11 (2019).

Luppi, A. I. et al. Consciousness-specific dynamic interactions of brain integration and functional diversity. Nature Communications 10, 1–12, https://www.nature.com/articles/s41467-019-12658-9, https://doi.org/10.1038/s41467-019-12658-9 (2019).

Hudetz, A. G., Liu, X., Pillay, S., Boly, M. & Tononi, G. Propofol anesthesia reduces Lempel-Ziv complexity of spontaneous brain activity in rats. Neuroscience Letters 628, 132–135, http://www.sciencedirect.com/science/article/pii/S0304394016304268, https://doi.org/10.1016/j.neulet.2016.06.017 (2016).

Diestel, R. Graph Theory. Graduate Texts in Mathematics, 5 edn., https://www.springer.com/gb/book/9783662536216 (Springer-Verlag, Berlin Heidelberg, 2017).

Tajima, S., Yanagawa, T., Fujii, N. & Toyoizumi, T. Untangling brain-wide dynamics in consciousness by cross-embedding. PLoS computational biology 11, e1004537 (2015).

Orser, B. A. Depth-of-anesthesia monitor and the frequency of intraoperative awareness (2008).

Lempel, A. & Ziv, J. On the Complexity of Finite Sequences. IEEE Transactions on Information Theory 22, 75–81, https://doi.org/10.1109/TIT.1976.1055501 (1976).

Ziv, J. & Lempel, A. Compression of individual sequences via variable-rate coding. IEEE Transactions on Information Theory 24, 530–536, https://doi.org/10.1109/TIT.1978.1055934 (1978).

Bandt, C. & Pompe, B. Permutation entropy: a natural complexity measure for time series. Physical Review Letters 88, 174102, https://doi.org/10.1103/PhysRevLett.88.174102 (2002).

Viol, A., Palhano-Fontes, F., Onias, H., Araujo, D. B. D. & Viswanathan, G. M. Shannon entropy of brain functional complex networks under the influence of the psychedelic Ayahuasca. Scientific Reports 7, 7388, https://www.nature.com/articles/s41598-017-06854-0, https://doi.org/10.1038/s41598-017-06854-0 (2017).

Pappas, I., Adapa, R. M., Menon, D. K. & Stamatakis, E. A. Brain network disintegration during sedation is mediated by the complexity of sparsely connected regions. NeuroImage, http://www.sciencedirect.com/science/article/pii/S1053811918320597, https://doi.org/10.1016/j.neuroimage.2018.10.078 (2018).

Costa, M., Goldberger, A. L. & Peng, C.-K. Multiscale entropy analysis of biological signals. Physical Review E 71, 021906, https://doi.org/10.1103/PhysRevE.71.021906 (2005).

Aftanas, L. I. et al. Non-linear analysis of emotion EEG: calculation of Kolmogorov entropy and the principal Lyapunov exponent. Neuroscience Letters 226, 13–16, http://www.sciencedirect.com/science/article/pii/S0304394097002322, https://doi.org/10.1016/S0304-3940(97)00232-2 (1997).

Hallquist, M. N. & Hillary, F. G. Graph theory approaches to functional network organization in brain disorders: A critique for a brave new small-world. Network Neuroscience 3, 1–26 (2018).

Arslan, S. et al. Human brain mapping: A systematic comparison of parcellation methods for the human cerebral cortex. Neuroimage 170, 5–30 (2018).

Smith, S. M. et al. Network modelling methods for fmri. Neuroimage 54, 875–891 (2011).

Lachaux, J.-P. et al. Estimating the time-course of coherence between single-trial brain signals: an introduction to wavelet coherence. Neurophysiologie Clinique/Clinical Neurophysiology 32, 157–174, http://www.sciencedirect.com/science/article/pii/S0987705302003015, https://doi.org/10.1016/S0987-7053(02)00301-5 (2002).

Chai, B., Walther, D., Beck, D. & Fei-fei, L. Exploring Functional Connectivities of the Human Brain using Multivariate Information Analysis. In Bengio, Y., Schuurmans, D., Lafferty, J. D., Williams, C. K. I. & Culotta, A. (eds) Advances in Neural Information Processing Systems 22, 270–278, http://papers.nips.cc/paper/3797-exploring-functional-connectivities-of-the-human-brain-using-multivariate-information-analysis.pdf (Curran Associates, Inc., 2009).

Gómez-Verdejo, V., Martínez-Ramón, M., Florensa-Vila, J. & Oliviero, A. Analysis of fMRI time series with mutual information. Medical Image Analysis 16, 451–458, http://www.sciencedirect.com/science/article/pii/S1361841511001629, https://doi.org/10.1016/j.media.2011.11.002 (2012).

Braun, U. et al. Test–retest reliability of resting-state connectivity network characteristics using fmri and graph theoretical measures. Neuroimage 59, 1404–1412 (2012).

Wang, J.-H. et al. Graph theoretical analysis of functional brain networks: test-retest evaluation on short-and long-term resting-state functional mri data. PloS one 6, e21976 (2011).

Fox, M. D. et al. The human brain is intrinsically organized into dynamic, anticorrelated functional networks. Proceedings of the National Academy of Sciences of the United States of America 102, 9673–9678, https://doi.org/10.1073/pnas.0504136102 (2005).

Deshpande, G., Kerssens, C., Sebel, P. S. & Hu, X. Altered Local Coherence in the Default Mode Network due to Sevoflurane Anesthesia. Brain research 1318, 110–121, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2845285/, https://doi.org/10.1016/j.brainres.2009.12.075 (2010).

Boveroux, P., Vanhaudenhuyse, A. & Phillips, C. Breakdown of within- and between-network Resting State during Propofol-induced Loss of Consciousness. Anesthesiology 113, 1038–1053 (2010).

Di Perri, C. et al. Neural correlates of consciousness in patients who have emerged from a minimally conscious state: a cross-sectional multimodal imaging study. The Lancet. Neurology 15, 830–842, https://doi.org/10.1016/S1474-4422(16)00111-3 (2016).

Schwarz, A. J. & McGonigle, J. Negative edges and soft thresholding in complex network analysis of resting state functional connectivity data. Neuroimage 55, 1132–1146 (2011).

Tegmark, M. Improved Measures of Integrated Information. PLoS Computational Biology 12, https://doi.org/10.1371/journal.pcbi.1005123, 1601.02626 (2016).

Stamatakis, E. A., Adapa, R. M., Absalom, A. R. & Menon, D. K. Changes in Resting Neural Connectivity during Propofol Sedation. PLoS One 5, e14224, https://doi.org/10.1371/journal.pone.0014224 (2010).

Whitfield-Gabrieli, S. & Nieto-Castanon, A. Conn: a functional connectivity toolbox for correlated and anticorrelated brain networks. Brain Connectivity 2, 125–141, https://doi.org/10.1089/brain.2012.0073 (2012).

Behzadi, Y., Restom, K., Liau, J. & Liu, T. T. A component based noise correction method (CompCor) for BOLD and perfusion based fMRI. NeuroImage 37, 90–101, https://doi.org/10.1016/j.neuroimage.2007.04.042 (2007).

Ferenets, R., Vanluchene, A., Lipping, T., Heyse, B. & Struys, M. M. R. F. Behavior of Entropy/Complexity Measures of the Electroencephalogram during Propofol-induced SedationDose-dependent Effects of Remifentanil. Anesthesiol. The J. Am. Soc. Anesthesiol. 106, 696–706, https://doi.org/10.1097/01.anes.0000264790.07231.2d (2007).

Kesić, S. & Spasić, S. Z. Application of Higuchi’s fractal dimension from basic to clinical neurophysiology: A review. Computer Methods and Programs in Biomedicine 133, 55–70, http://www.sciencedirect.com/science/article/pii/S0169260715302923, https://doi.org/10.1016/j.cmpb.2016.05.014 (2016).

Pedregosa, F. et al. Scikit-learn: Machine Learning in Python. Journal of Machine Learning Research 12, 2825–2830, http://jmlr.csail.mit.edu/papers/v12/pedregosa11a.html (2011).

Daducci, A. et al. The Connectome Mapper: An Open-Source Processing Pipeline to Map Connectomes with MRI. PLoS One 7, e48121, https://doi.org/10.1371/journal.pone.0048121 (2012).

Kuramoto, Y. Chemical Oscillations, Waves, and Turbulence. Springer Series in Synergetics, https://www.springer.com/us/book/9783642696916 (Springer-Verlag, Berlin Heidelberg, 1984).

Walt, S. V. D., Colbert, S. C. & Varoquaux, G. The NumPy Array: A Structure for Efficient Numerical Computation. Computing in Science & Engineering 13, 22–30, https://doi.org/10.1109/MCSE.2011.37 (2011).

Hagberg, A., Schult, D. & Swart, P. Exploring Network Structure, Dynamics, and Function using NetworkX, https://conference.scipy.org/proceedings/scipy2008/paper_2/ (2008).

Acknowledgements

This work was supported by a grant from the Wellcome Trust: Clinical Research Training Fellowship [to R.A.] (Contract grant number: 083660/Z/07/Z); from the Canada Excellence Research Chairs program (215063) and the Canadian Institute for Advanced research (CIFAR)[to A.M.O.]; Cambridge Biomedical Research Centre and NIHR Senior Investigator Awards [to D.K.M.], the Stephen Erskine Fellowship at Queens’ College, Cambridge [to E.A.S.], the L’Oréal-Unesco for Women in Science Excellence Research Fellowship [to L.N.]; the British Oxygen Professorship of the Royal College of Anaesthetists [to D.K.M.]; the Gates Cambridge Trust [to A.I.L.]; the Oon Khye Beng Ch’Hia Tsio Studentship for Research in Preventive Medicine, administered via Downing College, University of Cambridge [to I.P.]; the Complex Networks & Systems NSF Research Traineeship (grant 1735095) [to T.F.V.]. The research was also supported by the NIHR Brain Injury Healthcare Technology Co-operative based at Cambridge University Hospitals NHS Foundation Trust and University of Cambridge. We would like to thank Victoria Lupson and the staff in the Wolfson Brain Imaging Centre (WBIC) at Addenbrooke’s Hospital for their assistance in scanning. We would like to thank Dian Lu and Olaf Sporns for useful discussions, and all the participants for their contribution to this study.

Author information

Authors and Affiliations

Contributions

T.F.V., A.I.L. and E.A.S. designed and carried out this study. T.F.V. and A.I.L. analysed the data. I.P. contributed to data analysis. A.M.O., L.N., R.A., D.K.M. and E.A.S. were involved in designing the original studies for which the present data were collected. R.A., L.N. and E.A.S. all participated in data collection. A.I.L., T.F.V., D.K.M. and E.A.S. wrote the paper with feedback from all co-authors.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Varley, T.F., Luppi, A.I., Pappas, I. et al. Consciousness & Brain Functional Complexity in Propofol Anaesthesia. Sci Rep 10, 1018 (2020). https://doi.org/10.1038/s41598-020-57695-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-57695-3

This article is cited by

-

Neural complexity is increased after low doses of LSD, but not moderate to high doses of oral THC or methamphetamine

Neuropsychopharmacology (2024)

-

Distributed harmonic patterns of structure-function dependence orchestrate human consciousness

Communications Biology (2023)

-

Whole-brain modelling identifies distinct but convergent paths to unconsciousness in anaesthesia and disorders of consciousness

Communications Biology (2022)

-

The complexity of the stream of consciousness

Communications Biology (2022)

-

Mechanisms Underlying Disorders of Consciousness: Bridging Gaps to Move Toward an Integrated Translational Science

Neurocritical Care (2021)

Comments

By submitting a comment you agree to abide by our Terms and Community Guidelines. If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.