Medical Device & Diagnostic Industry Magazine

Originally Published May 2000

R&D HORIZONS

Though still largely investigational, computer simulations and augmented reality systems are poised to make a dramatic impact on medical treatment.

May 1, 2000

Convicted of murder and sentenced to death, Joseph Paul Jernigan donated his body to science. Following his execution by lethal injection in 1993, the body of the 39-year-old man provided the basis for a remarkable endeavor—The Visible Human Project. This was an undertaking by the National Library of Medicine to create a three-dimensional map of the entire human body by using x-rays, computed tomography (CT), and magnetic resonance imaging (MRI), followed by digital photography. The resulting images of Jernigan's body were processed by computer and used to create 3-D anatomical images.

The Visible Human Male data set—followed by the Visible Human Female data set—has provided the basis for virtual anatomy as a teaching aid, and has become a resource from which other virtual reality systems continue to be developed. Traditionally, textbook images or cadavers were used for training purposes, the former limiting one's perspective of anatomical structures to the two-dimensional plane, and the latter limited in supply and generally allowing one-time use only. Today, virtual reality simulators are becoming the training method of choice in medical schools. Unlike textbook examples, virtual reality simulations allow users to view the anatomy from a wide range of angles and "fly through" organs to examine bodies from the inside. The experience can be highly interactive, allowing students to strip away the various layers of tissues and muscles to examine each organ individually. Unlike cadavers, virtual reality models enable the user to perform a procedure countless times.

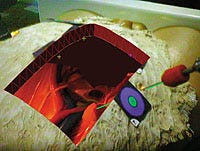

Three-dimensional view (above) of internal organs as seen through a "synthetic pit" generated by a prototype augmented-reality system (left). Photos courtesy of University of North Carolina Department of Computer Science.

The impact of virtual reality (VR) is also being felt in many other areas of the medical industry, including surgery, diagnostics, and rehabilitation. The use of VR and robotics in intraoperative surgery is being explored as a means of enhancing minimally invasive techniques that can replace open surgical procedures. VR images can help guide surgeons during conventional surgery and allow them to practice complex procedures even before they enter the operating room. In terms of diagnosing diseases, the radiology market will probably be affected the most by VR, as virtual endoscopy, bronchoscopy, and laparoscopy replace conventional procedures. This may, in turn, lead to radiologists fulfilling a role now played by surgeons and internists. Similarly, the role of physical therapists may change with the introduction of virtual reality rehabilitation clinics where patients can undergo repetitive training without assistance.

VR AND COMPUTER-AIDED SURGERY

VR entails the use of computer-generated 3-D modeling to simulate real-world phenomena. In medical applications, anatomical accuracy and realism are important for the manipulation of virtual objects in real time. Such heightened realism requires advanced computer hardware and software and convincing sensory feedback.

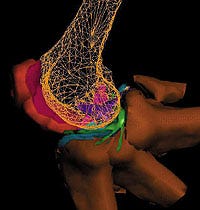

VR images of the structure and movement of the human hand and fingers are used to assist development of joint prostheses. (Courtesy of University of Sheffield Virtual Reality in Medicine and Biology Group; UK)

Haptic interfaces, which give the VR user a sense of touch, are an essential component of all VR simulations. These interfaces enable the user to experience the tactile force feedback of objects, such as the sensation of perforating skin with a surgical instrument. They also allow the user to feel a variety of textures, as well as any changes in texture as a result of manipulating tissues or organs.

The application of computational algorithms and VR visualizations to diagnostic imaging, preoperative surgical planning, and intraoperative surgical navigation is referred to as computer-aided surgery (CAS). Applications include minimally invasive surgical procedures, neurosurgery, orthopedic surgery, plastic surgery, and even coronary artery bypass surgery.

HEAD-MOUNTED DISPLAYS AND AUGMENTED REALITY

Visual interfaces—like haptic interfaces—are used to immerse participants in a virtual environment. These displays range from conventional desktop screens to head-mounted displays, depending on the degree of reality required. Head-mounted displays consist of goggles that afford a stereoscopic view of the computer-generated environment. A sense of motion is created by continuously updating the visual input with positional information derived from the participant's head movements. Collected by a tracking system connected to the display, this information is fed back to the computer controlling the graphics.
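The head-tracking loop described above can be sketched in a few lines. The following Python fragment is a minimal illustration, not any vendor's implementation: it assumes a simplified top-down 2-D scene, and the function name `world_to_head` and the sample coordinates are hypothetical. Each time the tracker reports a new head position and heading, a fixed world point is re-expressed in the viewer's frame, which is what lets a stationary virtual object appear to stay put as the head turns.

```python
import math

def world_to_head(point, head_pos, heading_deg):
    """Re-express a fixed world point (x, y) in the viewer's head frame.

    heading_deg is the tracked head heading, counterclockwise from the +x axis.
    Returns (forward, left) distances relative to the viewer.
    """
    theta = math.radians(heading_deg)
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    # Project the offset onto the head's forward and left axes.
    forward = dx * math.cos(theta) + dy * math.sin(theta)
    left = -dx * math.sin(theta) + dy * math.cos(theta)
    return forward, left

# Hypothetical tracker samples: the head stays in place and turns to its left.
organ = (1.0, 0.0)  # virtual object fixed in the room, 1 m along +x
for heading in (0.0, 45.0, 90.0):
    fwd, left = world_to_head(organ, (0.0, 0.0), heading)
    print(f"heading {heading:5.1f} deg -> forward {fwd:.2f} m, left {left:.2f} m")
```

As the heading increases, the object drifts from straight ahead toward the viewer's right (a negative `left` value), which is the update the graphics computer must redraw on every tracker sample.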

Microvision's high-performance display system uses anatomical, biochemical, and physiological images and other data to guide surgeons through procedures.

Two types of head-mounted displays—video see-through and optical see-through—create an augmented reality that merges a virtual image of the patient's internal organs with the actual view of the patient's body. Both methods allow the user to see real and virtual objects at the same time. Researchers at the University of North Carolina (UNC) department of computer science are exploring the use of augmented reality technology to improve laparoscopic surgery. Traditionally, surgeons have needed to cut through healthy tissue in order to reach the surgical site. With the use of head-mounted displays, the internal organs of the patient are visible through a virtual window that appears superimposed over the patient, enabling surgeons to manipulate instruments with minimal damage to surrounding tissue.

Jeremy Ackerman, researcher at the UNC department of computer science, explains that there are distinct differences between the optical see-through and video see-through displays. With the first method, synthetic and real-world imagery are optically mixed by way of a half-silvered mirror. The user sees the virtual image as a transparent overlay; however, there are no electronics between the user's eye and the real world. With the second method, the user sees both the real and virtual worlds on small displays in the head-mounted unit. The system captures its view of the real world via small, head-mounted video cameras, then digitally adds VR imagery. This combined view allows surgeons to effectively manipulate conventional imagery, and provides previously unavailable perspectives.

Ackerman explains that one limitation of the optical see-through display is the occasional lack of depth perception. Because occlusion is an important depth cue and the transparent virtual image does not fully occlude the real world behind it, this type of head-mounted display can cause users to misperceive depth. In addition, in a brightly lit room, the transparent overlay can be so faint as to be almost imperceptible. Although feedback from doctors using these displays has been positive, other limitations are evident, such as physical strain caused by the weight of the system, poor resolution of the displays, and inaccurate tracking of head and instrument motion.
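The difference between the two display types can be illustrated with simple per-pixel arithmetic. The sketch below is an assumption-laden model rather than a description of the UNC system: it treats the half-silvered mirror as an ideal 50/50 combiner, uses arbitrary luminance units, and all function names are hypothetical. It shows why an optical overlay can never fully hide the real scene and why it fades in a bright room, whereas a video see-through pixel can be made fully opaque.

```python
def optical_see_through(real, virtual, transmit=0.5, reflect=0.5):
    """Light reaching the eye through a half-silvered mirror: the two sources add."""
    return transmit * real + reflect * virtual

def video_see_through(real, virtual, alpha):
    """Digital compositing of a camera pixel and a virtual pixel.

    alpha = 1.0 lets the virtual pixel fully occlude the real one.
    """
    return alpha * virtual + (1.0 - alpha) * real

def overlay_share(real, virtual, transmit=0.5, reflect=0.5):
    """Fraction of the light at the eye that comes from the virtual image."""
    total = optical_see_through(real, virtual, transmit, reflect)
    return reflect * virtual / total if total > 0 else 0.0

# Hypothetical luminances in arbitrary units.
virtual = 50.0
for label, real in (("dim room", 10.0), ("bright room", 1000.0)):
    print(label, "overlay share:", round(overlay_share(real, virtual), 3))
print("video see-through, alpha = 1:", video_see_through(1000.0, virtual, 1.0))
```

In the optical case the real scene always contributes to the result, so the overlay's share of the light shrinks as the room gets brighter. In the video case, setting `alpha` to 1 replaces the camera pixel outright, restoring occlusion as a depth cue.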

Microvision Inc. (Bothell, WA) has designed head-mounted displays that address several of these concerns. The company's retinal scanning technology, which is integrated into its units, uses a safe, low-power beam of light to "paint" rapidly scanned images or information directly across the retina of the eye, generating a high-resolution, full-motion image without the use of electronic screens. The human visual system integrates the pixel elements into a stable, coherent image that appears to be floating in front of the viewer about an arm's length away. The unit is lightweight, and resolution, color fidelity, and contrast have been improved over earlier models, according to the company. Microvision's technology is designed to provide see-through capabilities in intense lighting conditions or outdoor settings, such as those encountered by emergency and intensive-care staff and military medics. The technology allows surgeons to perform surgical procedures while accessing measurement data from image-guided surgery systems and vital patient data, as well as MR, CT, and ultrasound data. The surgeon can access the information as needed during the procedure. This can be useful during surgery where measurement data are critical to excising tumors from the brain, spine, or abdomen, or to inserting pins or screws into bone.

MOTION TRACKING

In VR applications, the user's position and movements are tracked so that virtual images can be updated in real time. Polhemus Inc. (Colchester, VT) has developed the Fastrak system, which can be used to track motion of head-mounted displays, and can be used in conjunction with instrumentation to help locate lesions and tumors. "One of the problems with motion tracking has been the degree of latency," says Bill Panepinto, Polhemus vice president of sales and marketing. "Often, a lengthy lag time in updating images leads people wearing head-mounted displays to feel dizzy." The Fastrak has a lag time of 4 milliseconds, which is barely noticeable to the human eye. The system uses a magnetic tracker—the most widely used tracking system, owing to its low cost, small size, and reasonable accuracy. Unlike optical sensors, electromagnetic sensors do not introduce problems with occlusion or line of sight. "If blood flows over the instrument, it isn't a concern," Panepinto explains. "The sensor still knows where it is because it sends an electromagnetic signal from the receiver to the transmitter." With optical tracking, any time an object or person occludes the optical beam, the equipment stops reporting data, and the procedure is halted.
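A rough calculation shows why lag matters. During a steady head turn, the displayed image trails the head by roughly the turn rate multiplied by the delay. The sketch below uses the 4-millisecond figure quoted above; the head speed of 100 degrees per second and the longer lag values are illustrative assumptions, and the calculation counts only tracker lag, not the additional delay from rendering and display.

```python
def registration_error_deg(head_speed_deg_per_s, latency_ms):
    """Angle by which the displayed image trails the head during a steady turn."""
    return head_speed_deg_per_s * latency_ms / 1000.0

head_speed = 100.0  # hypothetical head turn rate, degrees per second
for latency_ms in (4.0, 50.0, 100.0):  # 4 ms is the Fastrak figure quoted above
    err = registration_error_deg(head_speed, latency_ms)
    print(f"{latency_ms:5.1f} ms lag -> image trails by {err:.1f} deg")
```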

The FastScan system by Polhemus uses the Fastrak system to compile an exact 3D simulation—in real time—of an object that is digitally scanned.

Polhemus has incorporated the Fastrak system into its FastScan, a handheld laser scanner with the company's motion-tracking technology built into the head of the wand. As the wand is swept over the object, the scanner creates an exact three-dimensional replica of the object in real time. This scanner can be used for objects that are prone to movement, such as body parts, by attaching a second tracker receiver to the object. The device is currently in use for radiotherapy, burn treatment, and prosthesis design and manufacture. Duncan Hynd Associates Ltd. (Oxon, UK) has created a 3-D virtual surgery assistant called Prima Facie using the FastScan technology. Physicians and surgeons can use the system to obtain detailed 3-D patient mapping and surface information that is essential for pinpointing the location for radiotherapy treatment. In the manufacture of prosthetic devices and noninvasive masks for burn patients, the Prima Facie system saves time and results in a more accurate fit for the patient, according to the company.

Ascension Technology Corp. (Burlington, VT) manufactures both magnetic and optical trackers. Because magnetic signals are not weakened by the human body, magnetic trackers have no line-of-sight restrictions; however, magnetic signals are susceptible to environmental issues, such as interference from large pieces of metal and strong electric currents. Proper equipment positioning, selection of the optimal update rate, and the comparative immunity of the newer pulsed direct-current magnetic systems have largely overcome these problems. For example, magnetic trackers have been used successfully in orthopedic and neurosurgical suites, and in doctors' offices and outpatient clinics. The elimination of line-of-sight issues allows the trackers to be used inside the body. This reduces or eliminates the need for offset calculations because the tracker can be at or extremely close to the tip of the instrument. Ascension's Flock of Birds and pcBIRD magnetic trackers have been found to be well suited for medical applications where sensor size is not crucial. The Regulus Navigator by Compass International Inc. (Rochester, MN) uses the pcBIRD for preoperative neurosurgical planning and intraoperative guidance. Radiological images are used to localize the surgical field, providing information for precise positioning of surgical instrumentation. The company states that "Regulus registers to preoperatively collected CT and MRI diagnostic images, enabling surgeons to plan and perform many procedures with greater freehand efficiency."
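The offset calculation mentioned above can be made concrete. When a sensor is mounted away from the instrument tip, the tip location is commonly computed as the sensor position plus the sensor's rotation applied to a fixed offset vector. The sketch below is a generic rigid-body calculation, not Ascension's or Compass's software; the function names, the 10-cm offset, and the 1-degree orientation error are hypothetical values chosen for illustration.

```python
import math

def rot_z(deg):
    """3x3 rotation matrix for a rotation of `deg` degrees about the z axis."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def tip_position(sensor_pos, sensor_rot, tip_offset):
    """Tip location = sensor position + (sensor rotation applied to the offset)."""
    return tuple(
        sensor_pos[i] + sum(sensor_rot[i][j] * tip_offset[j] for j in range(3))
        for i in range(3)
    )

def tip_error(offset_m, angle_error_deg):
    """Tip displacement caused by an orientation error, for a given offset length."""
    origin = (0.0, 0.0, 0.0)
    true_tip = tip_position(origin, rot_z(0.0), (offset_m, 0.0, 0.0))
    measured_tip = tip_position(origin, rot_z(angle_error_deg), (offset_m, 0.0, 0.0))
    return math.dist(true_tip, measured_tip)

# Hypothetical case: sensor 10 cm from the tip versus sensor at the tip.
for offset in (0.10, 0.0):
    print(f"offset {offset:.2f} m -> tip error {tip_error(offset, 1.0) * 1000:.2f} mm")
```

With the sensor at the tip, the offset vector has zero length, so an orientation error no longer displaces the computed tip position. This is the benefit the paragraph above attributes to trackers that can be used inside the body.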

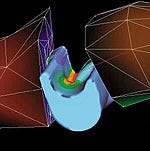

Flexing, finite-element model of the knee provides the basis for VR simulation.

Systems that use optical trackers have the advantage of being inherently more accurate than magnetic trackers, and sensors can be either active (wired) or passive (unwired). Passive systems offer the advantage of eliminating wiring from the surgical field, a feature currently not available with magnetic tracking. There are, however, certain disadvantages to using optical trackers. One such disadvantage is the line-of-sight issue mentioned previously. In addition, available optical systems are bulky and have a large footprint, a drawback in operating rooms where space is limited, and optical tracking is typically too expensive for medical VR applications. Ascension Technology is addressing these issues with a new optical tracker, laserBIRD, which is undergoing beta site testing. The device has a much smaller footprint than other optical trackers currently in use. The laserBIRD can be operated close to the patient (0.25 to 2 m) because of its wide angle and short standoff, decreasing the area in which the beam is susceptible to occlusion or line-of-sight problems.

HAPTIC INTERFACE DEVICES

Force-feedback systems are haptic interfaces that output force reflecting input force and position information obtained from the participant. These devices come in the form of gloves, pens, joysticks, and exoskeletons. In medical applications, it is important that haptic devices convey the entire spectrum of textures—from rigid to elastic to fluid materials. It is also essential that force feedback occur in real time to convey a sense of realism. The Iris Indigo system from Silicon Graphics Inc. (Mountain View, CA) has become a common platform used for haptic devices in many VR applications.
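One widely used way to turn position input into force output is a spring model: when the tracked tool passes a virtual surface, the device pushes back in proportion to the penetration depth. The sketch below illustrates that general idea in one dimension only; it is not the algorithm of any product named in this article, and the function name and stiffness values are hypothetical. A stiffer spring feels more rigid, a softer one more elastic.

```python
def wall_force(tool_pos_m, wall_pos_m, stiffness_n_per_m):
    """Restoring force (N) on a tool that has pushed past a virtual surface.

    Positions are measured along one axis; values greater than wall_pos_m
    are inside the virtual object. The force is zero outside the surface.
    """
    penetration = tool_pos_m - wall_pos_m
    if penetration <= 0:
        return 0.0
    return -stiffness_n_per_m * penetration

# Hypothetical stiffness values for two virtual materials.
materials = {"bone-like (rigid)": 2000.0, "soft tissue (elastic)": 200.0}
for name, k in materials.items():
    force = wall_force(0.012, 0.010, k)  # tool is 2 mm inside the surface
    print(f"{name}: {force:.2f} N")
```

In a real device this calculation would be repeated at a high, steady rate so that the resistance the user feels keeps pace with hand motion.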

A number of companies are incorporating haptic interfaces into VR systems to extend or enhance interactive functionality. SensAble Technologies (Cambridge, MA), a manufacturer of force-feedback interface devices, has developed its Phantom Desktop 3-D Touch System, which supports a workspace of 6 × 5 × 5 in. The system incorporates position sensing with six degrees of freedom and force feedback with three degrees of freedom. A stylus with a range of motion that approximates the lower arm pivoting at the user's wrist enables users to feel the point of the stylus in all axes and to track its orientation, including pitch, roll, and yaw movement. The Phantom haptic device has been incorporated into a desktop display by ReachIn Technologies AB (Stockholm). Developed for a range of medical simulation and dental training applications, the system combines a stereovisual display, haptic interface, and a six-degrees-of-freedom positioner. The user interacts with the virtual world using one hand for navigation and control and the other hand to touch and feel the virtual objects. A semitransparent mirror creates an interface where graphics and haptics are colocated. The result is that the user can see and feel the object in the same place. Among the medical procedures that can be simulated are catheter insertion, needle injections, suturing, and surgical operations. "The desktop and developer displays are being used mainly by medical students in universities and by doctors for presurgical practice," says ReachIn managing director Peter Åberg.

MATTERS OF THE HEART

Virtual reality is making an impact on cardiac care as both a diagnostic and surgical tool. Three-dimensional imaging places the cardiologist inside the heart to treat coronary artery disease by tracking and targeting the appropriate site for stent placement. VR can also benefit the surgeon by providing real-time images of the beating heart and coronary arteries for enhanced visualization during interventional procedures. Use of medical robotics in concert with VR may allow surgeons to perform procedures using techniques that combine the advantages of minimally invasive surgery with the direct visualization and physical simplicity of open-chest surgery. In October 1999, the Zeus Robotics Surgical System developed by Computer Motion (Goleta, CA) was used to perform the first successful closed-chest beating-heart bypass surgery. The system allows the surgeon to remain seated at a monitor during the procedure and use haptic force-feedback instrumentation while performing the surgery. The robotic system mimics the surgeon's hand movements at the surgery site, and compensates for motion caused by the beating heart. The system also filters out the surgeon's hand tremors and other undesirable movements.
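The idea of filtering tremor while following deliberate motion can be illustrated with a simple low-pass filter combined with motion scaling. The sketch below is a generic signal-processing example and makes no claim about how the Zeus system works internally; the function name, the smoothing factor, the scale factor, and the sample data are all hypothetical.

```python
def smooth_and_scale(samples, alpha=0.2, scale=0.5):
    """Low-pass filter hand positions, then scale them down for the instrument.

    alpha: smoothing factor in (0, 1]; smaller values suppress more jitter.
    scale: instrument travel per unit of hand travel.
    """
    if not samples:
        return []
    filtered = samples[0]
    output = []
    for s in samples:
        # Exponential moving average: slow, deliberate motion passes through,
        # while rapid back-and-forth jitter is attenuated.
        filtered = alpha * s + (1.0 - alpha) * filtered
        output.append(scale * filtered)
    return output

# Hypothetical hand positions (mm): held near 10 mm with a +/-1 mm tremor.
hand = [10.0 + (1.0 if i % 2 == 0 else -1.0) for i in range(20)]
tool = smooth_and_scale(hand)
settled = tool[10:]  # ignore the filter's start-up samples
print("hand jitter (mm):", max(hand) - min(hand))
print("tool jitter (mm):", round(max(settled) - min(settled), 3))
```

The filtered, scaled output settles near half the hand position while its jitter is far smaller than the scaled-down tremor alone would be. The trade-off is that heavier smoothing also makes the instrument respond more slowly to intentional movements.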

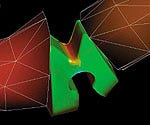

Dynamic 3-D models of the human knee may help develop prosthetic meniscuses.

The combination of robotics technology and haptic interface allows surgeons to perform minimally invasive surgery with a high level of dexterity and precision. Surgeons can extend their manipulation skills, which are ordinarily confined by the limited space within which laparoscopic surgery must be performed. The system allows beating and stopped-heart endoscopic coronary artery bypass grafting to be performed using incisions smaller than the diameter of a pencil. The company suggests that, compared with open-chest surgery, the method results in reduced pain and faster recovery times for patients, which can reduce costs because of shortened hospital stays.

REHABILITATION

VR devices are being used to assess and treat patients who require physical rehabilitation because of physical impairments or degenerative diseases. The CAREN (Computer Assisted Rehabilitation Environment) system, developed by MOTEK Motion Technology Inc. (Manchester, NH), allows the balance behavior of humans to be assessed in a variety of reproducible VR-generated environments. The technology is being studied at two European medical centers to evaluate its diagnostic and therapeutic use in physiotherapy, orthopedics, neurology, and certain degenerative conditions, including Parkinson's disease and multiple sclerosis. The subject wears optical or magnetic markers that record position and orientation. A 3-D virtual environment is selected for projection on a screen in front of the patient. The platform is then controlled according to the type of virtual environment being simulated—such as standing on an escalator, walking a tightrope, or shopping at the supermarket. With the CAREN system, patients are immersed in a virtual environment that operates in a real-time domain. The system can detect and quantify any movement of the patient to compensate for perturbations of the virtual environment—including tremors and anomalies that could escape the notice of physicians. Feeding the resulting data into a human-body model allows the system to calculate joint movement and muscle activation. According to the firm, comparing the generated data with a benchmark previously established for the patient can provide the basis for an early diagnosis, and enable timely intervention.

The use of virtual reality for rehabilitation purposes offers the advantage of testing patients in a controlled environment, according to MOTEK. The testing is dynamic, allowing for scenarios that can be difficult to present by other means. Environments and programs can be adjusted depending on the patient's degree of impairment and treatment goals. The result is that the time required for rehabilitation can be shortened by as much as 50% for certain neuromuscular and skeletal disorders.

Another VR application that has met with success in the treatment of Parkinson's disease is a wearable sensory enhancement aid developed by the human interface technology lab at the University of Washington (Seattle). Parkinson's disease is a progressive neurological disorder that results in movement difficulties. One of the most debilitating symptoms is the eventual inability to walk, which most Parkinson's disease patients experience, even with conventional drug treatments.
