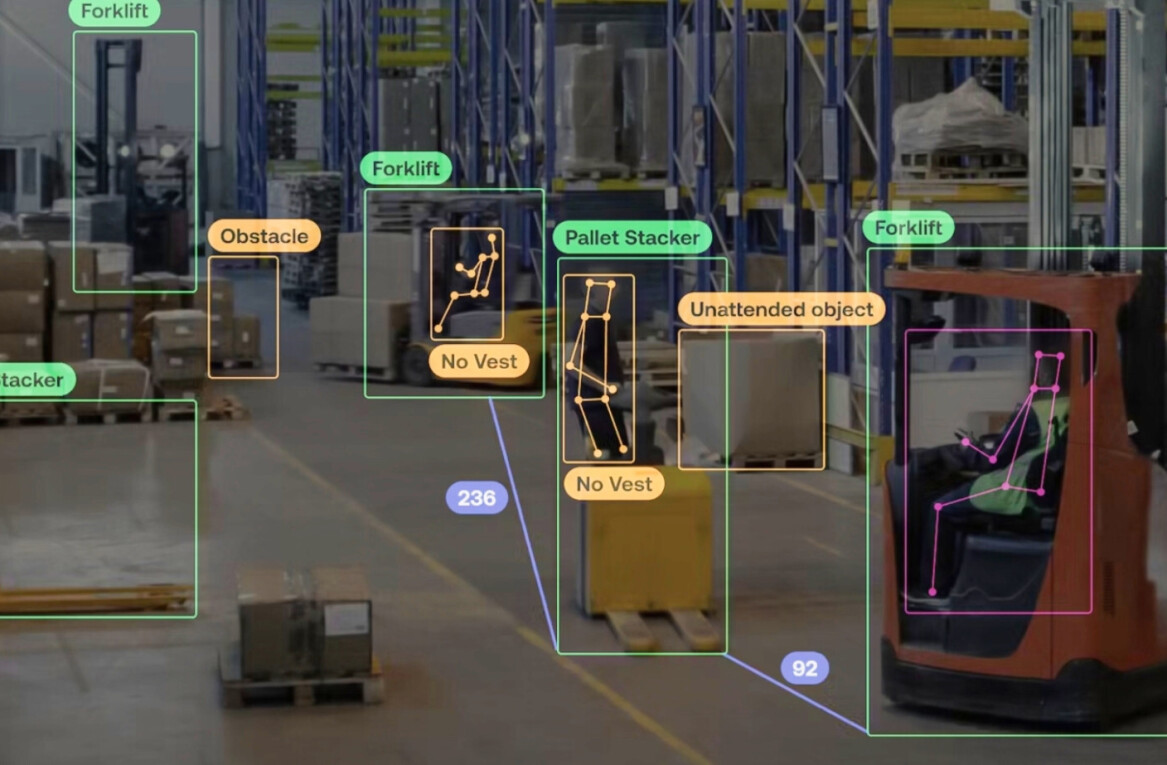

There’s been a recent hullabaloo over the US Army developing killer robots, but we’re here to set you straight. The Pentagon’s official stance: it’s all false. There’s no such thing as killer robots; you’re drunk.

The US Army’s “Advanced Targeting and Lethality Automated System” (ATLAS) project isn’t what you think. I know it sounds bad, but if you ignore every other word, it’s actually quite palatable. “Advanced and Automated” sounds pretty good, doesn’t it? Those are probably the only words you should focus on.

The US Army just happens to be simultaneously developing “optionally-manned” tanks and soliciting white papers for a fully autonomous targeting system capable of bringing a weapon to bear on both vehicle and individual personnel targets. That’s a coincidence. You’re obviously not thinking straight. Perhaps you’re hormonal.

If you went through the US Army’s phone right now you wouldn’t find a single nude (and if you do, it’s because someone else used to have the number, and sometimes the Army gets pics from people it doesn’t know). The point is: you can trust the Army.

In fact, when the Army heard that people like Justin Rohrlich from Quartz were calling the ATLAS project a plan to “turn tanks into AI-powered killing machines,” it immediately amended its official request document with the following addition:

> All development and use of autonomous and semi-autonomous functions in weapon systems, including manned and unmanned platforms, remain subject to the guidelines in the Department of Defense (DoD) Directive 3000.09, which was updated in 2017. Nothing in this notice should be understood to represent a change in DoD policy towards autonomy in weapon systems. All uses of machine learning and artificial intelligence in this program will be evaluated to ensure that they are consistent with DoD legal and ethical standards.

So just calm down. The US Army’s policy against using lethal force remains unchanged. Before an AI-powered murder machine can kill the enemy with impunity, it has to be authorized. It’s the exact same policy the Army has on killer humans. When a fire team enters a combat zone, the soldiers in it are given authority to engage the enemy. But before the team enters a combat zone, its soldiers are not authorized to use lethal force.

It’s the same with the robots. Until a soldier turns the autonomous vehicle on and engages the weapon systems, it won’t shoot anyone. It has to be given orders, just like a person. Besides, according to Gizmodo, this is nothing new:

> The United States has been using robotic planes as offensive weapons in war since at least World War II. But for some reason, Americans of the 21st century are much more concerned about robots on the ground than they are with the robots in the air.

Ah yes, the fully autonomous targeting systems of World War II. Those neural networks spit out the greatest generations.

Still think the Army is making killer robots? You’re wrong. That’s all there is to it. Don’t take our word for it, Breaking Defense has it all figured out:

> But if the Army’s intentions are so modest, why did they inspire such anxious headlines? If ATLAS is so benign, why does it stand for “Advanced Targeting & Lethality Automated System?” The answer lies in both the potential applications of the technology … and the US Army’s longstanding difficulty explaining itself to the tech sector, the scientific community, or the American people.

Honey, the US Army isn’t developing killer robots. It just loves you so much that sometimes it gets scared it’s going to lose you, and it forgets how to properly express itself.

The bottom line is: the US Army isn’t developing AI-powered machines for the purpose of killing humans with impunity. It’s just making machines that are capable of doing that. There, now don’t you feel better?

