- OpenAI, a San Francisco-based research group focused on studying artificial intelligence to help humanity (and co-founded by Elon Musk), has released footage of a robotic hand trained to solve a Rubik's Cube.

- The robotic hand itself is 15 years old, but it is controlled by new neural networks that learned to solve the puzzle in simulation.

- Jumping from simulation to the real world required a new kind of training called automatic domain randomization.

First the robots came for our jobs, then they came for our puzzle games. OpenAI has released footage of a robotic hand that can solve a Rubik's Cube 60 percent of the time. It's all over.

The organization, co-founded by Elon Musk in 2015 to "freely collaborate" with other researchers by making all of its patents and other work public, calls the robot Dactyl. The researchers took on the project because they believe that training a robotic hand to do something this complicated is a step toward general-purpose robots.

"Building robots that are as versatile as humans remains a grand challenge of robotics," the scientists write in a research paper released this week. "While humanoid robotics systems exist, using them in the real world for complex tasks remains a daunting challenge."

From Simulation to the Real World

One of the great challenges in robotics is teaching machines to grasp and hold objects at all, let alone complete complicated tasks like manipulating a Rubik's Cube with one hand. Manual manipulation is often regarded as one of the final barriers to bringing robots into homes or medical settings, because moving the individual digits of a robotic hand demands a high level of dexterity.

Yet that challenge was exactly what OpenAI set out to tackle. Using a technique the researchers have dubbed "automatic domain randomization," they could generate progressively harder environments in simulation, mimicking some of the curveballs that real life would certainly throw at the robot.

That type of training allowed the researchers to train the robot entirely in simulation and still find success in the physical world. That matters because factors like friction, elasticity, and dynamics are hard to model accurately in a simulator.

"This frees us from having an accurate model of the real world, and enables the transfer of neural networks learned in simulation to be applied to the real world," the researchers write.

Automatic domain randomization begins with a single nonrandom environment where the neural network is trained to solve a Rubik's Cube. Then, as the neural network improves at its task over time, reaching a performance threshold, the amount of randomization is automatically increased to make the task more difficult. That way, the neural network must learn to come up with general solutions to random environments, learning until the performance threshold is reached again. The process repeats.
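As a rough illustration of the loop described above, here is a minimal Python sketch. It is not OpenAI's code, and it follows only the description in this article: every name in it (`ParamRange`, `ToyLearner`, `adr_loop`, the `friction` parameter, and the numeric values) is a hypothetical stand-in, and the "learner" is a toy whose skill simply grows with each training step rather than a real neural network.

```python
import random
from dataclasses import dataclass


@dataclass
class ParamRange:
    """One simulated physical parameter (hypothetical, e.g. friction)."""
    nominal: float
    width: float = 0.0  # width 0 means a single nonrandom environment

    def sample(self, rng: random.Random) -> float:
        # Draw a value uniformly from [nominal - width, nominal + width].
        return rng.uniform(self.nominal - self.width, self.nominal + self.width)


class ToyLearner:
    """Stand-in for the neural network: skill rises with every training step."""

    def __init__(self) -> None:
        self.skill = 0.0

    def train_step(self, env: dict) -> None:
        self.skill += 0.125  # assumption: fixed improvement per step

    def evaluate(self, ranges: dict) -> float:
        # Assumption: wider randomization makes the task harder.
        difficulty = sum(r.width for r in ranges.values())
        return max(0.0, min(1.0, self.skill - difficulty))


def adr_loop(ranges, learner, threshold, step, iterations, rng):
    """Train, and widen every range each time performance hits the threshold."""
    widened = 0
    for _ in range(iterations):
        env = {name: r.sample(rng) for name, r in ranges.items()}
        learner.train_step(env)
        if learner.evaluate(ranges) >= threshold:
            for r in ranges.values():
                r.width += step  # more randomization, harder task
            widened += 1
    return widened


ranges = {"friction": ParamRange(nominal=1.0)}
widened = adr_loop(ranges, ToyLearner(), threshold=0.75, step=0.25,
                   iterations=20, rng=random.Random(0))
print(f"ranges widened {widened} times; friction width is now "
      f"{ranges['friction'].width}")
```

The point of the sketch is the control flow: training starts in one fixed environment, and the randomization range only grows once the learner clears the performance bar, so the task gets harder at the pace the learner can handle.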

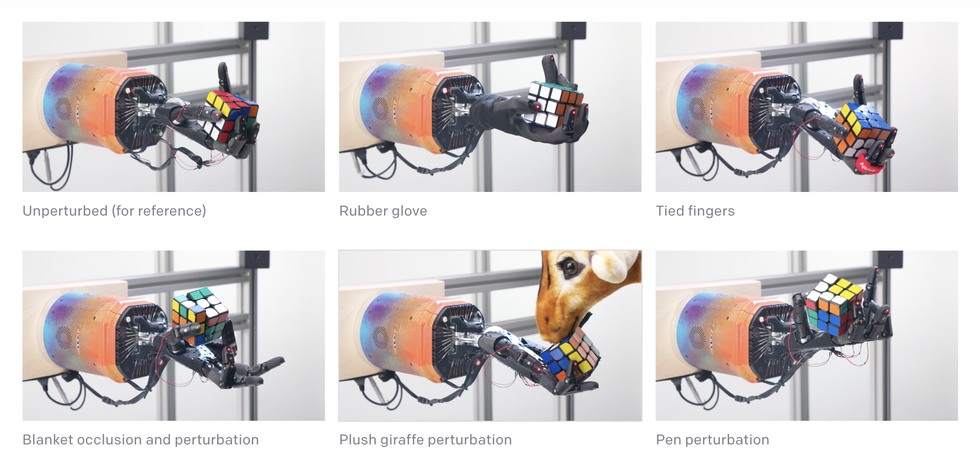

To further test the robot's robustness, the researchers tried to trip it up with a number of foreign objects, including a plastic deer head, a blanket, and even rubber gloves. But the robot still persevered.

Reaching for Generalized Robots

Sure, you can liken the Rubik's Cube-solving robot to generalized robots that can complete a number of tasks in the real world, adeptly adapting to new challenges that their neural networks haven't been directly taught to handle in simulation. There's just one problem: Researchers have already criticized that comparison as inherently problematic.

“There can be an impression that there’s one unified theory or system, and now OpenAI’s just applying it to this task and that task,” Dmitry Berenson, a roboticist at the University of Michigan who specializes in machine manipulation, told MIT Technology Review. “But that’s not what’s happening at all. These are isolated tasks. There are common components, but there’s also a huge amount of engineering here to make each new task work.”

“That’s why I feel a little bit uncomfortable with the claims about this leading to general-purpose robots,” he said. “I see this as a very specific system meant for a specific application.”

At the heart of the problem is reinforcement learning itself. The technique trains a system on one specific skill, which it can then perform even when it meets obstacles it wasn't trained on. The real world, however, poses countless risks for a Rubik's Cube-solving robot, let alone one that cleans your kitchen or takes care of your grandparents.