Nov. 25, 2020 — The Oct. 20, 2020, XSEDE ECSS Symposium featured overviews of two new NSF-funded HPC systems at the Pittsburgh Supercomputing Center (PSC). The new resources, called Bridges-2 and Neocortex, will continue the center’s exploration of scaling HPC for data science and AI on behalf of new communities and research paradigms. PSC is currently preparing early user programs for both systems, which will be available at no cost for research and education, and at cost-recovery rates for other purposes.

Bridges-2: Scaling Deep Learning and Data Science for Expanding Applications

“One of the motivations for us to build Bridges-2 was rapidly evolving science and engineering,” said Shawn Brown, PSC’s director and PI for the system, introducing the $20 million, XSEDE-allocated HPC platform, integrated by HPE. “The landscape of high performance computing and computational research has changed drastically over the last decade; we really wanted to build a machine that supported the new ways of doing computational science and not necessarily only traditional computational science,” he added, citing artificial intelligence and complex data science in particular.

Bridges-2’s predecessor, Bridges, broke new ground in easing entry to heterogeneous HPC for research communities that had never before required computing, let alone supercomputing. Bridges-2 will continue this mission and add expanded capabilities for scalable HPC-powered AI; data-centric computing, both in fields that require massive datasets and in those that work with many small datasets; and research via popular cloud-based applications, containers and user-focused platforms.

“We’re not just going to be supporting the command line, we want to be able to support all sorts of modes of computation to make this as applicable to [new] communities as possible,” Brown said. “We [want to] remove barriers to people using high performance computing for their research rather than us training them to do it the way that we do things—we want to … enable them to do their research in their own particular idiom.”

Like Bridges, Bridges-2 will be a heterogeneous system, designed to run complex workflows that draw on different types of computational nodes quickly and efficiently. Its resources will include:

- 488 regular-memory (RM) nodes with 256 GB of RAM and 16 large-memory (LM) nodes with 512 GB of RAM, each featuring two AMD EPYC “Rome” 7742 CPUs

- Four extreme-memory (EM) nodes with 4 TB of RAM and four Intel Xeon Platinum 8260M “Cascade Lake” CPUs each

- 24 GPU nodes, each with eight NVIDIA Tesla V100-32 GB SXM2 GPUs, two Intel Xeon Gold “Cascade Lake” CPUs and 512 GB of RAM

- A Mellanox ConnectX-6 HDR InfiniBand 200Gb/s interconnect

- An efficient tiered storage system including a flash array with more than 100 TB of usable storage; a Lustre file system with 21 PB of raw storage; and an HPE StoreEver MSL6480 Tape Library with 7.2 PB of uncompressed and ~8.6 PB of compressed capacity
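To put the compute portion of that list in perspective, a short Python sketch can total it. The per-node counts and memory sizes are copied from the list; the `NodeType` structure and the `summarize` helper are hypothetical, written only for this illustration. The sketch assumes 1 TB = 1,024 GB for the EM nodes (the binary convention usually used for RAM), and it leaves out storage and CPU cores.

```python
# Hypothetical tally of the Bridges-2 node list; the figures come from the
# article, while the data layout and helper names are illustrative only.
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeType:
    name: str
    count: int            # number of nodes of this type
    ram_gb: int           # host RAM per node, in GB (assumes 1 TB = 1,024 GB)
    gpus_per_node: int = 0
    gpu_mem_gb: int = 0   # memory per GPU, in GB


NODE_TYPES = [
    NodeType("RM", 488, 256),
    NodeType("LM", 16, 512),
    NodeType("EM", 4, 4 * 1024),
    NodeType("GPU", 24, 512, gpus_per_node=8, gpu_mem_gb=32),
]


def summarize(node_types):
    """Return total nodes, host RAM (GB), GPU count and GPU memory (GB)."""
    nodes = sum(n.count for n in node_types)
    ram_gb = sum(n.count * n.ram_gb for n in node_types)
    gpus = sum(n.count * n.gpus_per_node for n in node_types)
    gpu_mem_gb = sum(n.count * n.gpus_per_node * n.gpu_mem_gb for n in node_types)
    return {"nodes": nodes, "ram_gb": ram_gb, "gpus": gpus, "gpu_mem_gb": gpu_mem_gb}


if __name__ == "__main__":
    for key, value in summarize(NODE_TYPES).items():
        print(f"{key}: {value:,}")
```

Run as written, the sketch reports 532 nodes, 161,792 GB (158 TB, binary) of host RAM, and 192 GPUs carrying 6,144 GB of GPU memory in total.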

“We want Bridges-2 … to work interoperably with all sorts of different [computational resources], including workflows, engines, heterogeneous computing, cloud resources,” Brown said. “We want this thing to be a member of the ecosystem—not just a standalone machine, but really a resource that’s widely available and applicable to a number of different rapidly evolving research paradigms.”

PSC will be integrating Bridges-2 with its existing Bridges-AI system, which features an NVIDIA DGX-2 enterprise research AI system with 16 tightly coupled NVIDIA Tesla V100 (Volta) GPUs, each with 32 GB of GPU memory.

Brown encouraged researchers to take advantage of Bridges-2’s Early User Program, which is now accepting proposals and is scheduled to begin early in 2021. This program will allow users to port, tune and optimize their applications early, and make progress on their research, while providing PSC with feedback on the system and how it can be better tuned to users’ needs. Information on applying as well as program updates can be found at https://psc.edu/bridges-2/eup-apply.

Updates on the system in general can be found at http://www.psc.edu/resources/computing/bridges-2.

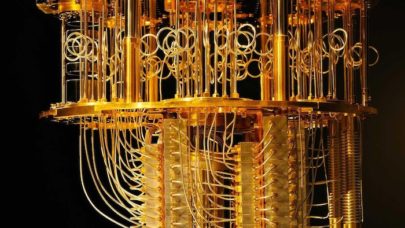

Neocortex: Democratizing Access to Game-Changing Compute Power in Deep Learning

Sergiu Sanielevici, Neocortex’s co-PI and director of user support for scientific applications at PSC, introduced the $5 million Cerebras Systems/HPE system on behalf of PI Paola Buitrago, director of artificial intelligence & data science at PSC. Neocortex was funded through NSF’s new Category II awards, which support systems intended to explore innovative HPC architectures. Neocortex will feature two Cerebras CS-1 systems and an HPE Superdome Flex HPC server robustly provisioned to drive the CS-1 systems simultaneously at maximum speed and support the complementary requirements of AI and high performance data analytics workflows.

“Neocortex is specifically designed for AI training—to explore how [the CS-1s] can be used, how that can be integrated into research workflows,” Sanielevici said. “We want to get to this ecosystem that [spans] from what Bridges-2 can do … to the things that really require this specialized hardware that our partners at Cerebras provide.”

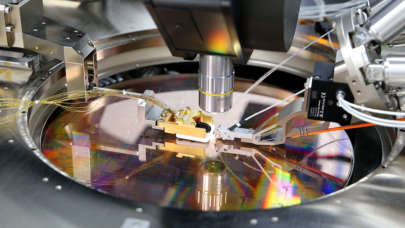

At the heart of the CS-1 is Cerebras’ Wafer Scale Engine, a new generation of “wafer-scale” processor and the largest chip ever built: 46,225 square millimeters of silicon featuring 1.2 trillion transistors. The system is designed to accelerate training, shortening this critical and lengthy stage of deep learning.

“Machine-learning workflows are of course not simple,” Sanielevici said. “Training is not a linear process … it’s a highly iterative process with lots of parameters. The goal here is to vastly shorten the time required for deep learning training and in the larger ecosystem foster integration of deep learning with scientific workflows—to really see what this revolutionary hardware can do.”

The CS-1 fabric connects cluster-scale compute in a single system, eliminating communication bottlenecks and making model-parallel training easy, he added. Because it avoids the orchestration and synchronization overhead of a multi-node cluster, the system can train with small batches at high utilization, removing the need for the tricky learning schedules and optimizers that large-batch training often requires.

A major design innovation was to connect the two CS-1 systems via an HPE Superdome Flex server. The combination is expected to provide substantial capability for preprocessing and other complementary aspects of AI workflows, enabling training on very large datasets with exceptional ease and supporting the two CS-1s both independently and together to explore scaling.

Neocortex accepted early user proposals from August through September 2020; 42 applications are currently being assessed. Proposals represent research areas including AI Theory, Bioinformatics, Neurophysiology, Materials Science, Electrical and Computer Engineering, Medical Imaging, Geophysics, Civil Engineering, IoT, Social Science, Drug Discovery, Fluid Dynamics, Ecology and Chemistry. Information about the system and its progress can be found at https://www.cmu.edu/psc/aibd/neocortex/.

You can find a video and slides for both presentations at https://www.xsede.org/for-users/ecss/ecss-symposium.

Source: XSEDE