Princeton researchers' latest salt grain-sized camera has massive potential

Cameras have many uses in medicine and other fields, but typical modern cameras are too large for many medical applications. Researchers from Princeton University and the University of Washington have teamed up to create an extremely small camera about the size of a coarse grain of salt. Cameras this small have excellent potential for exploring inside the human body, among other things.

While cameras like this have obvious potential in medicine, they could also be built into other devices, including extremely small robots, giving them vastly improved sensing capability. This salt grain-sized camera certainly isn't the first minuscule camera ever created. Back in 2011, researchers created a similarly sized camera. The big advance with the new camera is its vastly improved image quality.

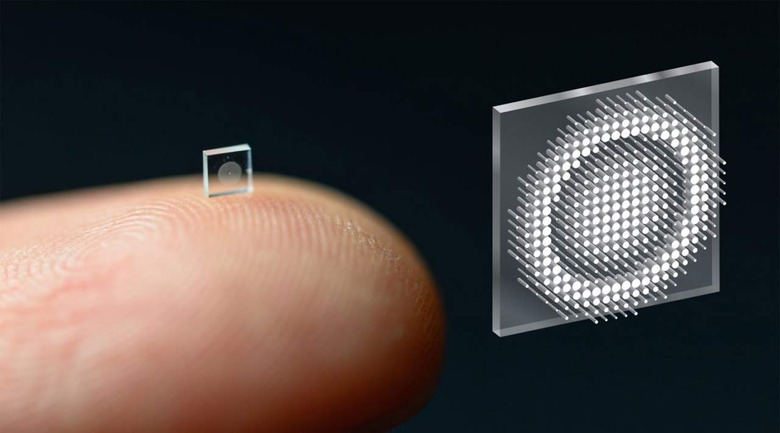

In the past, tiny cameras could only capture fuzzy, distorted images with a limited field of view. The accompanying image shows how much the new camera improves on older camera systems. It can capture full-color images comparable to those from a conventional camera lens 500,000 times larger in volume.

To build a camera this small with such high resolution, the researchers designed the camera hardware and the computational processing jointly. They believe the system could lead to minimally invasive endoscopes or medical robots able to explore the human body to diagnose and treat various diseases and conditions. In addition, the camera's very small size is ideal for improving imaging on any type of robot with significant size and weight constraints.

Another interesting possibility is using the camera system to build arrays of thousands of camera sensors for full-scene sensing, turning an entire surface into a camera. To create the new optical system, researchers relied on something called a metasurface, which is manufactured in much the same way as a computer chip. The metasurface is studded with 1.6 million cylindrical posts, each approximately the size of the human immunodeficiency virus.

Each of those cylindrical posts has a unique shape and functions as an optical antenna. Varying the design of the posts is necessary to shape the entire optical wavefront. Researchers used machine learning algorithms to control how the posts interact with light, producing the highest-quality images and widest field of view achieved so far with a full-color metasurface.

A key to the camera's improved performance is the integrated design of the optical surface and the signal processing algorithms that produce the image. Designing the two together gave the camera a significant boost in performance in natural light conditions. Past metasurface cameras required laser light in a laboratory or other ideal conditions to produce high-quality images.
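To give a rough feel for why pairing optics with signal processing helps, here is a minimal Python sketch in which software undoes a known optical blur. It is not the team's method: the article describes neural-based processing designed together with the metasurface, while this toy uses a classical Wiener filter, an assumed Gaussian blur, and a made-up test pattern. Every function name and parameter below is hypothetical.

```python
import numpy as np

def gaussian_psf(size, sigma):
    """Normalized 2-D Gaussian blur kernel (an assumed stand-in for real optics)."""
    ax = np.arange(size) - size // 2
    xx, yy = np.meshgrid(ax, ax)
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def blur(image, psf):
    """Simulate the optics as a circular convolution computed with the FFT."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * otf))

def wiener_deconvolve(measured, psf, noise_to_signal=1e-4):
    """Classical Wiener filter: invert the known blur while limiting noise gain."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    filt = np.conj(otf) / (np.abs(otf) ** 2 + noise_to_signal)
    return np.real(np.fft.ifft2(np.fft.fft2(measured) * filt))

# Toy scene: a bright cross on a dark background (made-up test pattern).
rng = np.random.default_rng(0)
scene = np.zeros((64, 64))
scene[20:44, 28:36] = 1.0
scene[28:36, 12:52] = 1.0

psf = gaussian_psf(64, sigma=2.0)
measured = blur(scene, psf) + rng.normal(0.0, 0.002, scene.shape)  # blurry, noisy capture
restored = wiener_deconvolve(measured, psf)

err_blurred = np.mean((measured - scene) ** 2)
err_restored = np.mean((restored - scene) ** 2)
print(f"MSE of raw capture: {err_blurred:.5f}")
print(f"MSE after processing: {err_restored:.5f}")
```

Because the software knows how the optics blur the scene, it can recover detail the raw capture lost. The researchers go a step further by designing the optics and the processing together rather than fixing one and tuning the other.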

Comparing images from the new system with those from similar past systems, the team found that, aside from some blurring at the edges of the frame, images taken by their camera are comparable to those from a traditional lens with more than 500,000 times the volume. Researcher Ethan Tseng said it was a challenge to design and configure the microstructures to do what the team wanted. Before their research, it was unclear how to co-design millions of nanostructures together with post-processing for the task of capturing large field-of-view RGB images.
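The 500,000-times figure is a volume ratio, which can sound more extreme than the difference in width or height. As a back-of-the-envelope check, and assuming the two optical systems have roughly the same proportions (an assumption, not something the researchers state), the ratio of their linear dimensions is the cube root of the volume ratio:

```python
# Volume ratio quoted for the conventional lens versus the tiny camera.
volume_ratio = 500_000

# Assumption: both systems have similar proportions, so volume scales
# with the cube of any linear dimension (width, height, or depth).
linear_ratio = volume_ratio ** (1.0 / 3.0)

print(f"Conventional lens is roughly {linear_ratio:.1f}x larger in each dimension")
```

Under that assumption, the conventional lens is a little under 80 times larger in each dimension.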

Researcher Joseph Mait, who wasn't involved in the research, says that while the team's approach to optical design isn't new, their system is the first to use optical surface technology in the front end and neural-based processing in the back end. Currently, project researchers are working on adding more computational abilities to the camera.

They want the computational abilities to extend beyond optimizing image quality to include object detection and other sensing capabilities relevant to medicine and robotics. One tantalizing prospect is that the breakthrough could change smartphones as we know them. Current smartphones use multiple camera sensors in an array on the back of the device. The metasurface imaging system the researchers have created, however, could eventually turn the entire back of a smartphone into one giant camera sensor.

Tiny imaging systems of this type could be integrated into swallowable cameras that provide high-resolution images of the gastrointestinal tract to search for cancer or other disease conditions without invasive surgery. Many surgeries performed in hospitals worldwide use laparoscopic techniques, in which tiny cameras and instruments are inserted through small incisions in the patient's body. The significantly smaller tools this technology makes possible would mean even smaller incisions and faster recovery times after surgery.

In July 2020, we talked a bit about a small camera system researchers created that can be attached to the back of an insect. That entire system was significantly larger than the metasurface the scientists have created in this recent work. The researchers are continuing to improve their metasurface imaging system, and there's no indication of when a commercial product might result from their work.