Alphabet subsidiary DeepMind is developing a framework that can help weed out human biases in decision-making machine learning algorithms.

October 8, 2019

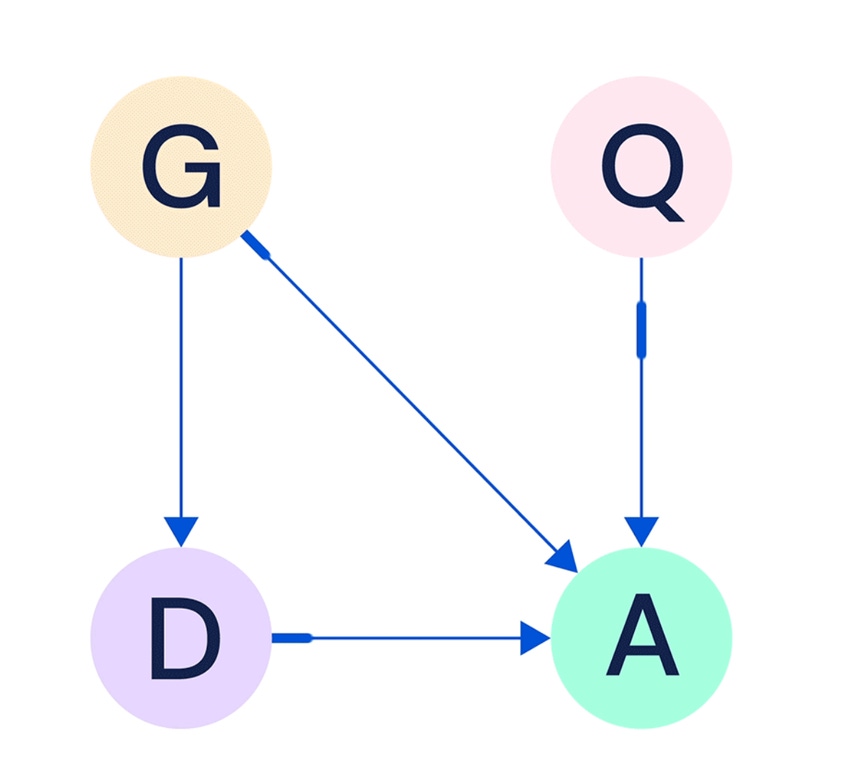

In DeepMind's hypothetical college admissions example, qualifications (Q), gender (G), and choice of department (D) all factor into whether a candidate is admitted (A). A Causal Bayesian Network can distinguish causal from non-causal relationships between these factors and help look for unfairness. In this example, gender can have an indirect effect on admission through its influence on choice of department. (Image source: DeepMind)

DeepMind, a subsidiary of Alphabet (Google's parent company), is working to remove the inherent human biases from machine learning algorithms.

The increased deployment of artificial intelligence and machine learning algorithms into the real world has coincided with increased concerns over biases in the algorithms' decision making. From loan and job applications to surveillance and even criminal justice, AI has been shown to exhibit bias – particularly in terms of race and gender – in its decision making.

Researchers at DeepMind believe they've developed a useful framework for identifying and removing unfairness in AI decision making. It is built on Causal Bayesian Networks (CBNs), graphical models that represent the causal relationships among the variables in a dataset and help experts spot factors that may be unfairly influencing or skewing others. The researchers describe their methodology in two recent papers, "A Causal Bayesian Networks Viewpoint on Fairness" and "Path-Specific Counterfactual Fairness."

“By defining unfairness as the presence of a harmful influence from the sensitive attribute in the graph, CBNs provide us with a simple and intuitive visual tool for describing different possible unfairness scenarios underlying a dataset,” Silvia Chiappa and William S. Isaac, the authors of the studies, wrote in a blog post. “In addition, CBNs provide us with a powerful quantitative tool to measure unfairness in a dataset and to help researchers develop techniques for addressing it.”

To describe how CBNs can be applied to machine learning, Chiappa and Isaac use the example of a hypothetical college admissions algorithm. Imagine an algorithm designed to approve or reject applicants based on their qualifications, choice of department, and gender. Qualifications and gender can both have a direct influence on whether a candidate is admitted, but gender can also have an indirect influence through its effect on choice of department. If a man and a woman with equal qualifications apply to the same department and that department admits men at a far higher rate, gender is influencing admission directly, and that influence would be considered unfair.

“The direct influence captures the fact that individuals with the same qualifications who are applying to the same department might be treated differently based on their gender,” the researchers wrote. “The indirect influence captures differing admission rates between female and male applicants due to their differing department choices.”
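To make the direct/indirect distinction concrete, here is a minimal Python sketch of a simplified, linear version of the admissions network. It assumes the edges G→D, G→A, D→A and Q→A, a continuous admission score in place of an accept/reject decision, and made-up coefficients (`W_GD`, `W_GA`, `W_DA`, `W_QA` and the helper functions are hypothetical, not values or code from DeepMind's papers). Each path's effect is estimated with a path-specific counterfactual: gender is switched from 0 to 1 along one path while the other path keeps the baseline value, and the noise terms are shared so every comparison is made for the same simulated applicant.

```python
import random

# Hypothetical linear structural coefficients (illustrative assumptions only).
# Coding: G = 1 for female, 0 for male; a larger D means a more selective department.
W_GD = 0.8   # gender -> department choice
W_GA = -0.2  # gender -> admission score (direct path)
W_DA = -0.5  # department -> admission score (second leg of the indirect path)
W_QA = 1.0   # qualifications -> admission score


def admission_score(g_direct, g_via_dept, noise):
    """Admission score when gender takes the value g_direct on the path G->A
    and the value g_via_dept on the path G->D->A."""
    q, u_d, u_a = noise
    d = W_GD * g_via_dept + u_d  # department choice depends on gender
    return W_QA * q + W_GA * g_direct + W_DA * d + u_a


def path_effects(n=10_000, seed=0):
    """Average total, direct and indirect effects of switching G from 0 to 1."""
    rng = random.Random(seed)
    total = direct = indirect = 0.0
    for _ in range(n):
        # Shared exogenous terms: qualifications plus unobserved noise for D and A.
        noise = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        baseline = admission_score(0, 0, noise)
        total += admission_score(1, 1, noise) - baseline
        direct += admission_score(1, 0, noise) - baseline
        indirect += admission_score(0, 1, noise) - baseline
    return total / n, direct / n, indirect / n


if __name__ == "__main__":
    total, direct, indirect = path_effects()
    print(f"total={total:.3f} direct={direct:.3f} indirect={indirect:.3f}")
```

Run as a script, it prints `total=-0.600 direct=-0.200 indirect=-0.400`. Because this toy model is linear, the direct effect is simply `W_GA` and the indirect effect is `W_GD * W_DA`, and the two add up to the total. What carries over to non-linear networks is the counterfactual recipe, although there the noise terms must be inferred from data rather than simulated, which is part of why the researchers call counterfactual inference in complex settings difficult. Deciding which of these paths counts as unfair remains a separate, context-dependent judgment that the code does not make.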

This is not to say the algorithm is capable of correcting itself, however. Human experts would still need to make any adjustments to its decision making. And while a CBN could provide insights into fair and unfair relationships among the variables in a given dataset, it would ultimately fall on humans to take steps, proactively or retroactively, to ensure the algorithms are making fair decisions.

“While it is important to acknowledge the limitations and difficulties of using this tool – such as identifying a CBN that accurately describes the dataset’s generation, dealing with confounding variables, and performing counterfactual inference in complex settings – this unique combination of capabilities could enable a deeper understanding of complex systems and allow us to better align decision systems with society's values,” Chiappa and Isaac wrote.

Improving the algorithms themselves is only half of the work needed to safeguard against bias in AI. Figures from studies such as one conducted by New York University's AI Now Institute suggest there is also a need to increase diversity among the engineers and developers who create these algorithms. For example, as of this year only 10 percent of the AI research staff at Google was female, according to the study.

Chris Wiltz is a Senior Editor at Design News covering emerging technologies including AI, VR/AR, blockchain, and robotics.
