EMERGING TECH

Intel Corp. has achieved another key milestone on the road to developing neuromorphic processors modeled on the human brain that are designed to provide a more energy-efficient alternative to existing processing architectures.

The chipmaker said today it has deployed the largest neuromorphic system ever made to Sandia National Laboratories, which is run by the U.S. Department of Energy’s National Nuclear Security Administration. There, it will be used to support research into futuristic, brain-inspired artificial intelligence and tackle challenges relating to the sustainability of AI models.

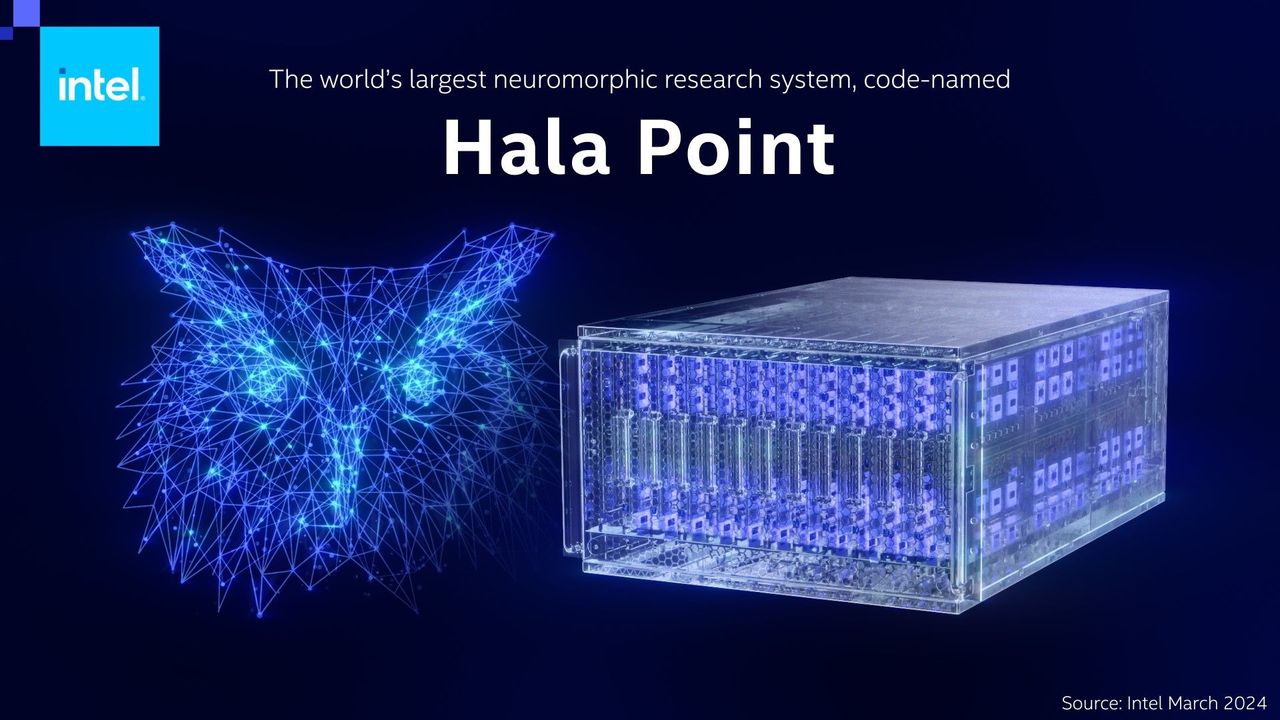

Intel calls this new system Hala Point, and it’s a major advance on the company’s first-generation neuromorphic chip system, called Pohoiki Springs, delivering 10 times more neuron capacity and up to 12 times higher performance.

Neuromorphic computing is an entirely new kind of semiconductor design approach that’s focused on building computer chips that function more like the human brain, with the basic idea being that the more neurons you have inside a chip, the more powerful it is. The approach draws on neuroscience insights that integrate memory and compute with highly granular parallelism in order to minimize data movement.

By employing brain-inspired computing principles such as asynchronous processing, event-based spiking neural networks, integrated memory and computing and sparse and continuously changing connections, it’s possible to achieve orders-of-magnitude increases in energy efficiency and performance, Intel says. The neurons communicate directly with one another, rather than going via the chip’s onboard memory, significantly reducing energy consumption.
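To make the idea of an event-based spiking neuron concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a textbook model that is often used to introduce spiking networks. It is not Intel's Loihi 2 neuron model or programming interface; the class name `LIFNeuron`, the `threshold` and `leak` values and the `run` helper are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Illustrative leaky integrate-and-fire neuron (hypothetical parameters)."""
    threshold: float = 1.0   # membrane potential at which the neuron fires
    leak: float = 0.9        # fraction of potential retained each time step
    potential: float = 0.0   # state lives with the neuron, not in shared memory

    def step(self, input_current: float) -> bool:
        """Advance one time step; return True if the neuron emits a spike."""
        # Decay the stored potential, then integrate the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(inputs):
    """Return the time steps at which a fresh neuron spikes for the given inputs."""
    neuron = LIFNeuron()
    return [t for t, current in enumerate(inputs) if neuron.step(current)]


if __name__ == "__main__":
    # The output is a sparse list of spike times, not a dense activation value.
    print(run([0.6, 0.6, 0.0, 0.0, 1.2]))
```

The neuron produces output only when its potential crosses the threshold, so downstream work is triggered by sparse events rather than by every time step, which is the property the efficiency claims above rest on.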

Mike Davies, director of the Neuromorphic Computing Lab at Intel Labs, said the main benefit of the neuromorphic processors will be seen in AI, where computing costs are rising at unsustainable rates. The newly released AI Index Report from the Stanford Institute for Human-Centered Artificial Intelligence revealed the multimillion-dollar costs involved in developing the most advanced large language models, such as OpenAI’s GPT-4 and Google LLC’s Gemini Pro, highlighting the need for more efficient compute resources.

Image: Intel

In a briefing with SiliconANGLE, Davies said AI cost efficiency is one of the most obvious use cases for neuromorphic computing. “We need power plants near data centers to create these models,” he said. “It’s hard to see how this can go to even one more order of magnitude.”

Although they’re still something of a bleeding-edge development, Intel hopes its neuromorphic chips will pave the way for it to compete with Nvidia Corp., which currently dominates the AI processing industry with its graphics processing units.

“This is a different model that nature has given us, but the challenge is that it’s so different that it’s not an easy nut to crack,” Davies added.

With Hala Point, Intel says, it has built the first large-scale neuromorphic chip system that can demonstrate “state-of-the-art” computational efficiencies on mainstream AI workloads.

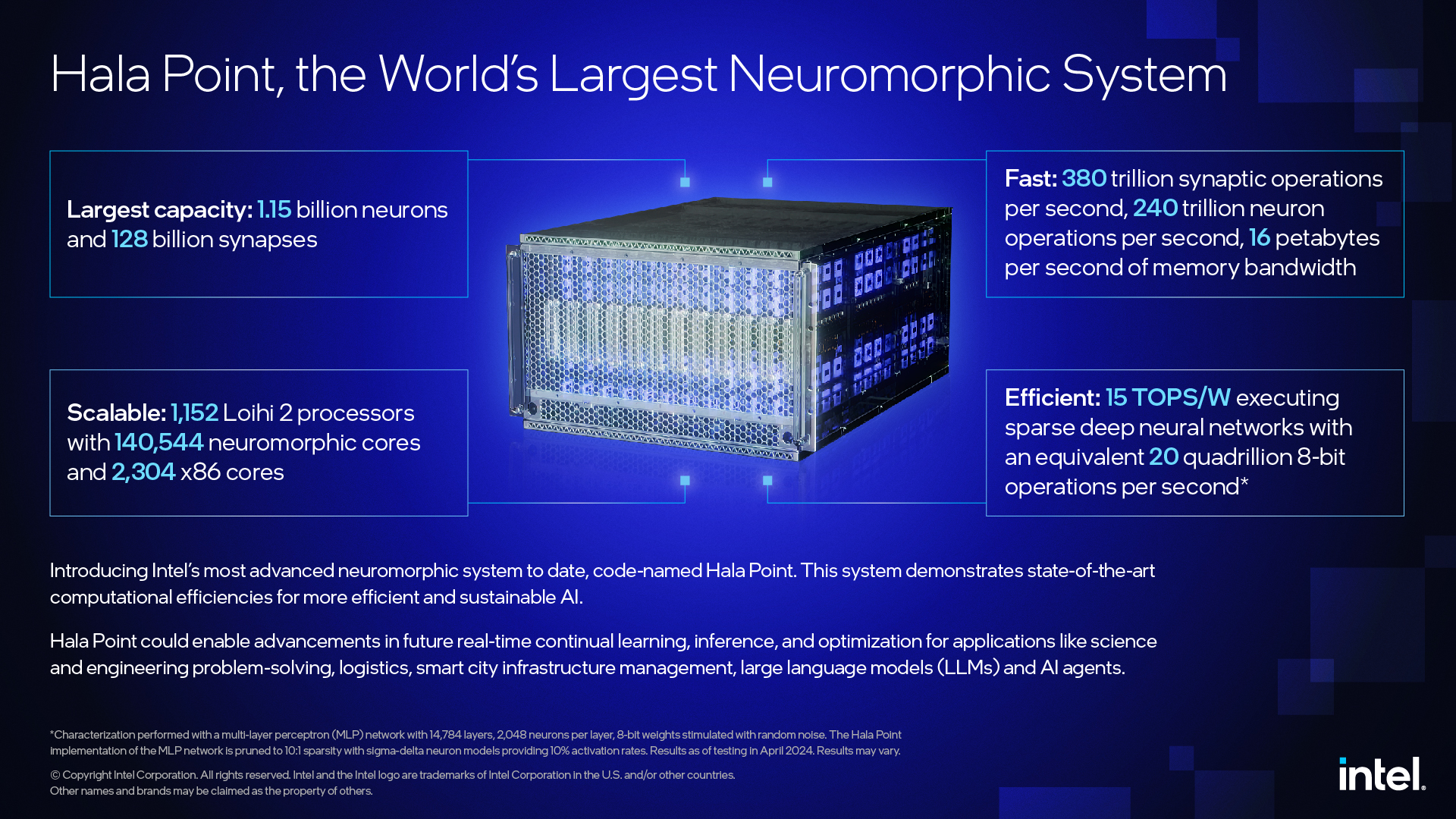

The Hala Point system packages 1,152 Loihi 2 processors in a six-rack-unit data center chassis that’s about the same size as a microwave oven. The system supports up to 1.15 billion neurons and 128 billion synapses distributed over 140,544 neuromorphic processing cores that consume a maximum of 2,600 watts of power. It’s integrated with 2,300 embedded x86 processors that handle ancillary computations.

By integrating processing, memory and communication into a single, massively parallelized fabric, Intel says, the system can provide up to 16 petabytes per second of memory bandwidth, along with 11 petabytes per second of intercore communication bandwidth and 5.5 terabytes per second of interchip communication bandwidth. With this level of performance, it can process more than 380 trillion eight-bit synaptic operations per second, and more than 240 trillion neuron operations per second.

In terms of processing conventional deep neural networks, Hala Point can support a whopping 30 quadrillion operations per second, or 30 petaops, with an efficiency that exceeds 15 trillion eight-bit operations per second per watt. In simpler terms, Intel said, Hala Point’s neuron capacity is equivalent to an owl’s brain, or the cortex of a capuchin monkey.
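For a sense of scale, the headline figures can be broken down with some back-of-the-envelope arithmetic. The sketch below uses only the numbers quoted in this article; the derived per-chip and per-core values are simple averages computed here, not specifications published by Intel, and the variable names are illustrative.

```python
# Figures as quoted in the article.
CHIPS = 1_152                  # Loihi 2 processors
CORES = 140_544                # neuromorphic processing cores
NEURONS = 1.15e9               # up to 1.15 billion neurons
SYNAPSES = 128e9               # up to 128 billion synapses
MAX_WATTS = 2_600              # stated maximum power draw
DNN_OPS_PER_SEC = 30e15        # 30 petaops on conventional deep neural networks
OPS_PER_SEC_PER_WATT = 15e12   # efficiency "exceeds" this value

# Derived averages (assumption: resources are spread evenly across the system).
cores_per_chip = CORES // CHIPS
neurons_per_core = NEURONS / CORES
synapses_per_neuron = SYNAPSES / NEURONS

# Power implied by the throughput and efficiency figures taken together.
# Because efficiency "exceeds" 15 trillion ops/s per watt, this is an upper estimate.
implied_watts = DNN_OPS_PER_SEC / OPS_PER_SEC_PER_WATT

if __name__ == "__main__":
    print(f"cores per chip:       {cores_per_chip}")
    print(f"neurons per core:     {neurons_per_core:,.0f}")
    print(f"synapses per neuron:  {synapses_per_neuron:,.0f}")
    print(f"implied power at 30 petaops: {implied_watts:,.0f} W (max {MAX_WATTS:,} W)")
```

The core count divides evenly across the chips, and the power implied by the deep-learning throughput and efficiency figures lands below the stated 2,600-watt ceiling, so the quoted numbers are at least mutually consistent.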

According to Intel, this kind of performance surpasses the most powerful GPUs built by rival chipmakers, paving the way for significant breakthroughs in areas such as real-time continuous learning for scientific and engineering problem-solving AI applications. Other potential use cases include running complex logistics systems, smart city infrastructure management and large language model training, Intel said.

To get the ball rolling, Intel and Sandia National Laboratories will use Hala Point to tackle optimization problems that can be solved by searching for, planning and following the shortest path in a map. “That’s where we have achieved the best results,” Davies said. “In that domain, we have the most compelling results, with speedups of as much as 50 times, and 100-times savings in energy.”
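For readers unfamiliar with this class of problem, the following is a minimal sketch of shortest-path search on a weighted graph using Dijkstra's algorithm, a conventional CPU technique. It is shown only to illustrate the kind of task described, not how Hala Point or Loihi 2 solves it; the function name `shortest_path` and the example graph are hypothetical.

```python
import heapq


def shortest_path(graph, start, goal):
    """Return (total_cost, path) from start to goal, or None if unreachable.

    `graph` maps each node to a dict of {neighbor: edge_weight}.
    """
    dist = {start: 0.0}       # best known cost to reach each node
    prev = {}                 # predecessor on the best known path
    heap = [(0.0, start)]     # frontier ordered by cost so far

    while heap:
        cost, node = heapq.heappop(heap)
        if node == goal:
            break
        if cost > dist.get(node, float("inf")):
            continue  # stale entry; a cheaper route was already found
        for neighbor, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_cost
                prev[neighbor] = node
                heapq.heappush(heap, (new_cost, neighbor))

    if goal not in dist:
        return None

    # Walk predecessors back from the goal to rebuild the route.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]


if __name__ == "__main__":
    # Hypothetical road map: node -> {neighbor: travel cost}.
    roads = {
        "A": {"B": 1, "C": 4},
        "B": {"C": 2, "D": 5},
        "C": {"D": 1},
        "D": {},
    }
    print(shortest_path(roads, "A", "D"))
```

A conventional implementation like this expands one frontier node at a time, which is the sort of baseline against which the quoted speedups and energy savings would be measured.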

Constellation Research Inc. analyst Holger Mueller said Intel’s neuromorphic computing architecture appears to be a genuine alternative to Nvidia’s GPUs, which currently dominate the AI processing industry but come at an excessive cost.

“It was inevitable that someone would come up with an alternative to challenge GPUs eventually as the opportunity with AI is just too big to miss,” Mueller said. “The competition is good for enterprises and helps to foster innovation, so it will be interesting to see how Nvidia responds. In the meantime, it’s nice to see the long-ailing Intel at the forefront of neuromorphic computing’s development. The Loihi 2 system looks extremely promising, but it’s not yet close to commercialization, so it will be a while before we can see if it will truly rival Nvidia.”

Davies said neuromorphic computing could deliver advances similar to those expected from quantum computers, once they become available. “The difference is that quantum is much further out,” Davies said, so neuromorphic computers could be good for drug discovery and similar problems down the road.

Sandia National Laboratories will initially use Hala Point for advanced brain-scale computing research, with a focus on solving scientific computing problems in areas such as computer architecture, computer science, device physics and informatics.

Intel stressed that Hala Point is still a research prototype designed to advance the capabilities of future commercial systems, and it anticipates that the system will achieve practical breakthroughs that can significantly reduce the burden of modern AI workloads.

With reporting by Robert Hof
