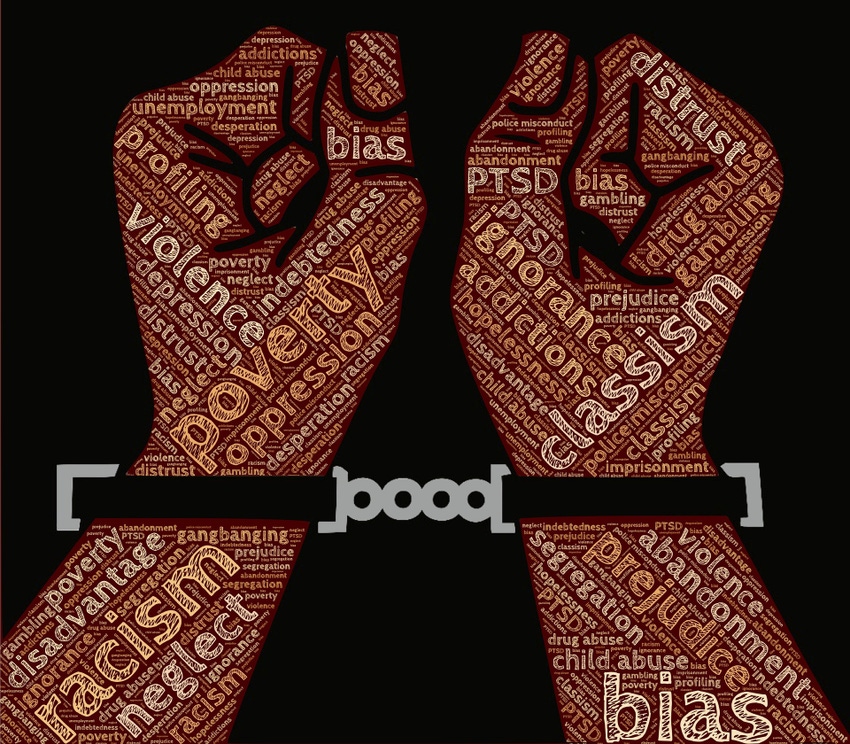

Artificial intelligence, meant to be completely unbiased and objective in its decision-making, could prove to hold the same prejudices as humans. What can be done about it?

September 29, 2017

Microsoft must have had only the best intentions when it launched its artificial intelligence (AI) program, Tay, onto Twitter in the spring of 2016. Tay was a chatbot meant to tweet and sound like an 18- to 24-year-old girl, using the slang, verbiage, and vernacular of that age group. The more it interacted with humans on Twitter, the more it would learn and the more human it would sound.

That was the idea, anyway. In less than 24 hours, online trolls, realizing how easily influenced Tay could be, started teaching it racist and offensive language. Just 16 hours into its first day on Twitter, Tay had gone from friendly greetings to spouting anti-feminist, anti-Semitic, and racist comments. Microsoft acted quickly and turned Tay off, but the damage was already done, and the message was clear: AI platforms are only as good as the data given to them. Fed nothing but hate speech, Tay was left to assume that was simply how humans spoke. “We stress-tested Tay under a variety of conditions, specifically to make interacting with Tay a positive experience ...” Peter Lee, corporate vice president of Microsoft Research NExT, wrote in a blog post apologizing for the incident. “... Unfortunately, in the first 24 hours of coming online, a coordinated attack by a subset of people exploited a vulnerability in Tay.”

Offensive as it was, a Twitter bot turned racist is a comparatively harmless example of AI gone wrong. But it does raise some important considerations for AI engineers and programmers as we come to rely more on AI and machine learning algorithms to make increasingly important decisions for us. Today, algorithms recommend movies, songs, and restaurants to us. Someday, they'll be guiding us in everything from medical diagnoses to major financial decisions.

So can AI ever be free of human biases?

“People are very concerned now about AI going wild and making decisions for us and taking control of us ... killer robots and all these kinds of issues,” Maria Gini, a professor in the Department of Computer Science and Engineering at the University of Minnesota, told Design News. Gini, who has spent the last 30 years researching artificial intelligence and robotics, was a keynote speaker at the 2017 Embedded Systems Conference (ESC) in Minneapolis. She said that while things like killer robots and AI weapons systems could be curbed through measures such as the recent call for a UN ban on killer robots, concerns over decision-making AI will require more work on all levels.

“How do we know how these decisions that programs are making are made?” Gini asked. “When you apply for a loan and you are turned down, you have a person who can explain why. But if you apply online through a program, there's no ability to ask questions. What's even trickier is, how do I know the decision was made in a fair and impartial way?”

A group of researchers from the University of Bath in the UK set out to determine just how biased an algorithm can be. The results of their study, published in April of this year in the journal Science, showed that machines can very easily acquire the same conscious and unconscious biases that humans hold. All it takes is some biased data.

To conduct their study, the researchers turned to the Implicit Association Test (IAT). First created in 1998, the IAT essentially measures attitudes by determining how strongly a person associates certain words and images with each other. It can be used as a sort of marketing tool to measure something as simple as whether people think more positively of pizza or ice cream. But in recent years it has gained popularity as a means of revealing unconscious racial bias. Harvard University, for example, has been running an online IAT, Project Implicit, that examines biases involving everything from skin tone to weight, religion, and race. The test asks you to pair word concepts shown on a screen. Response times are shorter when you're asked to pair two concepts you find similar (e.g., “pizza” and “good”) than when you're asked to pair things you find dissimilar (e.g., “ice cream” and “bad”). It's designed to reveal unconscious biases that we may not even know we have, but that may shape our actions and decisions nonetheless.
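To make the response-time idea concrete, here is a minimal Python sketch of a simplified IAT-style score. It assumes you already have reaction times in milliseconds for a “compatible” block (pairings the test-taker finds similar) and an “incompatible” block, and it divides the difference in mean latency by the standard deviation of all trials pooled together. The function name and sample timings are hypothetical; published IAT scoring adds further steps, such as discarding extremely slow trials and penalizing errors.

```python
from statistics import mean, stdev


def iat_d_score(compatible_ms, incompatible_ms):
    """Simplified IAT-style D score (hypothetical helper, not Project Implicit's code).

    Positive values mean the test-taker was slower on the "incompatible"
    pairings, i.e. found those concepts harder to link together.
    """
    # Sample standard deviation over every trial from both blocks, pooled together.
    pooled_sd = stdev(list(compatible_ms) + list(incompatible_ms))
    return (mean(incompatible_ms) - mean(compatible_ms)) / pooled_sd


# Hypothetical reaction times in milliseconds.
compatible = [600, 650, 620, 630]      # e.g. pairing "pizza" with "good"
incompatible = [750, 800, 770, 780]    # e.g. pairing "pizza" with "bad"

print(round(iat_d_score(compatible, incompatible), 2))
```

Dividing by the pooled standard deviation, rather than reporting a raw millisecond gap, keeps a naturally slow test-taker from looking more biased than a fast one.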

For their study, the University of Bath researchers created a machine learning algorithm that could associate words by how often they appear together in a block of text. For example, if the algorithm always sees the word “flowers” mentioned in text along with the word “beautiful,” it learns to associate flowers and beautiful.
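The counting idea described above can be illustrated with a toy Python sketch. This is not the researchers' model: it simply treats each sentence as one window and counts how often two words appear in it together, using a small made-up corpus. Real systems learn from vastly more text and usually turn such statistics into word vectors, but the principle that co-occurrence drives association is the same.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_counts(sentences):
    """Count how many sentences contain each unordered pair of distinct words."""
    counts = Counter()
    for sentence in sentences:
        # A set ignores repeats within a sentence; sorting gives a canonical pair order.
        words = sorted(set(sentence.lower().split()))
        for pair in combinations(words, 2):
            counts[pair] += 1
    return counts


def association(counts, word_a, word_b):
    """Look up the co-occurrence count for a pair, regardless of argument order."""
    return counts[tuple(sorted((word_a, word_b)))]


# A tiny, made-up corpus standing in for "a block of text".
corpus = [
    "the flowers were beautiful",
    "beautiful flowers filled the garden",
    "the flowers wilted",
    "the storm was loud",
]

counts = cooccurrence_counts(corpus)
print(association(counts, "flowers", "beautiful"))  # 2
print(association(counts, "flowers", "storm"))      # 0
```

The same mechanism that links “flowers” with “beautiful” will just as readily link a group of people with a stereotype if the text pairs them often enough.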

Next, the researchers turned their algorithm loose on the Internet, feeding it about 840 billion words. Once it was done, the team looked at a set of target words (programmer, engineer, scientist, nurse, teacher, and librarian) alongside two sets of attribute words, such as “man, male” and “woman, female.” They found that the algorithm exhibited gender and racial biases in its associations. It associated male names more strongly than female names with career attributes such as “professional” and “salary.” Female names were more closely associated with terms like “wedding” and “parents.” The algorithm also carried more negative associations for African American-sounding names than for European-sounding ones.

"The biases that we studied in the paper are easy to overlook when designers are creating systems," Arvind Narayanan, an assistant professor of computer science at Princeton University and one of the paper's co-authors, told the University of Bath. "The biases and stereotypes in our society reflected in our language are complex and longstanding. Rather than trying to sanitize or eliminate them, we should treat biases as part of the language and establish an explicit way in machine learning of determining what we consider acceptable and unacceptable."
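The word-association comparison the researchers ran is usually scored in the spirit of the Word Embedding Association Test (WEAT) their paper introduced: each word is represented as a vector, and a word's association score is its average cosine similarity to one set of attribute words minus its average similarity to the other set. The Python sketch below assumes tiny, hand-made two-dimensional vectors and hypothetical names; real embeddings have hundreds of dimensions learned from text, and the paper also reports a normalized effect size and a significance test that are omitted here.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def word_association(word, attrs_a, attrs_b, vec):
    """Mean similarity of `word` to attribute set A minus its mean similarity to set B."""
    sim_a = sum(cosine(vec[word], vec[a]) for a in attrs_a) / len(attrs_a)
    sim_b = sum(cosine(vec[word], vec[b]) for b in attrs_b) / len(attrs_b)
    return sim_a - sim_b


def differential_association(targets_x, targets_y, attrs_a, attrs_b, vec):
    """Total association of targets X with A (vs. B) minus that of targets Y."""
    return (sum(word_association(x, attrs_a, attrs_b, vec) for x in targets_x)
            - sum(word_association(y, attrs_a, attrs_b, vec) for y in targets_y))


# Hypothetical 2-D "embeddings"; the first axis loosely means career, the second family.
vec = {
    "john": (0.9, 0.1), "amy": (0.1, 0.9),
    "salary": (1.0, 0.0), "professional": (0.8, 0.2),
    "wedding": (0.0, 1.0), "parents": (0.2, 0.8),
}
career = ["salary", "professional"]
family = ["wedding", "parents"]

print(word_association("john", career, family, vec))   # positive: leans toward career
print(word_association("amy", career, family, vec))    # negative: leans toward family
print(differential_association(["john"], ["amy"], career, family, vec))
```

A differential score near zero would mean the two groups of names sit equally close to both attribute sets; the further it drifts from zero, the stronger the learned stereotype.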

“A system needs data to learn how to make decisions,” Gini said. “Take loans again for example. If you go through all the data from loan applications and approvals and you learn from that, then the data used is biased. In the simple case of a loan we know for example that African American people tend to be rejected more often. The computer doesn't know about reasons people might be singled out so many decisions being made are biased. The bias is in data and if you use that data the prediction will also be biased.”
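Gini's point can be shown with a deliberately naive Python sketch. It assumes a hypothetical history of past loan decisions in which two otherwise identical groups of applicants, labeled “A” and “B,” were approved at different rates, and a “model” that simply learns each group's historical approval rate. Trained on that history, the model reproduces the disparity exactly: nothing in the code is malicious, and the bias comes entirely from the data. Real credit models are far more complex and often exclude protected attributes, but correlated features can still carry the same pattern.

```python
from collections import defaultdict


def learn_approval_rates(history):
    """'Train' a naive model: the fraction of past applications approved for each group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in history:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def predict(model, group, threshold=0.5):
    """Approve if the group's historical approval rate meets the threshold."""
    return model[group] >= threshold


# Hypothetical past decisions for applicants who are otherwise identical.
history = ([("A", True)] * 8 + [("A", False)] * 2
           + [("B", True)] * 4 + [("B", False)] * 6)

model = learn_approval_rates(history)
print(model)                  # {'A': 0.8, 'B': 0.4}
print(predict(model, "A"))    # True
print(predict(model, "B"))    # False
```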

For a real-world example of what Gini is talking about, you only need to go back a few weeks to the most recent lawsuit against Wells Fargo, in which the city of Philadelphia alleges that the bank steered African American and Latino borrowers toward costlier and riskier mortgages than those offered to Caucasian borrowers.

The University of Bath study is not the only one of its kind. A 2016 study published as part of the Neural Information Processing Systems (NIPS) conference found the same sort of biases present in word embeddings, the numerical representations of words that neural networks use to process natural human language. “We show that even word embeddings trained on Google News articles exhibit female/male gender stereotypes to a disturbing extent,” the NIPS study says.

Are we doomed, then, to a future where AI makes biased decisions, only more rapidly and efficiently than humans? “The tricky part becomes how to remove as much of the bias in the data so that the data doesn't drive your decisions and repeat the mistakes of the past,” Gini said.

The authors of the NIPS study demonstrated that bias in these algorithms can be significantly reduced, as long as the data going into them is properly managed. After all, some word associations are good. It's just a matter of steering the AI away from stereotypical or potentially offensive associations like “female, receptionist” and toward useful ones such as “female, queen.” By creating benchmarks based on sets of words and their associations, rather than just pairs, the NIPS researchers were able to teach an algorithm a more nuanced understanding of word associations and reduce the bias in its reasoning. “Using crowd-worker evaluation as well as standard benchmarks, we empirically demonstrate that our algorithms significantly reduce gender bias in embeddings while preserving [its] useful properties such as the ability to cluster related concepts and to solve analogy tasks,” the study says. “The resulting embeddings can be used in applications without amplifying gender bias.”
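The NIPS paper's “hard debiasing” method includes a step often called neutralizing: estimate a gender direction in the embedding space, then subtract each gender-neutral word's component along that direction so the word no longer leans toward either gender. The Python sketch below shows only that projection step, assuming made-up three-dimensional vectors and a direction taken from a single hypothetical “he”/“she” pair. The paper itself estimates the direction from several definitional word pairs and adds an equalizing step, both omitted here.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def neutralize(word_vec, gender_direction):
    """Remove the component of word_vec that lies along the gender direction."""
    g = normalize(gender_direction)
    projection = dot(word_vec, g)
    return [w - projection * gi for w, gi in zip(word_vec, g)]


# Hypothetical vectors: the first axis happens to encode gender.
he = [1.0, 0.5, 0.0]
she = [-1.0, 0.5, 0.0]
gender_direction = [s - h for s, h in zip(she, he)]  # [-2.0, 0.0, 0.0]

receptionist = [-0.6, 0.3, 0.7]  # leans toward "she" along the gender axis
debiased = neutralize(receptionist, gender_direction)
print(debiased)                                    # [0.0, 0.3, 0.7]
print(dot(debiased, normalize(gender_direction)))  # 0.0
```

Only words that should be gender-neutral, such as job titles, would be neutralized; definitional words like “queen” keep their gender component, which is how useful associations survive.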

About a year ago, a consortium called the Partnership on Artificial Intelligence to Benefit People and Society was formed to develop best practices for creating AI in a way that contributes to the greater good. Among its goals, the Partnership lists: “Address such areas as fairness and inclusivity, explanation and transparency, security and privacy, values and ethics, collaboration between people and AI systems, interoperability of systems, and of the trustworthiness, reliability, containment, safety, and robustness of the technology.” Amazon, Google, Microsoft, Facebook, and Apple all count themselves among the founding members of the consortium.

“It's great there's a big consortium of companies looking for guidelines on how to design ethical decision-making AI,” Gini said. “But ethics is also culturally driven. Some things are ethical in one culture but not another, so you can't just say, 'This is bad, never do it.' Lots of things can be good or bad depending on where you are and the situation. It's going to be complicated, but at least there's an attempt to try to bring those issues to light and to try to come up with guidelines so that designers can understand those issues.”

In the end, Gini said, what makes this issue so important is how many decisions we already let computers make for us, decisions in which we have no idea how the computer is reaching its conclusions. “The more and more decisions [computers] make, the more we lose control,” Gini said. “If we don't know how those decisions are made there's no way we can intervene. Then the computers really will take control of our lives.”

It would be hard to overstate the weight of the issue or the amount of effort it will take to resolve it. After all, to remove biases from our machines, we first have to recognize them in ourselves.

Chris Wiltz is a senior editor at Design News covering emerging technologies, including VR/AR, AI, and robotics.

[main image source: Johnhain / Pixabay]
