Industrial robots have revolutionized manufacturing, turning out more goods, more precisely and more quickly, than any human worker could hope to. But until now, programming a robot to do anything other than repeat a specific task has been a major challenge. Madeline Gannon, a researcher and educator at Carnegie Mellon University, is leading an effort with her design collective MADLAB.CC to develop robotic systems that can analyze a person's movements and respond accordingly.

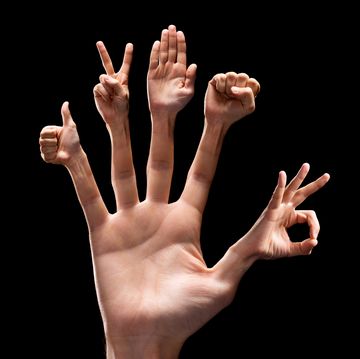

The project, called Quipt, "is a gesture-based control software that facilitates new, more intuitive ways to communicate with industrial robots." Developed during a residency at the Pier 9 workshop, Quipt translates data captured by motion-tracking technology into movement commands for a robot. Wearable markers on the user's hands and neck tell the robot where she is and what movements she is making.

For now, the technology is at the proof-of-concept stage. Gannon has successfully implemented her system on an ABB IRB 6700, a 2,500-pound robotic arm, and programmed it to follow her movements. Similar technology could be adapted to assist workers on a construction site, telling a mobile robot exactly where to weld or drill and when to stop. Hollywood might use Quipt to position and arrange camera equipment precisely with simple gestures, and the military could use this type of technology to direct ground-based robots with hand signals.

"Automation tasks where the human is entirely removed from the equation is reaching a limit of diminishing returns," writes Gannon. "The next step is [to] create ways for these machines to augment our abilities, not replace them."

Check out MADLAB's video detailing the project to learn more.

Source: MADLAB.CC h/t Discover

Jay Bennett is the associate editor of PopularMechanics.com. He has also written for Smithsonian, Popular Science and Outside Magazine.