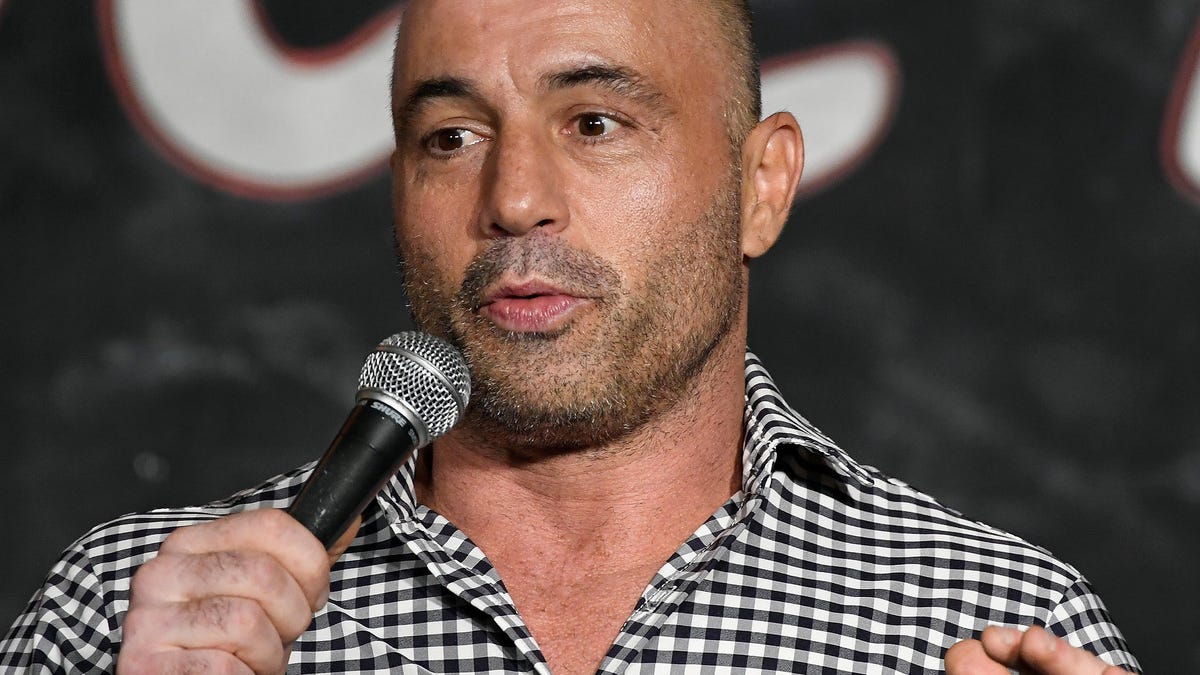

Joe Rogan calls AI that replicated his voice 'terrifyingly accurate'

It's funny, but the real-world implications are kind of scary.

Joe Rogan got an up close and personal look at AI.

We've read about celebrities doing weird things, but we can usually separate fact from fiction. But what if you hear it straight from the source? Or you think you did?

Engineers at AI enterprise Dessa published a video demonstrating what the company says is the "most realistic AI simulation of a voice we've heard to date," according to a Medium blog post on Wednesday. Dessa simulated podcast host Joe Rogan's voice and made it say some pretty weird stuff.

Rogan, who's no stranger to the world of tech, posted about Dessa's recording on Instagram.

"I just listened to an AI generated audio recording of me talking about chimp hockey teams and it's terrifyingly accurate," Rogan wrote on Friday. "At this point, I've long ago left enough content out there that they could basically have me saying anything they want, so my position is to shrug my shoulders and shake my head in awe, and just accept it. The future is gonna be really f---ing weird, kids."

Dessa said its AI could replicate anyone's voice with enough data. The company also pointed out that this technology could be concerning in the wrong hands, such as spam callers or people impersonating someone to gain security clearance or access. Dessa also said the tech could be used to create an "audio deepfake."

Deepfakes are video forgeries that make someone appear to have said or done something they didn't. Some are harmless fun, like memes that plaster actor Nicolas Cage's face into movies and shows he's never starred in. Others can be harmful, like the face of an unwitting victim grafted onto graphic pornography. The process seeks to blend identities so seamlessly that you don't question whether it might be fake, and the possibilities for manipulation across video and audio are endless.

Neither Dessa nor Rogan immediately responded to requests for comment.