You can plausibly say today’s virtual reality is a descendant of smartphones. The affordable sensors, chips, and high-resolution displays critical to rendering a decent VR experience were engineered for iPhones and Galaxys, not Rifts and Vives. Early on, VR pioneer Oculus built prototypes with 1080p AMOLED displays from Samsung Galaxy S4 smartphones.

But after Facebook’s $2 billion acquisition, the team had the wherewithal to begin dreaming up and ordering custom components. And of course, displays were first on their list.

Using an early Oculus Rift developer kit was like looking through a coarsely patterned screen door. But the ideal experience is one in which the eye discerns nary a pixel on the screen, a heavenly state referred to as retina resolution.

The best VR displays are somewhere between super-screen-door and retina resolution. High-end, tethered headsets offer higher resolution than early versions of the Rift. Yet, the image still isn’t so crisp that the eye detects no pixelation at all. Not bad, not ideal.

Retina resolution depends on a number of factors, one of which is how close the display is to your eyes. The closer it is, the more pixels you need. That means for VR to hit retina resolution, we’ll need displays with way more, way smaller pixels.
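To put rough numbers on that relationship, here is a minimal Python sketch. It assumes a common rule of thumb that is not taken from this article: a display looks pixel-free once each pixel spans about one arcminute of your visual field, or roughly 60 pixels per degree. The function name and the threshold are illustrative, and the sketch ignores headset lenses, which change the effective geometry, so treat the results as a ballpark.

```python
import math

# Assumption: ~60 pixels per degree (one arcminute per pixel) is a common
# rule of thumb for "retina" sharpness. It is not a figure from the article.
PIXELS_PER_DEGREE = 60.0


def required_ppi(viewing_distance_in: float,
                 pixels_per_degree: float = PIXELS_PER_DEGREE) -> float:
    """Pixels per inch needed so one pixel subtends 1/pixels_per_degree degrees."""
    angle_rad = math.radians(1.0 / pixels_per_degree)
    # Width (in inches) that one pixel may occupy at this distance.
    pixel_pitch_in = viewing_distance_in * math.tan(angle_rad)
    return 1.0 / pixel_pitch_in


if __name__ == "__main__":
    for distance in (12.0, 6.0, 2.0):  # inches from the eye
        print(f"{distance:>4.0f} in -> {required_ppi(distance):,.0f} ppi")
```

Under these assumptions, a screen held about 12 inches away needs a bit under 300 pixels per inch, while one 2 inches from the eye needs around 1,700. The required density scales inversely with distance, which is why near-eye displays are so demanding.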

Luckily, science is on it, and with retina resolution laptops and phones yawn-worthy at this point, VR and AR are now key technologies driving cutting-edge research in high-res displays.

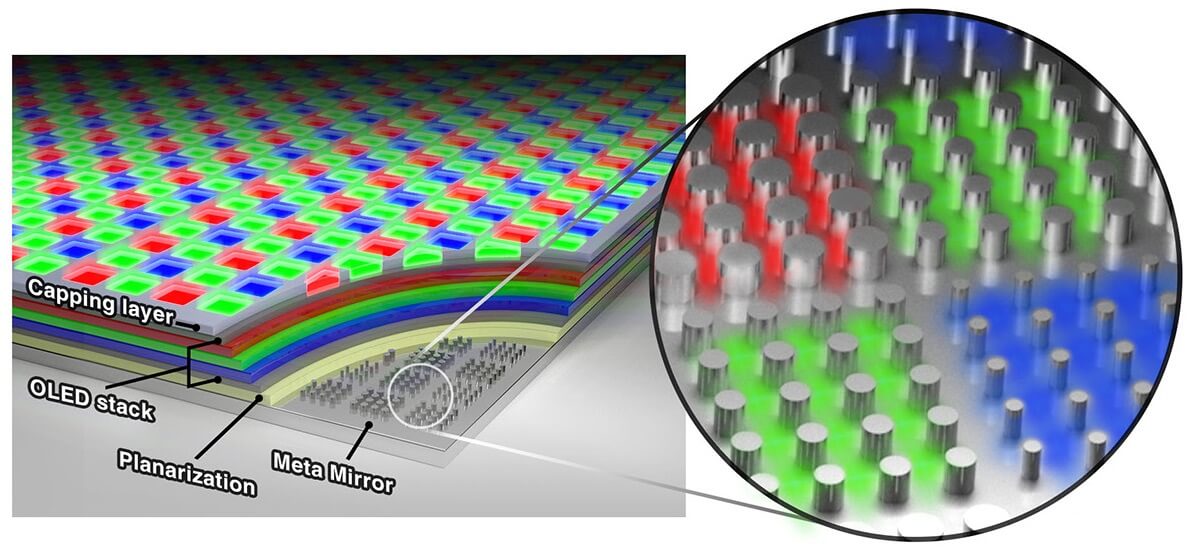

In a recent example, a team of scientists led by Samsung’s Won-Jae Joo and Stanford’s Mark Brongersma published a paper in Science describing a new meta-OLED display that can pack in 10,000 pixels per inch with room to scale. In comparison, today’s smartphone and VR displays are less than 1,000 pixels per inch.

The team says current displays, sufficient for TVs or smartphones, can’t meet the pixel density needs of near-eye VR and AR applications. They’re looking beyond headsets too, writing, “An ultrahigh density of 10,000 pixels per inch readily meets the requirements for the next-generation microdisplays that can be fabricated on glasses or contact lenses.”

Of course, they aren’t alone in their quest for ultra-high-def, and the display is still firmly in the research phase, but it hints at what the future holds for stunning AR/VR experiences.

Very Meta: From Solar Panels to Virtual Reality

The new display was born from a breakthrough in solar cells, where Brongersma’s lab used optical metasurfaces (surfaces with built-in nanoscale structures that control a material’s properties) to manipulate light. Joo, who was visiting Stanford at the time, learned of Brongersma’s approach in a presentation by graduate student Majid Esfandyarpour.

He realized the same approach could be useful in organic light-emitting diode (OLED) displays too.

Some of the top displays in the world—like the ones in high-end televisions and iPhones—use OLEDs because they’re very thin and flexible and known for their deep, pure colors.

Currently, there are two ways to make OLED displays. For small screens like smartphones, the pixels are split into subpixels that emit red, green, or blue light. These are laid down by spraying dots of each material through a fine mesh. But the method has limitations both in how small the subpixels (and therefore pixels) can be and how large the display can go. If the mesh is too big, it has a tendency to sag.

So, for larger displays like televisions, manufacturers opt for white OLEDs with red, blue, and green filters sitting on top of them. The thing is, the filters absorb 70% of the light, so the display needs more power to stay bright. Filtered OLEDs are also limited in how small you can make them.

A Nanoscale Skyline to Filter Light Into Pixels

The new Stanford and Samsung display solves both problems at the same time.

Instead of filters or color-specific OLED materials, the new display makes use of a surface bristling with tiny silver nanopillars 80 nanometers high.

When the surface is bathed in white light, the spacing between the pillars determines which wavelengths are transmitted. Each type of subpixel contains pillars of a different width. The widest pillars with the least spacing give off red light, the next widest, green, and the least wide, blue. Larger squares, each containing red, blue, and green subpixels, make up the display’s pixels. Each pixel is 2.4 microns wide, or roughly 1/10,000th of an inch.

As the pixels are no longer shaded by filters—and due to a curious property of the metasurface that allows light to build up and resonate, a bit like sound in a musical instrument—the display’s color is very pure and achieves greater brightness with less power.

The team estimates the approach could yield pixel density up to 20,000 pixels per inch. But as the pixels go below a micron, you begin to sacrifice brightness. The next step is to make a full-size display, something Samsung is currently working to make happen.
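The pixel sizes and densities quoted here can be cross-checked with a quick unit conversion. The Python sketch below assumes pixels are packed edge to edge, so that pixel width equals pixel pitch; the function names are illustrative.

```python
MICRONS_PER_INCH = 25_400  # exact: 1 inch = 25.4 mm


def ppi_from_pitch(pitch_microns: float) -> float:
    """Pixels per inch for pixels of the given width, packed edge to edge."""
    return MICRONS_PER_INCH / pitch_microns


def pitch_from_ppi(ppi: float) -> float:
    """Pixel width in microns implied by a given pixel density."""
    return MICRONS_PER_INCH / ppi


if __name__ == "__main__":
    print(f"2.4 micron pixels -> {ppi_from_pitch(2.4):,.0f} ppi")
    print(f"20,000 ppi        -> {pitch_from_ppi(20_000):.2f} micron pixels")
```

A 2.4-micron pixel works out to a little over 10,000 pixels per inch, matching the headline figure, and 20,000 pixels per inch implies pixels about 1.27 microns wide, close to the one-micron mark where brightness starts to suffer.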

Of course, other research is going after ultrahigh resolution displays too.

MicroLED tech, a leading candidate, has achieved 30,000 pixels per inch. However, displays over 1,000 pixels per inch are monochromatic, and full-color displays remain challenging.

Whichever approach wins, computing power will have to scale too. More pixels means more processing. One potential solution for AR/VR is called foveated rendering, where devices track our eyes and only serve up the highest resolution image where the gaze is directed. This takes advantage of the fact that our peripheral vision is blurry, regardless of medium, thus saving precious processing power.
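As a rough illustration of why foveated rendering saves work, the Python sketch below splits a field of view into tiles and renders each tile at a resolution that drops with its angular distance from the gaze point. Everything here is a simplifying assumption for illustration: the falloff thresholds, the 100-degree field of view, the tile size, and the flat angular grid are hypothetical and do not describe any particular headset.

```python
import math


def resolution_scale(eccentricity_deg: float) -> float:
    """Hypothetical falloff: full resolution near the gaze, coarser farther out."""
    if eccentricity_deg <= 10.0:
        return 1.0
    if eccentricity_deg <= 30.0:
        return 0.5
    return 0.25


def shaded_fraction(fov_deg: float = 100.0,
                    tile_deg: float = 5.0,
                    gaze: tuple[float, float] = (0.0, 0.0)) -> float:
    """Fraction of full-resolution pixel work that remains after foveation."""
    half = fov_deg / 2
    tiles_per_side = int(fov_deg / tile_deg)
    total = 0.0
    for i in range(tiles_per_side):
        for j in range(tiles_per_side):
            # Angular position of the tile center relative to straight ahead.
            cx = -half + (i + 0.5) * tile_deg
            cy = -half + (j + 0.5) * tile_deg
            eccentricity = math.hypot(cx - gaze[0], cy - gaze[1])
            # The scale applies to both width and height, so work goes as scale^2.
            total += resolution_scale(eccentricity) ** 2
    return total / (tiles_per_side ** 2)


if __name__ == "__main__":
    print(f"Pixel work remaining: {shaded_fraction():.0%}")
```

Because the scale factor applies in both dimensions, halving the resolution of a tile cuts its pixel count to a quarter, which is where most of the savings come from.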

More Immersion = Better?

Of course, visuals are only part of the equation. Truly immersive, sci-fi-like VR will supply a sense of touch, muscular resistance, and maybe even direct manipulation of virtual worlds. And if we’re to avoid only enjoying VR in huge warehouses, we’ll need to figure out how to move in place.

Practical solutions to these problems have been slowly emerging. Early, weird, and/or expensive peripherals include at-home VR treadmills, robotic boots, and even robotic exoskeletons.

One might wonder (quite rationally) if we really need to be more immersed in our devices. Many people have been spending a whole lot more time on screens, and it can be pretty draining. It’s amazing what an afternoon spent sitting by a good old-fashioned stream, rendered by the unfiltered laws of physics, can do for your sanity.

Still, quality virtual and augmented reality interfaces may solve a few of the less palatable side effects of 2D displays because they’re better tailored to how our brains operate in the world. We very consciously have to teach ourselves to type and use a trackpad, but we learn to navigate the physical world almost by accident (just watch a baby move into toddlerhood).

Perhaps, as we spend more time in the digital world, making it immersive will also help make it a better experience (in some ways, at least). And regardless, the next round of interfaces is coming. So, wouldn’t it be nice if they looked especially pretty?

Image credit: Luca Huter / Unsplash