At CES 2018, Intel unveiled a prototype chip, Loihi, that mimics the architecture of the human brain for adaptable AI processing on the edge.

January 12, 2018

Intel isn't letting controversy over a series of processor bugs stop it from continuing to push innovation in processors. 2018 is already shaping up to be the year that competition in the artificial intelligence processor space really heats up. At the 2018 Consumer Electronics Show (CES), Intel made its first major chip announcement of the year by unveiling a new prototype neuromorphic chip, codenamed Loihi (pronounced low-ee-hee), which it says will enable devices to perform advanced deep learning processing on the edge with new levels of power efficiency.


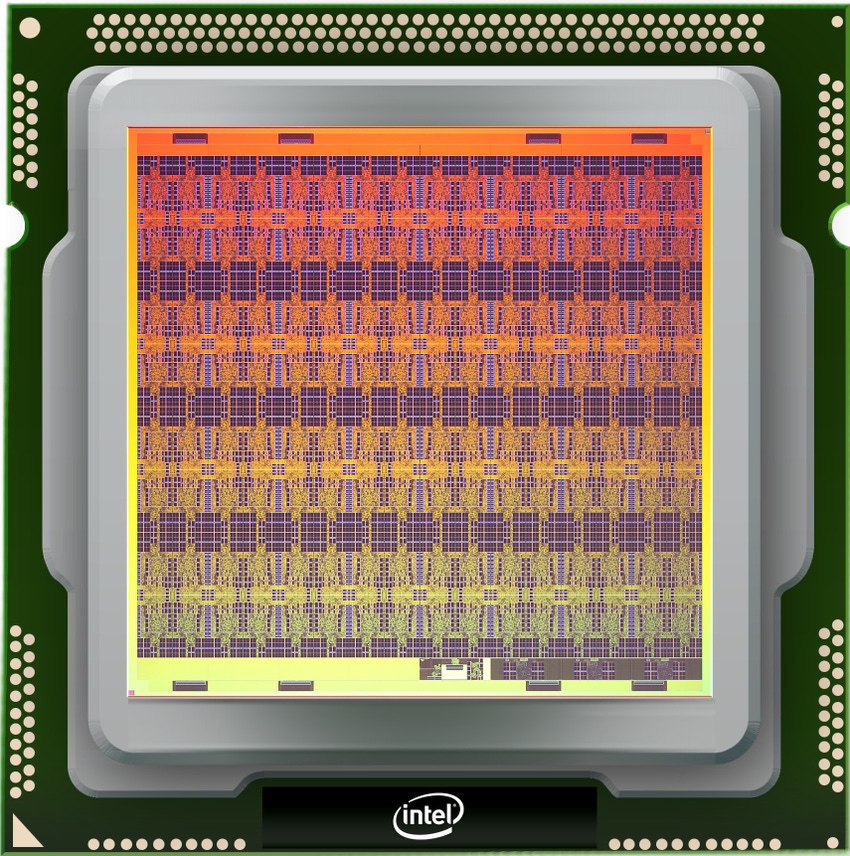

Intel's Loihi neuromorphic chip takes cues from the architecture of the brain to allow neural networks to learn and adapt on the fly. (Image source: Intel)

You may be wondering what a neuromorphic chip is. Put simply, a neuromorphic processor is designed to mimic the way neurons connect in the human brain – effectively simulating the brain on a piece of silicon. The idea is that by adapting the brain's architecture engineers can also harness the brain's natural ability to adapt and learn in real time.

There was a time when psychologists and neuroscientists thought the brain was a static organ. Once you reached a certain age (about your late teens or early twenties), the thinking went, your brain structure was more or less set in stone. You couldn't teach an old dog new tricks precisely because his brain was locked in place. Today we know better: the brain's structure actually changes throughout life as we learn and take on new tasks, a phenomenon called neuroplasticity.

Engineers of neuromorphic chips are bringing the concept of neuroplasticity into electronics. In the case of Loihi specifically, the chip is composed of a many-core mesh of “neurons” capable of supporting hierarchical and recurrent neural network topologies. According to specs released by Intel, Loihi simulates a total of 130,000 neurons and 130 million synapses, all capable of communicating with each other. This is a far cry from the sophistication of the human brain, with its 100 billion neurons, but comparable to the brains of some small insects. For example, the common fruit fly, an insect studied for AI research, has about 250,000 neurons and 10 million synapses.
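Loihi's "neurons" are spiking neurons: rather than passing continuous values to one another, they accumulate input over time and emit a discrete spike when a threshold is crossed. The sketch below is a textbook leaky integrate-and-fire neuron in Python, offered only to illustrate that idea. The class name `LIFNeuron`, the threshold, and the leak factor are illustrative assumptions, not Intel's actual neuron model, which has more parameters than shown here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Textbook leaky integrate-and-fire neuron (illustrative, not Loihi's exact model)."""
    threshold: float = 1.0  # membrane potential at which the neuron spikes
    leak: float = 0.9       # fraction of stored potential kept each time step
    potential: float = 0.0  # current membrane potential

    def step(self, input_current: float) -> bool:
        # Leak part of the stored potential, then integrate the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(neuron: LIFNeuron, inputs: List[float]) -> List[int]:
    """Return a spike train (1 = spike, 0 = silent) for a sequence of input currents."""
    return [int(neuron.step(x)) for x in inputs]


if __name__ == "__main__":
    print(run(LIFNeuron(), [0.3] * 10))
```

With a constant input of 0.3, the neuron charges for three steps and fires on the fourth, so the script prints `[0, 0, 0, 1, 0, 0, 0, 1, 0, 0]`. Information is carried by when spikes occur, which is part of why such chips can stay idle, and save power, when inputs are quiet.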

“This has been a major research effort by Intel and today we have a fully functioning neuromorphic research chip,” Intel CEO Brian Krzanich said during his keynote at CES 2018. “This incredible technology adds to the breadth of AI solutions that Intel is developing.” Krzanich added that Intel has already used Loihi successfully in image recognition tests in its own labs.

In a video from Intel shown as part of the keynote, Mike Davies, director of Intel's Neuromorphic Computing Lab, further explained: “Traditional computing rests on this basic idea that you have two computing elements – a CPU and a memory. Neuromorphic computing is throwing that out and starting from a completely different point on the architectural spectrum.”

Intel engineers discuss the development of the Loihi chip.

With Loihi, engineers will be able to build a network, feed it data, and let that data change the network, much in the same way that new information changes the brain. The chip can learn and infer on its own without the aid of any external update from the cloud or another source. And it does all of this while using fewer resources than a general-purpose compute chip. According to Intel, Loihi is up to 1,000 times more energy-efficient than the general-purpose computing typically used for training neural networks. Ultimately, this could mean devices that adapt and modify their own performance in real time.
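The article doesn't say which learning rules Loihi runs on-chip. A widely studied rule for this kind of local, unsupervised learning in spiking networks is spike-timing-dependent plasticity (STDP): a synapse strengthens when the sending neuron fires just before the receiving one, and weakens when the order is reversed. The minimal pair-based sketch below is a generic textbook version, not Intel's implementation; the function name `stdp_update` and all of its constants are illustrative assumptions.

```python
import math


def stdp_update(weight: float, t_pre: float, t_post: float,
                a_plus: float = 0.05, a_minus: float = 0.055,
                tau: float = 20.0, w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Pair-based STDP weight update (generic sketch, constants are assumptions).

    t_pre and t_post are spike times in milliseconds for the presynaptic
    (sending) and postsynaptic (receiving) neurons.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Presynaptic spike came first and may have helped cause the
        # postsynaptic spike: potentiate, more strongly for short gaps.
        weight += a_plus * math.exp(-dt / tau)
    elif dt < 0:
        # Presynaptic spike arrived after the postsynaptic one: depress.
        weight -= a_minus * math.exp(dt / tau)
    # Keep the weight within its allowed range.
    return min(w_max, max(w_min, weight))


if __name__ == "__main__":
    w = 0.5
    w = stdp_update(w, t_pre=10.0, t_post=15.0)  # pre before post: weight rises
    print(round(w, 4))
```

Applied each time spikes arrive, updates like this let incoming data reshape a spiking network's connections locally, without a separate training pass on external hardware.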

Of course, these chips won't be able to pick up just any new task the way a human can. Like any neural network, they will still have to be trained initially. Neuromorphic chips are also generally slower than general-purpose CPUs. Their advantages lie in their specialization and in their ability to keep adapting after their initial training.

In a blog post written for Intel on the development of Loihi, Dr. Michael Mayberry, corporate vice president and managing director of Intel Labs, explained that typical neural networks don't generalize well unless they've been trained to account for a specific circumstance or element that may arise. You can train a factory collaborative robot to assemble a product, for example, but it won't know how to handle the wrong pieces being laid in front of it unless it has been trained to recognize them as such.

Mayberry explained that because neuromorphic chips are self-learning, they only need to be trained on what's “normal” and can figure out what's abnormal on their own. As he wrote:

"The potential benefits from self-learning chips are limitless. One example provides a person’s heartbeat reading under various conditions – after jogging, following a meal or before going to bed – to a neuromorphic-based system that parses the data to determine a “normal” heartbeat. The system can then continuously monitor incoming heart data in order to flag patterns that do not match the “normal” pattern. The system could be personalized for any user."

Mayberry cited cybersecurity as another example of this. A neuromorphic processor could learn what normal, authorized traffic on a network looks like and then automatically learn to flag any unusual network traffic that could signal a breach or hack.
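Mayberry's heartbeat and network-traffic examples share one idea: learn a baseline from normal data only, then flag anything that departs from it. The sketch below shows that idea with plain running statistics (Welford's algorithm for mean and variance, plus a 3-sigma threshold), not a spiking network or anything Loihi-specific. The class name `NormalBaseline`, the threshold, and the sample heart-rate readings are hypothetical.

```python
import math
from typing import Iterable


class NormalBaseline:
    """Learn what 'normal' looks like from readings, then flag outliers.

    Uses ordinary running statistics (Welford's algorithm), not a
    neuromorphic model; the 3-sigma default is an illustrative assumption.
    """

    def __init__(self, threshold_sigmas: float = 3.0) -> None:
        self.threshold = threshold_sigmas
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def learn(self, readings: Iterable[float]) -> None:
        # Update mean and variance one reading at a time (Welford's method).
        for x in readings:
            self.n += 1
            delta = x - self.mean
            self.mean += delta / self.n
            self._m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; zero until at least two readings exist.
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_abnormal(self, x: float) -> bool:
        # Flag readings more than `threshold` standard deviations from the mean.
        if self.std == 0.0:
            return x != self.mean
        return abs(x - self.mean) > self.threshold * self.std


if __name__ == "__main__":
    # Hypothetical resting heart-rate readings (beats per minute).
    baseline = NormalBaseline()
    baseline.learn([62, 65, 70, 68, 64, 66, 71, 63])
    for bpm in (67, 120):
        print(bpm, "abnormal" if baseline.is_abnormal(bpm) else "normal")
```

With these sample readings the baseline is about 66 bpm with a standard deviation of roughly 3.3, so the script prints `67 normal` and `120 abnormal`. The same pattern applies to network traffic by swapping heart rate for a traffic feature such as bytes per second.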

A November 2017 report by Mordor Intelligence predicted the global market for neuromorphic chips will reach $2.94 billion by 2020. Research and Markets also forecast similar numbers in its own report released in July of 2017, predicting the global neuromorphic chip market to grow with a CAGR of about 25% from 2016-2023. Both research firms identify Intel along with IBM, Qualcomm, Samsung, HP, and Lockheed Martin among the major players in the neuromorphic engineering space and believe the market will be driven primarily by image recognition, signal recognition, data processing, and speech applications across industries.
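For a sense of scale, and assuming the roughly 25% rate compounds over each of the seven years from 2016 to 2023, the Research and Markets forecast implies a market nearly five times its 2016 size by 2023, where $M_y$ (our notation) denotes market size in year $y$:

$$
\frac{M_{2023}}{M_{2016}} = (1 + 0.25)^{2023 - 2016} = 1.25^{7} \approx 4.77
$$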

Loihi will be shared with universities and research institutions in the first half of 2018. There is no official word yet on when Intel may bring the chip to OEM partners. Intel hopes that neuromorphic chips will eventually show up anywhere devices need fast, power-efficient neural network computing on the edge, from industrial robots to autonomous cars to smart city applications.


Chris Wiltz is a Senior Editor at Design News, covering emerging technologies including AI, VR/AR, and robotics.
