AI: IBM showcases new energy-efficient chip to power deep learning

IBM's researchers have designed what they claim to be the world's first AI accelerator chip that is built on high-performance seven-nanometer technology, while also achieving high levels of energy efficiency.

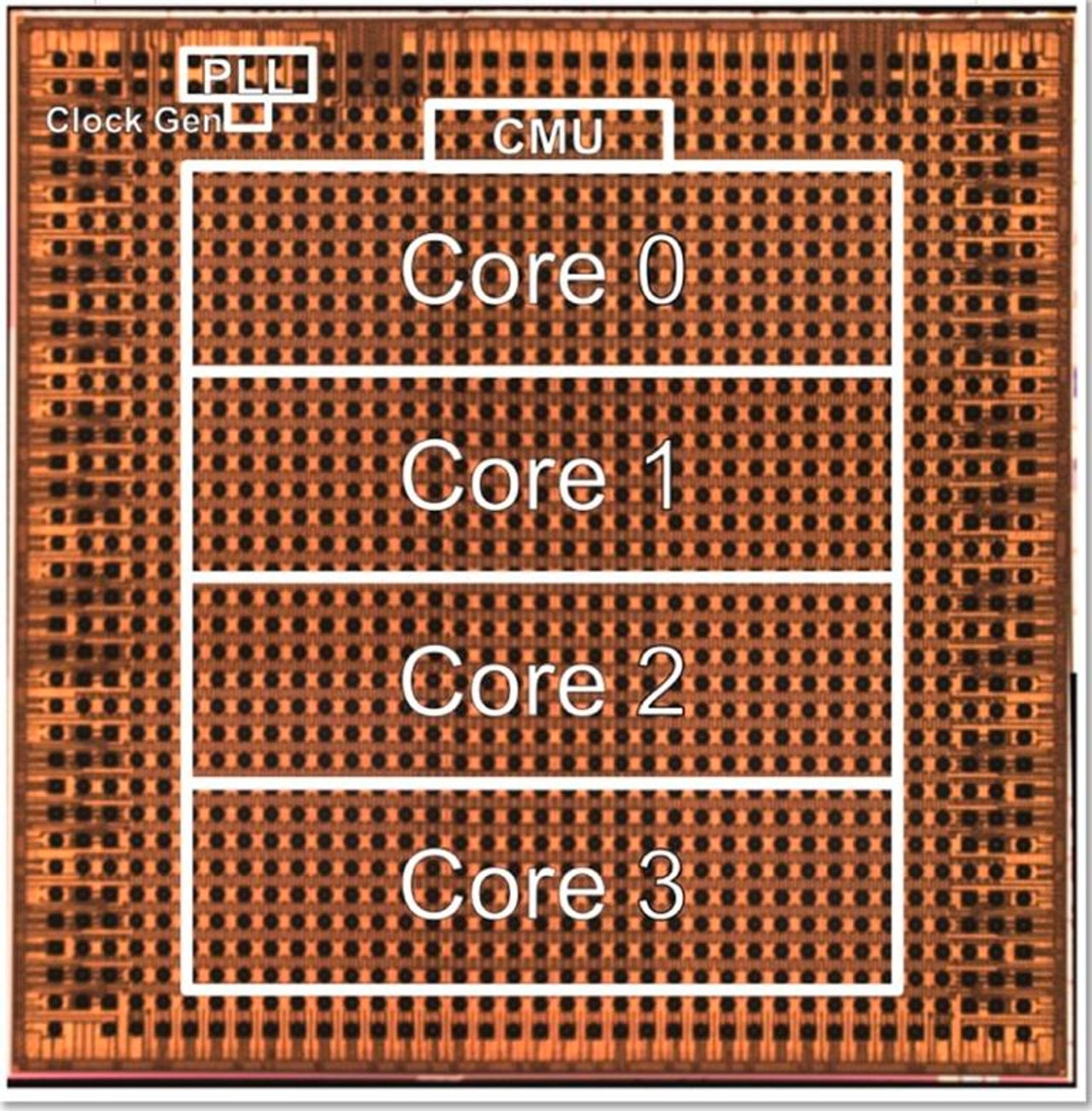

Ankur Agrawal and Kailash Gopalakrishnan, both staff members at IBM Research, unveiled the four-core chip at the International Solid-State Circuits Virtual Conference this month, and have disclosed more details about the technology in a recent blog post. Although still at the research stage, the accelerator chip is expected to be capable of supporting various AI models and of achieving "leading-edge" power efficiency.

"Such energy efficient AI hardware accelerators could significantly increase compute horsepower, including in hybrid cloud environments, without requiring huge amounts of energy," said Agrawal and Gopalakrishnan.


AI accelerators are a class of hardware designed, as the name suggests, to accelerate AI models. By boosting the performance of algorithms, the chips can improve results in data-heavy applications like natural language processing or computer vision.

As AI models increase in sophistication, however, so does the amount of power required to support the hardware that underpins algorithmic systems. "Historically, the field has simply accepted that if the computational need is big, so too will be the power needed to fuel it," wrote IBM's researchers.

IBM's research department has been working on new designs for chips that are capable of handling complex algorithms without growing their carbon footprint. The crux of the challenge is to come up with a technology that doesn't require exorbitant amounts of energy, without sacrificing compute power.

One way to do so is to employ reduced-precision techniques in accelerator chips. These have been shown to boost deep learning training and inference while requiring less silicon area and power, which means that the time and energy needed to train an AI model can be cut significantly.

The new chip presented by IBM's researchers is highly optimized for low-precision training. It is the first silicon chip to incorporate an ultra-low precision technique called the hybrid FP8 format – an eight-bit training technique developed by Big Blue, which preserves model accuracy across deep-learning applications such as image classification, or speech and object detection.
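To give a feel for what eight-bit floating point does to numbers, here is a minimal Python sketch that rounds a value to the nearest number representable with a given count of exponent and mantissa bits. The article does not spell out the bit layout; the sketch assumes the split commonly described for hybrid FP8 (1 sign, 4 exponent and 3 mantissa bits for the forward pass; 1 sign, 5 exponent and 2 mantissa bits for gradients). The function name `quantize`, the IEEE-style exponent bias and the saturating overflow are illustrative assumptions, not IBM's implementation.

```python
import math


def quantize(x: float, exp_bits: int, man_bits: int) -> float:
    """Round x to the nearest value of a small floating-point format.

    Assumptions (not from the article): IEEE-style bias, the top exponent
    code is reserved, subnormals are supported, and overflow saturates to
    the largest finite value instead of becoming infinity.
    """
    if x == 0.0 or math.isnan(x):
        return x

    bias = 2 ** (exp_bits - 1) - 1
    min_exp = 1 - bias                       # exponent of the smallest normal
    max_exp = (2 ** exp_bits - 2) - bias     # exponent of the largest normal
    max_val = (2.0 - 2.0 ** -man_bits) * 2.0 ** max_exp

    sign = math.copysign(1.0, x)
    a = abs(x)
    if math.isinf(a):
        return sign * max_val

    # frexp gives a = m * 2**ex with 0.5 <= m < 1, so floor(log2(a)) = ex - 1.
    _, ex = math.frexp(a)
    e = max(ex - 1, min_exp)                 # subnormals share the min exponent

    step = 2.0 ** (e - man_bits)             # gap between neighbouring values
    q = round(a / step) * step               # round half to even
    return sign * min(q, max_val)


if __name__ == "__main__":
    for value in (0.1, 1000.0, 1e-5):
        fwd = quantize(value, exp_bits=4, man_bits=3)   # assumed forward format
        bwd = quantize(value, exp_bits=5, man_bits=2)   # assumed gradient format
        print(f"{value!r}: forward-style {fwd!r}, gradient-style {bwd!r}")
```

Under these assumptions, the 4-exponent-bit format keeps 0.1 slightly more precise (0.1015625 versus 0.09375), while the 5-exponent-bit format covers a wider range: 1000 saturates at 240 in the former but survives as 1024 in the latter, and 1e-5 is flushed to zero in the former but not in the latter. That trade-off between precision and range is the usual motivation for using different formats for activations and gradients.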

What's more, equipped with an integrated power management feature, the accelerator chip can maximize its own performance, for example by slowing down during computation phases with high power consumption.


The chip also has high utilization, with experiments showing more than 80% utilization for training and 60% utilization for inference – far more, according to IBM's researchers, than typical GPU utilizations, which stand below 30%. This translates, once more, into better application performance, and is also a key part of engineering the chip for energy efficiency.
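As a rough illustration of why utilization matters, the short Python sketch below treats delivered throughput as peak throughput multiplied by utilization. The peak figure is a made-up placeholder and the helper name `effective_throughput` is hypothetical; only the utilization percentages come from the figures quoted above.

```python
def effective_throughput(peak: float, utilization: float) -> float:
    """Throughput actually delivered, given a peak rate and a utilization in [0, 1]."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak * utilization


if __name__ == "__main__":
    PEAK = 100.0  # hypothetical peak rate in arbitrary units, same for every row

    scenarios = [
        ("IBM chip, training (quoted >80%)", 0.80),
        ("IBM chip, inference (quoted 60%)", 0.60),
        ("typical GPU (quoted <30%)", 0.30),
    ]
    for label, utilization in scenarios:
        delivered = effective_throughput(PEAK, utilization)
        print(f"{label}: about {delivered:.0f} of {PEAK:.0f} units delivered")
```

For hardware with the same nominal peak, moving from 30% to 80% utilization would mean roughly 2.7 times as much useful work per unit of time. This is a simplification that ignores differences in peak rate and power draw between real chips.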


All these characteristics come together in a chip that Agrawal and Gopalakrishnan described as "state of the art" when it comes to energy efficiency, but also performance. Comparing the technology to other chips, the researchers concluded: "Our chip performance and power efficiency exceed that of other dedicated inference and training chips."

The researchers now hope that the designs can be scaled up and deployed commercially to support complex AI applications. This includes large-scale deep-training models in the cloud ranging from speech-to-text AI services to financial transaction fraud detection.

Applications at the edge, too, could find a use for IBM's new technology, with autonomous vehicles, security cameras and mobile phones all potentially benefitting from highly performant AI chips that consume less energy.

"To keep fueling the AI gold rush, we've been improving the very heart of AI hardware technology: digital AI cores that power deep learning, the key enabler of artificial intelligence," said the researchers. As AI systems multiply across all industries, the promise is unlikely to fall on deaf ears.