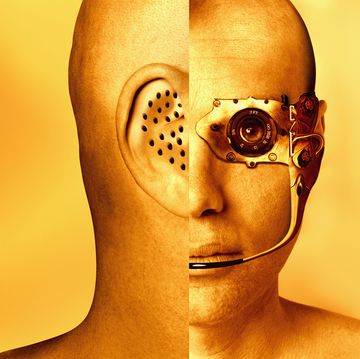

When able, humans use their senses in tandem. Hearing a voice, a person turns around to see if it's their friend. Touching an object can offer tactile information, but viewing it can confirm its true identity. Robots can't combine their senses as easily, which is why scientists at MIT's Computer Science and Artificial Intelligence Lab (CSAIL) have been working to close what they call a robotic "sensory gap."

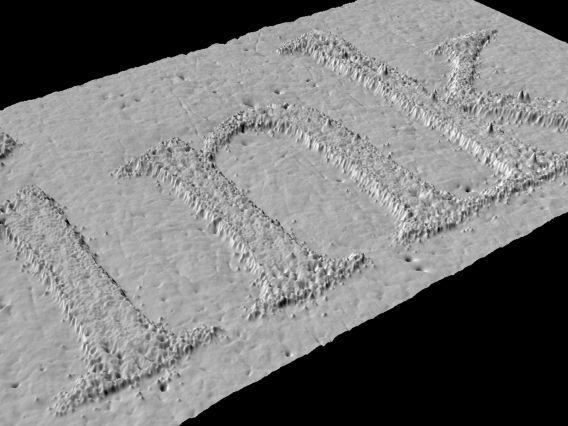

To connect sight and touch, the CSAIL engineers worked with a KUKA robot arm, a type often used in industrial warehouses. The scientists outfitted it with a special tactile sensor called GelSight—a slab of transparent, synthetic rubber that works as an imaging system. Objects are pressed into GelSight, and then cameras surrounding the slab monitor the impressions.

With a common webcam, the CSAIL team recorded almost 200 objects, including tools, household products, and fabrics, being touched by the robot arm more than 12,000 times. The team then broke those video clips down into 3 million static images, creating a dataset they termed “VisGel.”

“By looking at the scene, our model can imagine the feeling of touching a flat surface or a sharp edge,” says Yunzhu Li, CSAIL Ph.D. student and lead author on a new paper about the system, in a press statement.

“By blindly touching around,” Li says, “our model can predict the interaction with the environment purely from tactile feelings. Bringing these two senses together could empower the robot and reduce the data we might need for tasks involving manipulating and grasping objects.”

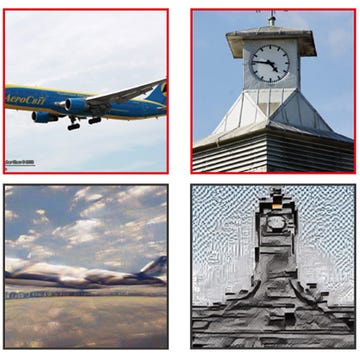

The CSAIL team then combined the VisGel dataset with what are known as generative adversarial networks, or GANs. GANs are deep neural net architectures composed of two nets, according to an explainer from AI company Skymind, with the potential to imitate images, music, speech, and prose. They're often associated with artistic uses of AI. In 2018, the auction house Christie's sold a painting generated by a GAN for $432,000.

GANs pit two neural nets against each other. One net is the "generator," while the other is the "discriminator." The generator creates images that it tries to make look real; the discriminator tries to tell the generated images apart from real ones. Every time the discriminator catches a fake, the generator is forced to adjust its own internal logic, ideally producing more convincing images on the next attempt.
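For readers who want to see that tug-of-war in code, here is a toy sketch in plain Python. It is not the CSAIL model: it assumes one-dimensional "data" (numbers clustered around 4.0) instead of images, a generator that is just a line (`a * z + b`), and a discriminator that is a single logistic unit. The class names, learning rate, and target distribution are all made up for illustration.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class Discriminator:
    """Hypothetical one-unit discriminator: estimates P(x is real)."""

    def __init__(self, w=0.0, c=0.0):
        self.w, self.c = w, c

    def prob_real(self, x):
        return sigmoid(self.w * x + self.c)

    def step(self, x_real, x_fake, lr):
        # One gradient-descent step on -log D(x_real) - log(1 - D(x_fake)).
        p_real = self.prob_real(x_real)
        p_fake = self.prob_real(x_fake)
        grad_w = -(1.0 - p_real) * x_real + p_fake * x_fake
        grad_c = -(1.0 - p_real) + p_fake
        self.w -= lr * grad_w
        self.c -= lr * grad_c


class Generator:
    """Hypothetical linear generator: turns noise z into a fake sample."""

    def __init__(self, a=1.0, b=0.0):
        self.a, self.b = a, b

    def sample(self, z):
        return self.a * z + self.b

    def step(self, z, disc, lr):
        # One gradient-descent step on -log D(G(z)): nudge the fake sample
        # in whichever direction the discriminator finds more "real".
        p = disc.prob_real(self.sample(z))
        grad_x = -(1.0 - p) * disc.w
        self.a -= lr * grad_x * z
        self.b -= lr * grad_x


def train(steps=2000, lr=0.05, seed=0):
    """Alternate discriminator and generator updates, one sample at a time."""
    rng = random.Random(seed)
    gen, disc = Generator(), Discriminator()
    for _ in range(steps):
        x_real = rng.gauss(4.0, 0.5)  # assumed "real" data distribution
        z = rng.gauss(0.0, 1.0)       # random noise fed to the generator
        disc.step(x_real, gen.sample(z), lr)
        gen.step(z, disc, lr)
    return gen, disc
```

Calling `train()` returns the two trained players. Real GANs swap these one-line models for deep networks and update on batches of images, and they are known to be finicky to train, so this sketch shows only the alternating back-and-forth, not a recipe that is guaranteed to converge.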

With the VisGel dataset and GANs combined, the CSAIL engineers tried to make their system hallucinate, or create a visual image based solely on tactile data. When fed tactile data of a shoe, the AI would try to recreate what that shoe looked like.

“This is the first method that can convincingly translate between visual and touch signals,” says Andrew Owens, a postdoc at the University of California at Berkeley. “Methods like this have the potential to be very useful for robotics, where you need to answer questions like ‘is this object hard or soft?’, or ‘if I lift this mug by its handle, how good will my grip be?’ This is a very challenging problem, since the signals are so different, and this model has demonstrated great capability.”

CSAIL projects often focus on bridging digital divides. One project last year allowed humans to correct an AI's mistakes using only their thoughts.

Source: TechCrunch

David Grossman is a staff writer for PopularMechanics.com. He's previously written for The Verge, Rolling Stone, The New Republic and several other publications. He's based out of Brooklyn.