- Cognitive scientists suggest in a new paper that machines could model human vulnerability in order to make better decisions.

- Humans in survival mode must make complex decisions with dire consequences, and scientists suggest modeling this mode could add depth and “emotion” to machines.

- A machine that understands risk and threat could, in turn, make better decisions in applications such as care, industry, and more.

How do you get machines to perform better? Tell them they could croak at any minute. In a new paper from the University of Southern California, scientists say that “in a dynamic and unpredictable world, an intelligent agent should hold its own meta-goal of self-preservation.”

Lead researcher Antonio Damasio is a luminary in the field of intelligence and the brain. His profile at the Edge Foundation says Damasio “has made seminal contributions to the understanding of brain processes underlying emotions, feelings, decision-making and consciousness.” At USC, he’s co-director of the Brain and Creativity Institute (BCI) with his equally luminous wife, Hanna Damasio.

Damasio’s paper, coauthored with BCI researcher Kingson Man, presents a model grounded in philosophy and the science of mind, paired with accumulating research in robotics. They published the paper in Nature Machine Intelligence, a title usually read as “Nature’s [Journal of] Machine Intelligence” but one that, in this case, seems strangely prescient.

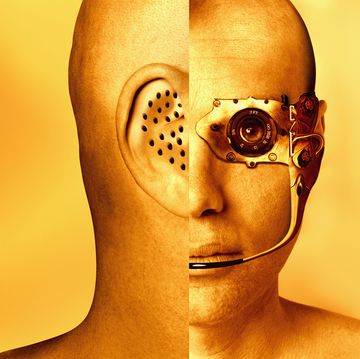

Damasio and Man suggest the way to make resilient robots isn’t to make them impenetrably strong, but rather, to make them vulnerable in order to introduce ideas like restraint and self-preserving strategy. “If an AI can use inputs like touch and pressure, then it can also identify danger and risk-to-self,” ScienceAlert summarized.

This idea invokes the design concept of a survival game, where a finite pool of resources is divided among a set number of players, who must either find an equilibrium or eliminate their competitors. The gorgeous 2018 card game Shipwreck Arcana is a great example of a cooperative survival game: To win, at least one person must survive being shipwrecked. You can share resources to preserve more people, or you can sacrifice resources from some players to increase the likelihood that one person will survive.

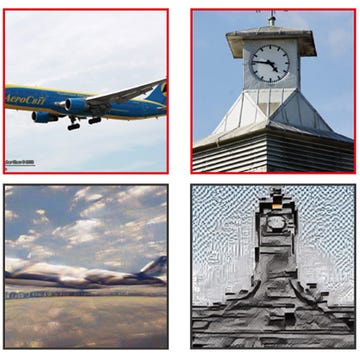

A robot with a sense of its own “health” isn’t the most novel thing—when a car tells you the oil is low or the engine is overheating, that’s a direct self-preservation behavior. There’s just no in-between layer of circuitry to model thinking or prioritizing. Instead, the car has sensors only, and those sensors flag errors in order for the vehicle’s operator to address them. Imagine a car that considered your planned commute and the health of its engine and pulled itself over every 10 minutes to cool off.

“Under certain conditions, machines capable of implementing a process resembling homeostasis might also acquire a source of motivation and a new means to evaluate behaviour, akin to that of feelings in living organisms,” Damasio and Man say in their abstract. The car example fits this rubric. Instead of a sensor alerting an outsider every time, the hypothetical car has a “brain” to run analyses of the different factors that can go wrong and how likely each scenario is.
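To make the hypothetical car a little more concrete, here is a minimal Python sketch of that in-between layer. It is not the model from Damasio and Man's paper. Every name and number in it (`CarState`, `SET_POINTS`, the heating rates, the 10-minute look-ahead) is an assumption invented for illustration. The idea is simply that the car scores how far its "vital signs" have drifted from a healthy set point, predicts where each possible action would leave it, and picks the action that makes the most progress while staying inside its safe range.

```python
from dataclasses import dataclass

# All values below are hypothetical, chosen only to illustrate the idea.
# Each vital sign has a (healthy set point, tolerance) pair.
SET_POINTS = {"engine_temp_c": (90.0, 30.0), "oil_level": (1.0, 0.5)}

# Assumed engine temperature change in degrees C per minute for each action,
# ordered from most to least progress on the commute.
HEAT_PER_MIN = {"continue": 1.5, "slow_down": 0.5, "pull_over": -2.0}


@dataclass
class CarState:
    engine_temp_c: float      # current engine temperature
    oil_level: float          # fraction of a full tank, 0.0 to 1.0
    minutes_remaining: float  # planned commute time still to drive


def drive(state: CarState) -> float:
    """Distance from homeostasis; 1.0 or more means outside the safe range."""
    temp_target, temp_tol = SET_POINTS["engine_temp_c"]
    oil_target, oil_tol = SET_POINTS["oil_level"]
    temp_dev = max(0.0, state.engine_temp_c - temp_target) / temp_tol
    oil_dev = max(0.0, oil_target - state.oil_level) / oil_tol
    return max(temp_dev, oil_dev)


def predict(state: CarState, action: str, horizon_min: float = 10.0) -> CarState:
    """Project the state forward, looking no further than the commute itself."""
    minutes = min(horizon_min, state.minutes_remaining)
    new_temp = state.engine_temp_c + HEAT_PER_MIN[action] * minutes
    return CarState(new_temp, state.oil_level, state.minutes_remaining)


def choose_action(state: CarState) -> str:
    """Pick the most progress-making action predicted to stay in the safe range."""
    for action in HEAT_PER_MIN:  # dicts keep insertion order
        if drive(predict(state, action)) < 1.0:
            return action
    return "pull_over"  # nothing looks safe, so protect the engine


if __name__ == "__main__":
    print(choose_action(CarState(90.0, 1.0, 30.0)))   # healthy engine
    print(choose_action(CarState(118.0, 1.0, 30.0)))  # running hot
```

A plain warning light would treat a 110-degree engine the same way whether the driver had two minutes or half an hour left to go. This sketch weighs the reading against the planned commute, which is the kind of prioritizing the sensor-only car lacks.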

Human brains do this without, well, a second thought. Our many senses (not just five) feed constant input into our brains, and our body systems adjust in response. People dislike the idea that their brains and bodies are basically machines, even if our complexity will probably never be fully understood by human scientists. What the scientists’ paper represents is the way increasingly complex materials and computing are bringing machines closer to our level, not the other way around.

Caroline Delbert is a writer, avid reader, and contributing editor at Pop Mech. She's also an enthusiast of just about everything. Her favorite topics include nuclear energy, cosmology, math of everyday things, and the philosophy of it all.