Should robots be taxed? Bill Gates gave his somewhat controversial answer to that question last week, declaring that we should tax robot workers as if they were human. Gates has suggested this as the answer to the economic problem posed by mass automation, in place of the increasingly popular proposal of a Universal Basic Income. UBI has become a hotbed of controversy, branded as everything from the saviour of society to excessive welfare. The idea of taxing robots to make up for lost tax revenue, meanwhile, has attracted vocal support and opposition alike.

Speaking in an interview with Quartz, the enigmatic Microsoft founder had this to say: “Right now, the human worker who does, say, $50,000 worth of work in a factory, that income is taxed and you get income tax, social security tax, all those things. If a robot comes in to do the same thing, you’d think that we’d tax the robot at a similar level.”

Gates isn’t the first person to offer this as a solution to the unprecedented unemployment that looms in the near future, and he is unlikely to be the last. Yet every time some form of robot tax is suggested, it is lambasted by free-market capitalists. In fact, the European Parliament recently rejected this very idea in favour of a set of guidelines and ethics rules on how businesses should go about manufacturing and deploying automated robots in place of human workers. At least here the EU is offering workers some form of protection. It is a small one, admittedly, but it is a recognition of the problem.

The Financial Times (amongst others) have been particularly critical of the idea, arguing that a tax on robot workers would restrict or even halt the inevitable march of innovation and progress. They argue that automation and technology have always improved and benefited society, so why should this be any different?

I can see the logic in their argument, and I agree that a robot tax is likely to slow the pace of innovation. But while the rate could easily be set so that investing in new technology remains prudent, the financial and human costs of automation should also be considered. What happens to all the money in the economy when there are no human workers? How do businesses keep selling what they manufacture once they have laid off all of their consumers?

The Financial Times have also suggested that there is no need for humans to fear complete automation while robots remain so expensive and AI still has a long way to go before it is truly smarter than humans. But it is naïve to think that this moment is not on the horizon; we cannot simply plough into the future, automating every imaginable part of society, without recognising that there could be serious economic and social consequences.

Businesses, especially those leading the latest tech revolution, will need to consider the socio-economic cost of automation before anyone else. Governments can plan and provide for workers who have been laid off, but in the end, is slightly less innovation a worthwhile trade-off for a healthy and functioning consumer society? To quote Mr Gates on the issue:

“If you can take the labour that used to do the thing automation replaces, and financially and training-wise and fulfillment-wise have that person go off and do these other things, then you’re net ahead. But you can’t just give up that income tax, because that’s part of how you’ve been funding that level of human workers.”

He proposed the tax as a way to pump money into industries that require human employment, such as child or senior care: places where technology and automation are ill-suited to the complex and volatile situations that arise every day, and where a human touch is far more beneficial than even a super-intelligent AI. Maybe that is where the focus needs to go.

A modest tax on robots to fund training for jobs that require human input is surely a small price to pay for moving towards an increasingly automated economy. The automated world is on our doorstep, and we need to decide how we are going to respond.

If we can tax robots, should we also give them rights, or even representation? The defining slogan of the American War of Independence was “no taxation without representation”, so if we were to levy a robot tax, would we be obligated to give robots inalienable rights and fair representation?

Marcus du Sautoy, a mathematician from the University of Oxford, argued that the question of rights for robots should be judged on one very simple test: whether robots have the ability to feel, suffer, or have a “sense of self”. In other words, if robots have a form of consciousness, whether natural or synthesised, they should be given rights, although whether those rights should be equal to human rights remains a major question.

Whether robots will ever develop a level of consciousness on par with (or even close to) that of humans depends on who you ask. Many technologists believe that this could come about through intelligent AIs designing the next generation of AI, taking human decisions out of the design process.

It has been suggested that, in order to function to the best of their ability, AIs may be designed with the ability to feel pain, much as animals and humans evolved to feel pain as a survival mechanism.

Dutch computer-science expert Ronald Siebes famously commented: “Robots are starting to look so much like humans that we also have to think about protecting them in the same way as we protect humans.” So perhaps the question of whether robots can feel pain is obsolete? Robots and AIs that have the ability to think “freely” could be seen as human, or at least human enough to deserve rights.

According to Alain Bensoussan, a lawyer who specialises in the rights of robots, the more autonomous a robot or machine becomes, the more deserving it is of rights. In the April 2015 edition of the French magazine L’Express, he stated: “The more independent the machine is of its owner, the closer it gets to meriting human rights. I’m not responsible for my car, which can drive itself to Toulouse in the same way that I’m responsible for my toaster.”

This argument seems somewhat flawed. We already share this planet with beings that are completely independent of humanity, and yet they are treated as second-class citizens on a planet they have inhabited far longer than we have. Animal rights are often dismissed on the grounds that animals’ lives are less important than humans’, because animals are not as cognitively developed, or capable of higher thought in the way (most) humans are. In “An Introduction to the Principles of Morals and Legislation” (1789), Jeremy Bentham suggested that one day animals would be recognised as deserving the same rights as any living being:

“The day may come, when the rest of the animal creation may acquire those rights which never could have been withholden from them but by the hand of tyranny… The question is not, can they reason? nor, can they talk? but, can they suffer?”

This brings us back to whether suffering is a sufficient litmus test for rights: if animals suffer, surely all animals should have rights? Yet some animals are deemed more important than others; no one is punished for poisoning rats, yet poisoning your dog is frowned upon. So perhaps whether rights should be conferred on robots will depend on a combination of their intellect, their ability to think freely, their ability to suffer, and their usefulness to society.

Ultimately, as with many of the hypothetical situations we discuss regarding AI, the answer will depend on our designs. If we want to see robots as equals, we will build them and bill them as equals; but if we want to create a being to serve humanity, it will not be taught to suffer or think freely. The real trouble comes when we create a being as an equal but then enslave it and treat it as a lesser form of life. So treat your robots well, and they will work for and with humanity!

Let’s look at how treating robots as equals could affect humans as a species. Would the lines between robot and human become blurred? And would we start to feel emotions towards the robots we work and live alongside each day?

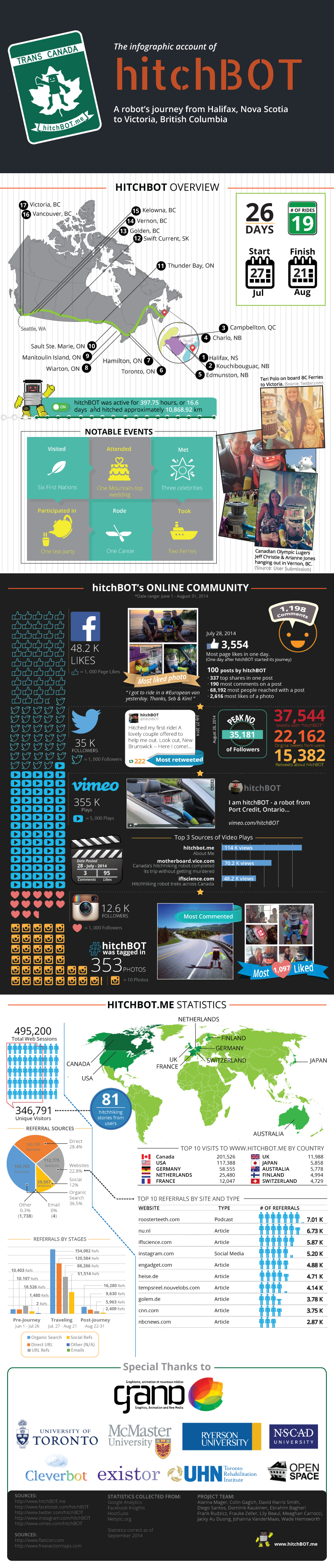

Take hitchBOT, for example: a robot designed to travel by hitchhiking rides with other travellers. Having already covered 10,000 kilometres across the whole of Canada and made his way around Germany and the Netherlands, hitchBOT set out in 2015 to travel from Salem to San Francisco; this was to be his crowning achievement. However, barely two weeks later, hitchBOT was found destroyed in an alley in Philadelphia and had to be shipped back to his creators in pieces.

When I first read about this story I felt a strange mix of emotions. I was sad for the little robot that just wanted to go to Cali, but why? It couldn’t feel anything; it had no capacity for emotion or pain. So was it natural to feel sad about the loss of a robot that couldn’t care less about what happened to it?

This isn’t an unusual reaction: people get attached to pieces of technology all the time. Our phones, cameras, laptops, anything we spend an extended period of time with, we tend to develop some form of emotional attachment to. Julie Carpenter, an educational psychologist at the University of Washington, commented:

“The technology is being developed and integrated before we fully understand the long-term psychological ramifications… How we treat robots and how they affect us emotionally – we’re not really sure what’s going to happen.”

She specialises in the relationships between robots and people, and the psychological effects those relationships can have. She spends most of her time working with US military bomb disposal units, studying how the soldiers interact with their bomb disposal robots. According to Carpenter, the technicians often develop bonds with their robots, “lending them human characteristics and naming them, much like a proud owner might do to a new car”. Many see the robots as fellow team members with their own quirks and personalities, so the soldiers are understandably upset when one of them is damaged or blown up. One technician who spoke to New Scientist described his conflicted feelings: he was sad to see the robot blown up, but glad it was the robot rather than one of his friends. He described it as “a sense of loss from something happening to one of your robots. Poor little fella.”

This is hardly a new phenomenon: people have been naming their cars, trains, and ships for as long as those machines have existed. Humans have a tendency to attach themselves to the objects in their lives and project human qualities onto them, and when those objects are mechanical, that humanisation is amplified.

There have been no major studies into the long-term effects this sort of relationship with robots will have on humanity, so for now we can only speculate. The reality is that as robots come closer and closer to human levels of operation and intelligence, the boundaries between humans and robots will become increasingly blurred. Moral and ethical issues are going to arise, alongside the complex relationships we may build with artificial intelligence in the workplace. However, until robots are capable of even simulating emotion, I believe we have nothing to fear regarding the potential psychological effects of living long-term with robots and machines.

By Josh Hamilton