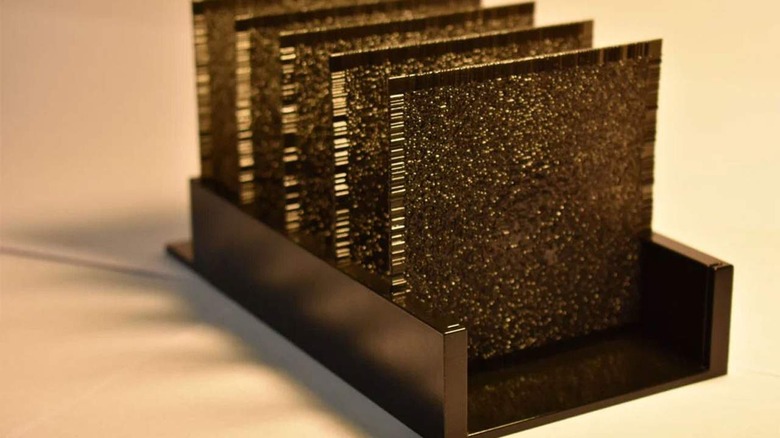

3D printed all-optical diffractive deep neural network created at UCLA

Researchers from UCLA have used a 3D printer to create an artificial neural network able to analyze large volumes of data and identify objects at the speed of light. The system is called a diffractive deep neural network (D2NN). It uses the light scattered by an object to identify the object.

The UCLA researchers built the system from passive diffractive layers that work together, with the design itself produced by deep learning. They first created the design in a computer simulation and then used a 3D printer to make thin, eight-centimeter-square polymer wafers. Each wafer has an uneven surface that helps diffract light coming from an object.
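How a stack of uneven wafers reshapes light can be illustrated with a small simulation. The following is a minimal one-dimensional sketch, not the researchers' code: it assumes each pixel only delays the wave in proportion to its thickness, and it uses a simplified Huygens-style sum of secondary waves to carry the field from one layer to the next. The function names and every parameter (`pitch`, `distance`, `wavelength`, `refractive_index`) are hypothetical choices made for illustration.

```python
import cmath
import math


def apply_layer(field, heights, wavelength, refractive_index):
    """Phase-only layer: thicker material delays the wave at that pixel more.

    All lengths share one unit (for example millimeters). The index value
    passed in is an illustrative assumption, not a measured property.
    """
    k = 2 * math.pi / wavelength
    return [
        u * cmath.exp(1j * k * (refractive_index - 1.0) * h)
        for u, h in zip(field, heights)
    ]


def propagate(field, pitch, distance, wavelength):
    """Carry a 1D complex field to a parallel plane `distance` away.

    Every source pixel is treated as a secondary emitter whose wave reaches
    every destination pixel (a simplified Huygens-style sum).
    """
    n = len(field)
    k = 2 * math.pi / wavelength
    out = []
    for j in range(n):
        total = 0j
        for i in range(n):
            r = math.hypot((j - i) * pitch, distance)
            # Amplitude falls off with r; phase advances by k * r.
            total += field[i] * cmath.exp(1j * k * r) / r
        out.append(total)
    return out


def forward(field, layers, pitch, distance, wavelength, refractive_index):
    """Pass the field through each layer in turn and return output intensity."""
    for heights in layers:
        field = apply_layer(field, heights, wavelength, refractive_index)
        field = propagate(field, pitch, distance, wavelength)
    return [abs(u) ** 2 for u in field]
```

In this sketch the list of per-pixel `heights` for each layer plays the role of the printed surface profile; a design procedure would adjust those heights until the output intensity lands where it is wanted.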

Light at terahertz frequencies is passed through the 3D printed wafers. Each layer is composed of tens of thousands of pixels that the light can travel through. In the design, each type of object is assigned a pixel, and light coming from an object is diffracted toward the pixel assigned to its type. This allows the D2NN to identify an object in the same amount of time it would take a computer to simply see the object.
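The readout step described above amounts to asking which assigned spot on the output plane received the most light. Here is a minimal sketch of that decision rule, assuming the output plane is available as a 2D grid of intensity values and that each object type is assigned a small rectangular region of that grid. The function name `classify`, the region format, and the labels in the usage note are hypothetical.

```python
def classify(intensity, regions):
    """Pick the object type whose assigned region collected the most light.

    intensity: 2D list of non-negative floats sampled at the output plane.
    regions:   dict mapping a label to (row_start, row_stop, col_start,
               col_stop), with stop indices exclusive.
    Returns the winning label and the per-label totals.
    """
    scores = {}
    for label, (r0, r1, c0, c1) in regions.items():
        scores[label] = sum(sum(row[c0:c1]) for row in intensity[r0:r1])
    best = max(scores, key=scores.get)
    return best, scores
```

For example, with regions such as `{"shirt": (0, 2, 0, 2), "shoe": (2, 4, 2, 4)}` on a 4 by 4 grid, the function returns whichever label's corner holds more total intensity. No computation happens in the optical device itself; this code only mimics what a detector at the output would report.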

Using a branch of AI called deep learning, the network was trained to learn the pattern of diffracted light that each object produces as its light passes through the device. Deep learning teaches machines through repetition, with patterns emerging over time. In experiments, the device accurately identified handwritten numbers and items of clothing.

The device was also trained to act as an imaging lens, similar to how a typical camera lens works. Because the device is made with a 3D printer, the D2NN can be built with larger layers and more of them, resulting in a device with hundreds of millions of artificial neurons. Larger devices could identify many more objects simultaneously, with the potential to perform more complex data analysis. Another critical aspect of the D2NN is cost: the researchers say the device could be reproduced for less than $50.