Time-of-flight (ToF) technology has advanced considerably since it was first theorised in the 1970s, and it now underpins advanced imaging for autonomous mobile robots (AMRs). AMRs are most commonly used in industrial warehouses, where ToF technology helps them perceive their surroundings accurately.

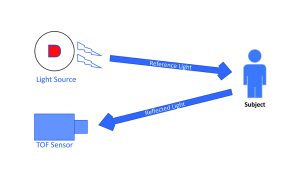

ToF technology measures how long it takes for an object, particle or wave to travel from point A to point B. A ToF camera calculates the distance to an object by measuring the time between a signal’s emission from the source and its return to the detector after being reflected from the object. ToF cameras use a sensor that is highly sensitive to the laser’s specific wavelength, typically around 850nm or 940nm, to capture the light reflected from objects. The core of ToF technology lies in precisely measuring the time delay (∆T) between the emission of light and the reception of the reflected light by the camera or sensor.

ToF calculation

This ∆T serves as the key parameter, as it is directly proportional to twice the distance between the camera or sensor and the object in focus. To calculate depth using ToF technology, the following formula is applied:

d = cΔT/2

Here, ‘d’ represents the estimated depth, and ‘c’ denotes the velocity of light, a fundamental constant. By precisely measuring the time delay and applying this formula, ToF cameras can provide accurate depth information, enabling them to perceive their surroundings in three dimensions.
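As a rough worked example of the formula above, the following Python sketch converts a round-trip delay into a depth and back again. The function names and the 20ns sample delay are illustrative assumptions, not values taken from any particular camera.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def depth_from_delay(delta_t_s: float) -> float:
    """Return depth d = c * delta_t / 2 in metres for a round-trip delay in seconds."""
    if delta_t_s < 0:
        raise ValueError("time delay cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * delta_t_s / 2.0


def delay_from_depth(depth_m: float) -> float:
    """Inverse relation: the round-trip delay expected for a given depth."""
    return 2.0 * depth_m / SPEED_OF_LIGHT_M_PER_S


# Illustrative value: a 20 ns round trip corresponds to roughly 3 m.
print(f"{depth_from_delay(20e-9):.3f} m")  # prints "2.998 m"
```

Run the other way, a target 1m away returns light after only about 6.67ns, which is why precise timing matters so much for depth accuracy.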

Figure 1 shows a ToF camera measuring the distance to a target object.

Figure 1: A ToF camera measuring depth

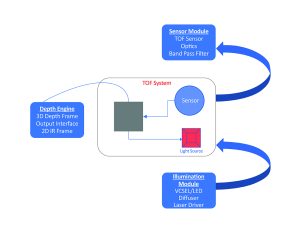

There are three essential components in a ToF camera. The first is a ToF sensor and sensor module. These components are responsible for gathering light data that gets reflected from the target object. The sensor’s primary function is to collect the reflected light from the scene and convert it into depth data on each pixel of the array. The quality of the depth map generated is directly influenced by the resolution of the sensor, with higher resolutions resulting in better quality depth maps. Additionally, the sensor module in a ToF camera incorporates optics, often featuring a large aperture to maximise light collection efficiency.

The second component is the light source. ToF cameras typically use either a vertical-cavity surface-emitting laser or an LED as the light source. These sources emit light, usually in the near infrared region, which is ideal for ToF measurements.

Figure 2: Key components of a ToF camera system

The final component is the depth processor, which plays a critical role in the ToF camera’s architecture. Its primary task is to process the raw pixel data received from the sensor and convert it into depth information, which is essential for accurate distance measurement and 3D imaging. (Figure 2 shows the ToF camera set-up.)
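To make the depth processor’s job more concrete, here is a minimal Python sketch that turns a grid of per-pixel round-trip delays into a depth map. It assumes the raw data is already available as delays in nanoseconds, with `None` marking pixels that received no usable return; that data layout and the function name are hypothetical, and real depth processors typically also handle noise filtering and calibration.

```python
from typing import List, Optional

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def delays_to_depth_map(
    delays_ns: List[List[Optional[float]]],
) -> List[List[Optional[float]]]:
    """Convert per-pixel round-trip delays (ns) into per-pixel depths (m).

    A pixel with no usable return is given as None and stays None.
    """
    depth_map = []
    for row in delays_ns:
        depth_row = []
        for delay in row:
            if delay is None:
                depth_row.append(None)
            else:
                # d = c * delta_t / 2, with the delay converted from ns to s.
                depth_row.append(SPEED_OF_LIGHT_M_PER_S * delay * 1e-9 / 2.0)
        depth_map.append(depth_row)
    return depth_map


# Illustrative 2 x 3 "sensor" with one missing return.
raw = [[10.0, 10.0, None],
       [20.0, 20.0, 40.0]]
for row in delays_to_depth_map(raw):
    print(["-" if d is None else f"{d:.2f}" for d in row])
```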

ToF technology in mobile robots

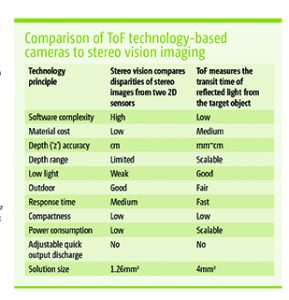

Modern stereo vision systems use an infrared pattern projector to illuminate the surroundings and compare the disparities between the images from the 2D sensors, which secures good low-light performance. ToF technology goes a step further, using a sensor, a lighting unit and a depth processing unit to calculate depth for mobile robots. As a result, ToF cameras can be used immediately, without needing further calibration.

The Table (right) compares ToF technology-based cameras to stereo vision imaging.

While performing scene-based tasks, the ToF camera is placed on the mobile robot to extract 3D images at high frame rates – with rapid segmentation of the background or foreground. Since ToF cameras also use active lighting components, mobile robots can perform tasks in brightly-lit conditions or in complete darkness.
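One simple way to picture depth-based segmentation is a distance threshold applied to the depth map. The Python sketch below assumes a small depth map in metres and a hypothetical 2m cut-off; it illustrates the idea only and is not the method used by any specific ToF camera.

```python
from typing import List


def segment_foreground(
    depth_map_m: List[List[float]], threshold_m: float
) -> List[List[bool]]:
    """Return a mask that is True where a pixel is closer than threshold_m."""
    return [[depth < threshold_m for depth in row] for row in depth_map_m]


# Illustrative depth map: a wall about 4 m away with a nearer object in one corner.
depth_map = [
    [4.0, 4.1, 4.0],
    [4.0, 1.2, 1.3],
    [4.1, 1.2, 1.2],
]
mask = segment_foreground(depth_map, threshold_m=2.0)
for row in mask:
    print("".join("#" if is_fg else "." for is_fg in row))
```

Because the decision is a single comparison per pixel, this kind of segmentation is cheap enough to run at high frame rates.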

The role of ToF cameras is well defined in warehouse operations, where they have been instrumental in equipping automated guided vehicles and AMRs with depth-sensing intelligence. They help these vehicles perceive their surroundings and capture depth imaging data to undertake business-critical functions with accuracy, convenience, and speed.

These functions include:

* Mapping

ToF cameras create a map of an unknown environment by measuring the transit time of light reflected from objects back to the source. They use the SLAM (simultaneous localisation and mapping) algorithm, which requires 3D depth perception for precise mapping. For instance, using 3D depth sensing, these cameras can help create pre-determined paths within the premises for mobile robots to move around.

* Navigation

AMRs tend to move on a specified map from point A to point B, but with SLAM algorithms they can also do so in an unknown environment. Using ToF technology, AMRs can process information quickly and analyse their environment in 3D before deciding which path to take.
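The path decision can be illustrated with a small grid search. The Python sketch below assumes the environment has already been reduced to a 2D occupancy grid (1 for occupied, 0 for free) and uses breadth-first search as a stand-in planner; the grid, the function name and the choice of algorithm are assumptions for illustration, since the article does not specify how AMRs plan their routes.

```python
from collections import deque
from typing import List, Optional, Tuple

Cell = Tuple[int, int]


def plan_path(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search on a grid where 1 = occupied and 0 = free.

    Returns the shortest 4-connected path from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Illustrative map: a shelf (1s) blocks the direct route from top-left to bottom-left.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(plan_path(grid, start=(0, 0), goal=(2, 0)))
```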

* Obstacle detection and avoidance

AMRs are likely to encounter obstacles while navigating a warehouse. This makes it a priority for them to be able to process information quickly and precisely. Equipped with ToF cameras, AMRs can redefine their paths if they run into an obstacle.
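A minimal Python sketch of the obstacle check might look like the following. It assumes the depth map covers only the robot’s travel corridor (so the floor and side walls are not counted), uses `None` for pixels with no valid return, and takes a hypothetical safety distance; all names and values are illustrative.

```python
from typing import List, Optional

DepthMap = List[List[Optional[float]]]


def nearest_obstacle_m(depth_map_m: DepthMap) -> Optional[float]:
    """Return the smallest valid depth in the map, or None if no pixel is valid."""
    valid = [d for row in depth_map_m for d in row if d is not None]
    return min(valid) if valid else None


def must_replan(depth_map_m: DepthMap, safety_distance_m: float) -> bool:
    """True when something lies closer than the safety distance."""
    nearest = nearest_obstacle_m(depth_map_m)
    return nearest is not None and nearest < safety_distance_m


# Illustrative frame: one pixel reports an object 0.4 m ahead.
frame = [[3.0, 0.4],
         [None, 2.0]]
if must_replan(frame, safety_distance_m=0.5):
    print("obstacle inside safety distance: choose a new path")
```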

* Localisation

Usually, the ToF camera helps AMRs identify objects on a known map by scanning the environment and matching real-time information with pre-existing data. Localisation of this kind normally relies on a GPS signal, which is difficult to receive in indoor environments such as warehouses. An alternative is a localisation feature that operates locally. With ToF cameras, AMRs can capture 3D depth data by calculating their distance from reference points in a map. Then, using triangulation, the AMR can pinpoint its exact position. This enables seamless localisation, making navigation easy and safe.
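The position fix can be sketched in a few lines of Python. The article calls this step triangulation; computing a position from measured distances to known points is, strictly speaking, trilateration, and that is what this sketch does. It assumes a flat 2D map, three non-collinear reference points with known coordinates, and noise-free distances; the function name and the coordinates are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def trilaterate(
    p1: Point, r1: float, p2: Point, r2: float, p3: Point, r3: float
) -> Point:
    """Estimate (x, y) from distances r1..r3 to three known reference points.

    Subtracting the first circle equation from the other two leaves a
    2x2 linear system, solved here with Cramer's rule.
    """
    def k(p: Point, r: float) -> float:
        return p[0] ** 2 + p[1] ** 2 - r ** 2

    a1, b1 = 2 * (p2[0] - p1[0]), 2 * (p2[1] - p1[1])
    a2, b2 = 2 * (p3[0] - p1[0]), 2 * (p3[1] - p1[1])
    c1 = k(p2, r2) - k(p1, r1)
    c2 = k(p3, r3) - k(p1, r1)
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("reference points must not be collinear")
    return ((c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det)


# Hypothetical reference points (metres) and a robot that is truly at (1, 1).
refs = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
dists = [math.dist((1.0, 1.0), ref) for ref in refs]
x, y = trilaterate(refs[0], dists[0], refs[1], dists[1], refs[2], dists[2])
print(f"estimated position: ({x:.2f}, {y:.2f})")
```

With real, noisy depth readings, more than three reference points and a least-squares fit would usually be preferable to this exact solution.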

Electronics Weekly Electronics Design & Components Tech News
