Neural network model shows why people with autism read facial expressions differently

People with autism spectrum disorder have difficulty interpreting facial expressions.

Using a neural network model that simulates brain function on a computer, a group of researchers based at Tohoku University has unraveled how this difficulty arises.

The journal Scientific Reports published the results on July 26, 2021.

"Humans recognize different emotions, such as sadness and anger, by looking at facial expressions. Yet little is known about how we come to recognize different emotions based on the visual information of facial expressions," said paper coauthor Yuta Takahashi.

"It is also not clear what changes occur in this process that lead to people with autism spectrum disorder struggling to read facial expressions."

To investigate, the research group drew on predictive processing theory. According to this theory, the brain constantly predicts the next sensory stimulus and adapts when its prediction is wrong. Sensory information, such as facial expressions, helps reduce prediction error.
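The predict-compare-adapt loop described by the theory can be illustrated with a minimal Python sketch. This is a toy example, not the researchers' model: the function name `run_predictive_loop`, the `learning_rate` value, and the constant input signal are all assumptions made for illustration.

```python
def run_predictive_loop(observations, learning_rate=0.2, initial_estimate=0.0):
    """Toy predictive processing loop (illustrative assumption, not the paper's model).

    The current estimate serves as the prediction of the next observation;
    after each observation, the estimate moves a fraction of the way toward it.
    """
    estimate = initial_estimate
    errors = []
    for observed in observations:
        # Prediction error: difference between what arrived and what was expected.
        error = observed - estimate
        errors.append(abs(error))
        # Adapt the internal estimate to reduce future prediction error.
        estimate += learning_rate * error
    return estimate, errors


# A constant "sensory" signal: the prediction error shrinks with every step.
signal = [1.0] * 30
final_estimate, errors = run_predictive_loop(signal)
```

With a steady input, each update shrinks the remaining error by the same fraction, so the estimate settles close to the observed value.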

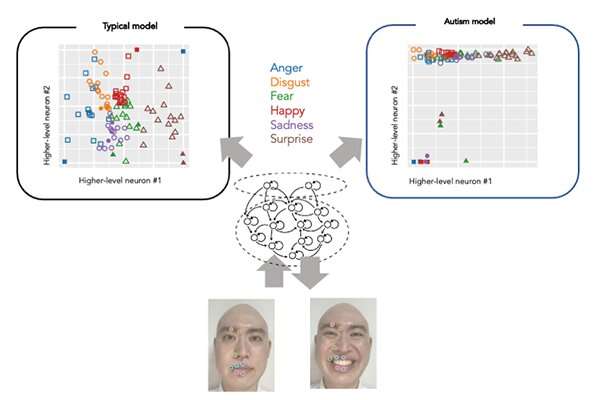

The artificial neural network model incorporated predictive processing theory and reproduced the developmental process by learning to predict how parts of the face would move in videos of facial expressions. After this training, clusters of emotions self-organized in the model's higher-level neuron space, even though the model was never told which emotion the facial expression in each video corresponded to.

The model could generalize to unknown facial expressions that were not included in the training, reproducing facial part movements and minimizing prediction errors.
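As a rough illustration of what learning to predict movement and then generalizing to unseen input means, the sketch below trains a single weight to predict the next value of a sequence and evaluates it on a sequence it never saw. It is a deliberately simplified stand-in, not the recurrent network used in the study; the helper names, the geometric "movement" sequences, and all parameter values are hypothetical.

```python
def make_sequence(start, ratio=0.9, length=20):
    """Hypothetical 'movement' trajectory: each value is ratio times the previous one."""
    seq = [start]
    for _ in range(length - 1):
        seq.append(seq[-1] * ratio)
    return seq


def train_next_step_weight(sequences, learning_rate=0.05, epochs=200):
    """Learn w so that w * x[t] approximates x[t + 1], by stochastic gradient descent."""
    w = 0.0
    for _ in range(epochs):
        for seq in sequences:
            for current, nxt in zip(seq, seq[1:]):
                error = w * current - nxt              # prediction error for this step
                w -= learning_rate * error * current   # gradient step on squared error
    return w


def mean_prediction_error(w, seq):
    """Average absolute next-step prediction error over one sequence."""
    errs = [abs(w * current - nxt) for current, nxt in zip(seq, seq[1:])]
    return sum(errs) / len(errs)


# Train on three trajectories, then test on a starting amplitude never seen in training.
training = [make_sequence(s) for s in (0.5, 1.0, 1.5)]
w = train_next_step_weight(training)
unseen = make_sequence(2.0)
unseen_error = mean_prediction_error(w, unseen)
```

Because the learned weight captures the shared dynamics of the training sequences rather than memorizing them, the prediction error on the unseen sequence stays small.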

Following this, the researchers conducted experiments in which they induced abnormalities in the neurons' activities to investigate the effects on learning development and cognitive characteristics. In the model where the heterogeneity of activity in the neural population was reduced, the generalization ability also decreased, and the formation of emotional clusters in higher-level neurons was inhibited. This led to a tendency to fail to identify the emotion of unknown facial expressions, a symptom similar to those seen in autism spectrum disorder.

According to Takahashi, the study clarified that predictive processing theory can explain emotion recognition from facial expressions using a neural network model.

"We hope to further our understanding of the process by which humans learn to recognize emotions and the cognitive characteristics of people with autism spectrum disorder," added Takahashi. "The study will help advance the development of appropriate intervention methods for people who find it difficult to identify emotions."

More information: Yuta Takahashi et al, Neural network modeling of altered facial expression recognition in autism spectrum disorders based on predictive processing framework, Scientific Reports (2021). DOI: 10.1038/s41598-021-94067-x